Introduction

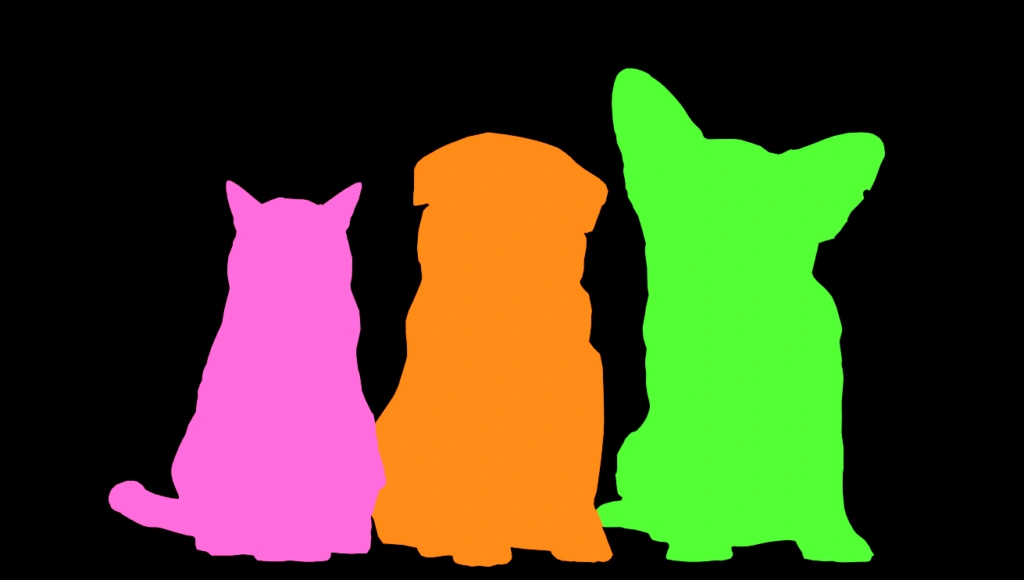

What is Segmentation in Computer Vision?

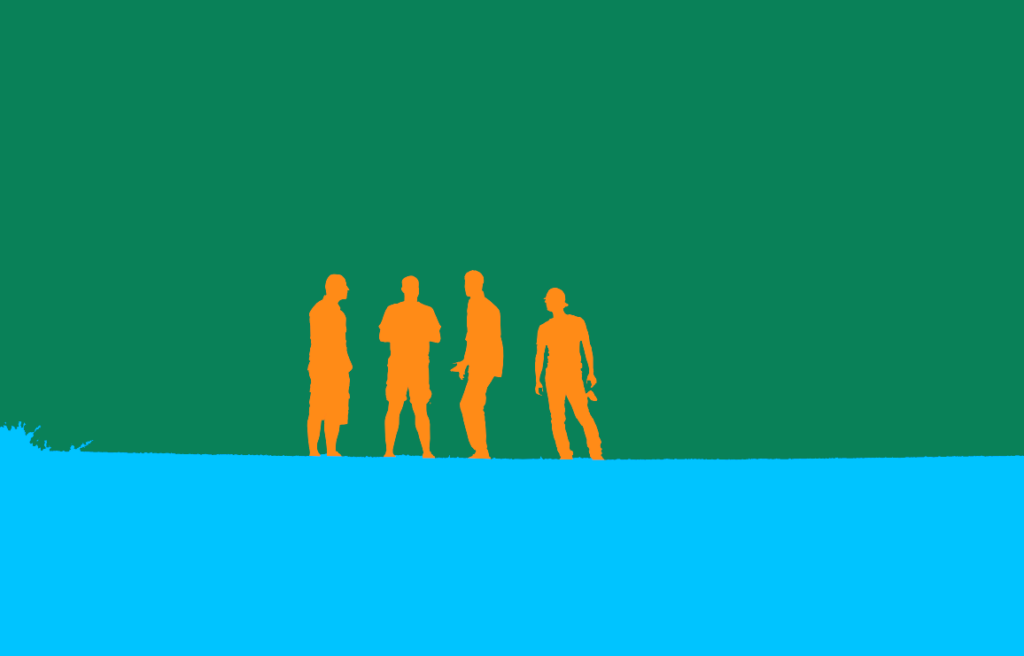

Segmentation is a core task in computer vision that involves dividing an image into multiple parts or regions. Each part represents a different object or area of interest. The goal of segmentation is to simplify and change the representation of an image into something more meaningful and easier to analyze.

Definition of Instance Segmentation

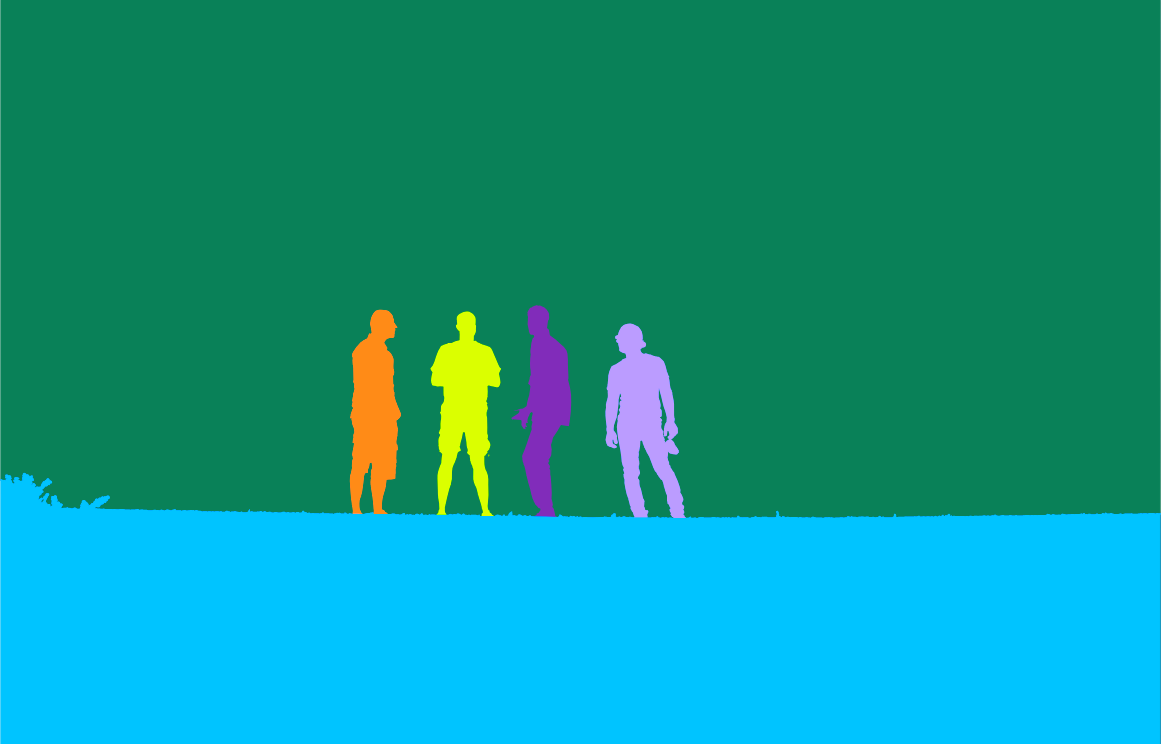

Instance segmentation goes beyond traditional object detection and semantic segmentation by not only identifying objects but also segmenting each instance of an object. It assigns each detected object a unique pixel-level mask, allowing models to distinguish between objects, even if they belong to the same class.

For example, in an image containing multiple cars, instance segmentation produces a separate mask for each car, whereas semantic segmentation would give all car pixels the same "car" label.

Common Applications

Instance segmentation is used across various industries to enhance decision-making processes:

Autonomous driving: Self-driving systems use instance segmentation to identify and track individual pedestrians, vehicles, and traffic signs, supporting safe navigation.

Medical imaging: Segmenting different anatomical structures helps clinicians delineate organ contours and identify abnormalities such as tumors.

Video surveillance: Instance segmentation improves object tracking and activity recognition, making surveillance systems more intelligent.

Augmented reality: AR systems use instance segmentation to map real-world objects and create virtual interactions.

Understanding Instance Segmentation

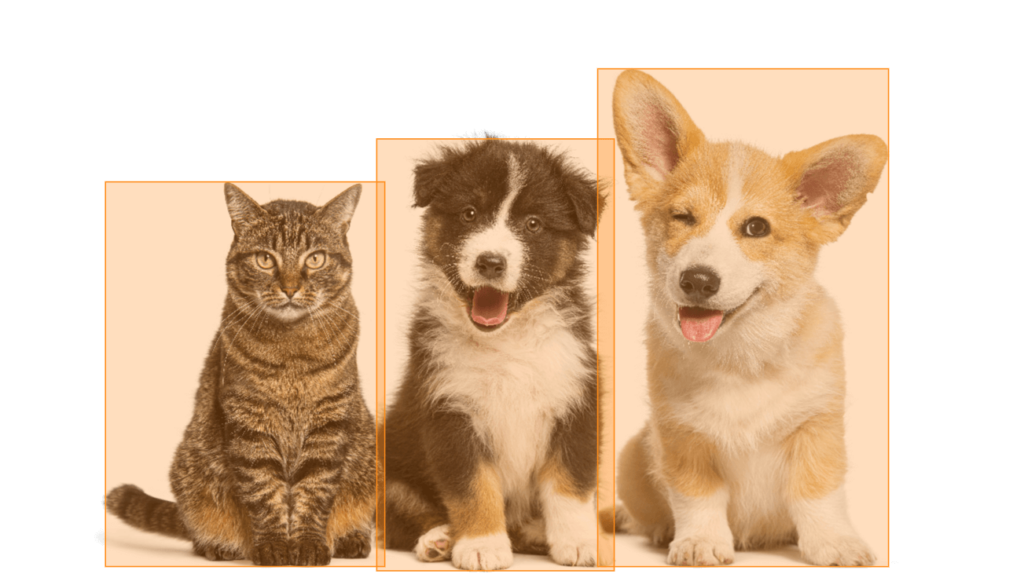

Instance Segmentation vs. Object Detection

Instance segmentation and object detection share a common goal of identifying objects within an image, but they differ in granularity.

Object detection uses bounding boxes to locate and classify objects, giving only a rectangular region for each one. It lacks pixel-level precision: a box also contains background pixels, and when objects overlap, their boxes overlap too, so the boxes alone cannot tell which pixels belong to which object.

In contrast, instance segmentation provides a more refined analysis by assigning a unique mask to each object at the pixel level, making it ideal for complex scenes where objects overlap or have intricate shapes.
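To make the difference in granularity concrete, here is a minimal sketch that derives an object's tight bounding box from its mask and measures what fraction of that box the object actually fills. It assumes masks are stored as nested Python lists of 0/1 values, a representation chosen only for illustration; the function names are hypothetical.

```python
def mask_to_bbox(mask):
    """Tight box (x_min, y_min, x_max, y_max) around the 1-pixels of a binary mask.
    x_max and y_max are exclusive, so width = x_max - x_min."""
    coords = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not coords:
        return None  # empty mask: no box
    xs = [x for x, _ in coords]
    ys = [y for _, y in coords]
    return (min(xs), min(ys), max(xs) + 1, max(ys) + 1)


def box_fill_ratio(mask):
    """Fraction of the pixels inside the tight box that belong to the object."""
    box = mask_to_bbox(mask)
    if box is None:
        return 0.0
    x0, y0, x1, y1 = box
    object_pixels = sum(1 for row in mask for v in row if v)
    return object_pixels / ((x1 - x0) * (y1 - y0))


# A thin diagonal object, such as a pole seen at an angle.
diagonal = [
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 1, 0],
    [0, 0, 0, 1],
]
print(mask_to_bbox(diagonal))    # (0, 0, 4, 4)
print(box_fill_ratio(diagonal))  # 0.25
```

Three quarters of this object's box is background, which is exactly the information a mask keeps and a box discards.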

Instance Segmentation vs. Semantic Segmentation

Semantic segmentation assigns a class label to every pixel in an image. However, it does not distinguish between different instances of the same class.

For example, two people in an image would both be labeled as "person" without distinguishing between them.

Instance segmentation, on the other hand, not only labels each object but also identifies individual instances, assigning a unique mask to each object. This allows for a much more detailed analysis, especially in environments where distinguishing between similar objects is critical, such as in medical imaging or autonomous driving.
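The relationship between the two outputs can be shown by collapsing an instance map into a semantic map. This sketch assumes an instance map where 0 is background and each positive integer identifies one object; the class IDs are hypothetical.

```python
# Hypothetical class IDs for illustration.
BACKGROUND, PERSON = 0, 1


def instances_to_semantic(instance_map, instance_classes):
    """Collapse an instance-ID map into a semantic (class-ID) map.
    instance_map: 2D list, 0 = background, k > 0 = instance k.
    instance_classes: dict mapping instance ID -> class ID."""
    return [[instance_classes.get(i, BACKGROUND) for i in row] for row in instance_map]


# Two people: instance 1 on the left, instance 2 on the right.
instance_map = [
    [1, 1, 0, 2, 2],
    [1, 1, 0, 2, 2],
]
semantic_map = instances_to_semantic(instance_map, {1: PERSON, 2: PERSON})
print(semantic_map)  # [[1, 1, 0, 1, 1], [1, 1, 0, 1, 1]]
```

The conversion only works in one direction: both people become class 1, and once two people touch, the semantic map alone no longer records where one ends and the other begins.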


Instance Segmentation vs. Panoptic Segmentation

Panoptic segmentation represents a hybrid approach, combining both instance and semantic segmentation. It labels every pixel in the image (semantic segmentation) while also distinguishing between individual objects (instance segmentation), creating a comprehensive map of the scene.

In comparison, instance segmentation focuses narrowly on detecting and segmenting individual countable objects ("things" such as people and cars) and leaves amorphous regions ("stuff" such as sky or road) unlabeled.

While instance segmentation excels in tasks requiring fine-grained object distinction, panoptic segmentation is more suitable for applications where both instance-level and scene-level understanding are required, such as in complex urban environments for self-driving cars.
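A panoptic label has to carry both a class and, for countable objects, an instance identity in every pixel. One common way to store this is to pack both into a single integer, in the style of Cityscapes' instance-ID images (class_id * 1000 + instance_id for "thing" pixels, the bare class ID for "stuff" pixels). The sketch below follows that idea; the class IDs and instance numbering are illustrative assumptions.

```python
# Illustrative class IDs: "stuff" classes are amorphous, "thing" classes are countable.
ROAD, SKY = 7, 23   # stuff classes
CAR = 26            # thing class
OFFSET = 1000       # instance numbers must stay below this; thing class IDs must be >= 1


def encode(class_id, instance_id=None):
    """Pack a pixel's panoptic label into one integer."""
    if instance_id is None:  # stuff pixel: class only, no instance identity
        return class_id
    return class_id * OFFSET + instance_id


def decode(value):
    """Unpack an integer label into (class_id, instance_id or None)."""
    if value >= OFFSET:
        return value // OFFSET, value % OFFSET
    return value, None


# One image row: sky, two pixels of car 1, one pixel of car 2, road.
row = [encode(SKY), encode(CAR, 1), encode(CAR, 1), encode(CAR, 2), encode(ROAD)]
print(row)                       # [23, 26001, 26001, 26002, 7]
print([decode(v) for v in row])  # [(23, None), (26, 1), (26, 1), (26, 2), (7, None)]
```

Stuff pixels keep only their semantic class, while thing pixels also say which car they belong to, which is the combination panoptic segmentation requires.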

Key Concepts and Terminology

- Bounding Boxes: In object detection, rectangular frames are used to locate objects.

- Masks: Pixel-level segmentation masks are used in instance segmentation to outline the precise shape of each object.

- Labels: Identifiers that categorize objects into different classes (e.g., "person," "car") and instances (e.g., "person 1," "person 2").

Techniques for Instance Segmentation

Several techniques are commonly used for instance segmentation:

- Mask R-CNN: One of the most popular models, which extends Faster R-CNN by adding a branch for predicting segmentation masks.

- Fully Convolutional Networks (FCNs): These are used for pixel-level classification and have been adapted for instance segmentation tasks.

- DeepLab: A family of semantic segmentation models built on atrous (dilated) convolutions, which capture context at multiple scales; the Panoptic-DeepLab variant extends the approach to instance and panoptic segmentation.

Annotation Methods for Instance Segmentation

Manual Annotation

In manual annotation, human annotators carefully label objects within images by drawing pixel-level masks or bounding boxes. This method is highly accurate but labor-intensive and costly. It's typically used in domains where precision is paramount, such as medical imaging.

Crowdsourced Annotation: Platforms like Amazon Mechanical Turk allow for the distributed labeling of data. While efficient for large datasets, crowdsourced labels vary in quality, so tasks often require secondary validation.
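One simple form of secondary validation is to collect several independent masks for the same object and fuse them. The sketch below uses a pixel-wise majority vote, which is one possible choice rather than a prescribed method; it assumes all masks are the same size, aligned to the same object, and stored as nested lists of 0/1.

```python
def majority_vote(masks):
    """Fuse binary masks from several annotators: keep a pixel if more than
    half of the annotators marked it."""
    n = len(masks)
    height, width = len(masks[0]), len(masks[0][0])
    return [
        [1 if sum(m[y][x] for m in masks) * 2 > n else 0 for x in range(width)]
        for y in range(height)
    ]


# Three crowd workers outline the same small object.
a = [[1, 1, 0], [0, 1, 0]]
b = [[1, 0, 0], [0, 1, 1]]
c = [[1, 1, 0], [0, 0, 1]]
print(majority_vote([a, b, c]))  # [[1, 1, 0], [0, 1, 1]]
```

With an odd number of annotators there are no ties; with an even number, this rule drops pixels that exactly half the annotators marked.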

Automated Annotation

Automated annotation involves using algorithms to label data. While faster than manual methods, these algorithms often struggle with complex cases like occlusions and overlapping objects, requiring human intervention to refine the labels.

Human-in-the-Loop Annotation

This hybrid approach combines the efficiency of automated methods with the accuracy of human intervention. Machines make initial predictions, and humans correct mistakes, optimizing time and accuracy.
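A human-in-the-loop pipeline needs a rule for deciding which machine predictions reach a human and how. The sketch below routes predictions by model confidence; the `Prediction` record, the two thresholds, and the queue names are hypothetical choices for illustration, not a standard.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    image_id: str
    label: str
    score: float  # model confidence in [0, 1]


def route_predictions(predictions, accept_threshold=0.9, discard_threshold=0.3):
    """Split model pre-annotations into queues (thresholds are hypothetical):
    - prefill: confident predictions loaded into the editor; a human still confirms them
    - review:  uncertain predictions shown to an annotator for correction
    - dropped: below discard_threshold, likely false positives; annotators
               draw any missed objects from scratch."""
    prefill, review = [], []
    for p in predictions:
        if p.score >= accept_threshold:
            prefill.append(p)
        elif p.score >= discard_threshold:
            review.append(p)
    return prefill, review


preds = [
    Prediction("img_001", "car", 0.97),
    Prediction("img_001", "person", 0.55),
    Prediction("img_002", "car", 0.12),
]
prefill, review = route_predictions(preds)
print([p.label for p in prefill])  # ['car']
print([p.label for p in review])   # ['person']
```

In practice the thresholds would be tuned on a validation set, trading annotator time against the risk of low-quality pre-labels slipping through.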

How to Perform Manual Instance Segmentation: Steps

Step 1: Prepare Your Tools

- Select Annotation Software: Choose an appropriate annotation tool that supports instance segmentation. Popular options include LabelMe, CVAT, and VGG Image Annotator (VIA).

At Unidata, we use CVAT for these tasks.

Step 2: Import Your Dataset

- Load Images: Import the images you wish to annotate into the selected software. Make sure the dataset is organized and easily navigable to streamline the annotation process.

- Review Dataset Characteristics: Familiarize yourself with the types of objects in the images, their sizes, and the complexity of the scenes.

Step 3: Define Annotation Guidelines

- Establish Clear Guidelines: Create a set of instructions that outline how to annotate different object classes, including edge cases and overlap scenarios. This helps maintain consistency throughout the annotation process.

Step 4: Annotate Objects

- Start Annotating: Begin with the most prominent objects in the image. Use the annotation tool to draw precise boundaries around each object.

- Handle Overlaps: For overlapping objects, carefully delineate each instance. Consider using multi-layer annotations to provide clarity.
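Annotation tools usually store each instance as a polygon, which is converted to a pixel mask for training. The sketch below rasterizes a polygon with the even-odd (ray casting) rule, counting a pixel as inside when its center lies inside the polygon. The pixel-center convention is an assumption; tools differ in how they treat pixels on the boundary.

```python
def point_in_polygon(px, py, polygon):
    """Even-odd rule: count how many polygon edges a ray cast toward +x crosses."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > py) != (y2 > py):  # edge straddles the horizontal line y = py
            x_cross = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
            if px < x_cross:
                inside = not inside
    return inside


def rasterize(polygon, width, height):
    """Binary mask: a pixel is on if its center (x + 0.5, y + 0.5) is inside."""
    return [
        [1 if point_in_polygon(x + 0.5, y + 0.5, polygon) else 0 for x in range(width)]
        for y in range(height)
    ]


# An L-shaped object outlined with six polygon vertices.
l_shape = [(0, 0), (4, 0), (4, 1), (1, 1), (1, 4), (0, 4)]
mask = rasterize(l_shape, 4, 4)
for row in mask:
    print(row)
# [1, 1, 1, 1]
# [1, 0, 0, 0]
# [1, 0, 0, 0]
# [1, 0, 0, 0]
```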

Step 5: Review and Refine Annotations

- Quality Check: After completing the initial round of annotations, review the results to ensure accuracy. Look for mislabels, incomplete boundaries, or inconsistencies.

- Peer Review: If possible, have a colleague review your annotations. This collaborative approach can help identify errors and improve overall quality.
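Part of the quality check can be automated before human review. The sketch below flags a few mechanical problems: labels outside the agreed class list, degenerate polygons, and points outside the image. The class list and the annotation dictionary layout are hypothetical; real checks would follow your own guidelines and export format.

```python
ALLOWED_CLASSES = {"person", "car", "bicycle"}  # hypothetical label set from the guidelines


def polygon_area(points):
    """Shoelace formula: absolute area of a simple polygon [(x, y), ...]."""
    s = 0.0
    for (x1, y1), (x2, y2) in zip(points, points[1:] + points[:1]):
        s += x1 * y2 - x2 * y1
    return abs(s) / 2


def check_annotation(ann, width, height):
    """Return a list of human-readable problems found in one annotation."""
    problems = []
    if ann["label"] not in ALLOWED_CLASSES:
        problems.append(f"unknown label {ann['label']!r}")
    pts = ann["polygon"]
    if len(pts) < 3:
        problems.append("polygon has fewer than 3 points")
    elif polygon_area(pts) == 0:
        problems.append("polygon has zero area")
    if any(not (0 <= x <= width and 0 <= y <= height) for x, y in pts):
        problems.append("polygon extends outside the image")
    return problems


anns = [
    {"label": "car", "polygon": [(10, 10), (50, 10), (50, 40)]},
    {"label": "bus", "polygon": [(0, 0), (5, 5)]},
    {"label": "person", "polygon": [(90, 10), (120, 10), (120, 30)]},
]
for ann in anns:
    print(ann["label"], check_annotation(ann, width=100, height=100))
```

Automated checks like these catch mechanical errors; mislabels and loose boundaries still need a human reviewer.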

Step 6: Export Annotations

- Save the Data: Once satisfied with the annotations, export them in the required format (e.g., COCO, Pascal VOC) that is compatible with your machine learning framework.

- Backup Annotations: Always keep a backup of both your annotated images and the corresponding annotation files to prevent data loss.
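For reference, the sketch below assembles a minimal COCO-style instance segmentation file from polygon annotations. It covers only the core fields (`images`, `annotations`, `categories`, with `segmentation` as flat polygon lists and `bbox` as `[x, y, width, height]`); the helper name and input tuples are hypothetical, and `area` is approximated here by the polygon's shoelace area.

```python
import json


def polygon_area(points):
    """Shoelace formula: absolute area of a simple polygon [(x, y), ...]."""
    s = 0.0
    for (x1, y1), (x2, y2) in zip(points, points[1:] + points[:1]):
        s += x1 * y2 - x2 * y1
    return abs(s) / 2


def to_coco(images, annotations, categories):
    """Assemble a minimal COCO-style dict.
    images: list of (image_id, file_name, width, height)
    annotations: list of (image_id, category_id, polygon), polygon = [(x, y), ...]
    categories: dict category_id -> name"""
    coco = {
        "images": [{"id": i, "file_name": f, "width": w, "height": h} for i, f, w, h in images],
        "annotations": [],
        "categories": [{"id": cid, "name": name} for cid, name in sorted(categories.items())],
    }
    for ann_id, (image_id, category_id, polygon) in enumerate(annotations, start=1):
        xs = [x for x, _ in polygon]
        ys = [y for _, y in polygon]
        coco["annotations"].append({
            "id": ann_id,
            "image_id": image_id,
            "category_id": category_id,
            # Each polygon is stored as a flat [x1, y1, x2, y2, ...] list.
            "segmentation": [[c for point in polygon for c in point]],
            "area": polygon_area(polygon),
            "bbox": [min(xs), min(ys), max(xs) - min(xs), max(ys) - min(ys)],
            "iscrowd": 0,
        })
    return coco


coco = to_coco(
    images=[(1, "street_001.jpg", 640, 480)],
    annotations=[(1, 1, [(100, 100), (200, 100), (200, 150), (100, 150)])],
    categories={1: "car"},
)
print(json.dumps(coco["annotations"][0]))
```

Writing the result with `json.dump` produces a file that keeps each annotation linked to its image through `image_id`.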

Step 7: Prepare for Model Training

- Integrate Annotations: Organize your annotated dataset for training your machine learning model. Ensure that the images and their annotations are correctly linked.

- Perform Data Augmentation: If needed, apply data augmentation techniques to increase the diversity of your dataset, enhancing the model's robustness during training.
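Geometric augmentations must be applied to the annotations exactly as they are applied to the image, or masks and pixels fall out of alignment. This sketch shows a horizontal flip for a polygon, a COCO-style `[x, y, w, h]` box, and a binary mask, assuming continuous coordinates where x maps to `image_width - x`; the function names are hypothetical.

```python
def hflip_annotation(polygon, bbox, image_width):
    """Mirror a polygon [(x, y), ...] and a bbox [x, y, w, h] left to right."""
    flipped_polygon = [(image_width - x, y) for x, y in polygon]
    x, y, w, h = bbox
    flipped_bbox = [image_width - x - w, y, w, h]  # new left edge = old right edge, mirrored
    return flipped_polygon, flipped_bbox


def hflip_mask(mask):
    """Mirror a binary mask (nested lists) left to right."""
    return [row[::-1] for row in mask]


poly, box = hflip_annotation([(100, 100), (200, 100), (200, 150)], [100, 100, 100, 50], 640)
print(poly)  # [(540, 100), (440, 100), (440, 150)]
print(box)   # [440, 100, 100, 50]
print(hflip_mask([[1, 1, 0, 0]]))  # [[0, 0, 1, 1]]
```

Flipping reverses a polygon's winding order, which does not affect its area or its rasterized mask.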

Evaluation Metrics

Mean Average Precision (mAP)

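Mean Average Precision summarizes the precision-recall trade-off. For each class, average precision (AP) is the area under the precision-recall curve of the model's ranked predictions, and mAP averages AP across classes. Benchmarks such as COCO also average over several IoU thresholds (0.50 to 0.95), and mAP is the standard headline metric for comparing instance segmentation models.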
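The sketch below computes AP for one class from predictions already sorted by confidence and marked as true or false positives, using all-point interpolation (precision is made monotonically non-increasing before taking the area under the curve). It is a simplified illustration: COCO's evaluation additionally samples 101 recall points and averages over ten IoU thresholds, so its numbers will differ. It assumes at least one ground-truth object per class.

```python
def average_precision(is_true_positive, num_ground_truth):
    """AP for one class at one IoU threshold.
    is_true_positive: flags for predictions sorted by descending confidence;
    True means the prediction matched a not-yet-matched ground-truth object."""
    precisions, recalls = [], []
    tp = fp = 0
    for hit in is_true_positive:
        if hit:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_ground_truth)
    # Interpolate: precision at each recall level = best precision at any higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum rectangle areas under the interpolated precision-recall curve.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


ap_car = average_precision([True, False, True, True], num_ground_truth=4)
ap_person = average_precision([True, True], num_ground_truth=2)
mean_ap = (ap_car + ap_person) / 2
print(ap_car)   # 0.625
print(mean_ap)  # 0.8125
```

Missed objects lower AP through recall: the car class never reaches recall 1.0 because one of its four objects was never detected.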

Intersection over Union (IoU)

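IoU measures the overlap between the predicted segmentation mask and the ground-truth mask: the number of pixels in their intersection divided by the number of pixels in their union. It ranges from 0 (no overlap) to 1 (a perfect match), and a prediction typically counts as correct when its IoU exceeds a threshold such as 0.5.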
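A minimal sketch of mask IoU, assuming both masks are the same size and stored as nested lists of 0/1 values:

```python
def mask_iou(pred, truth):
    """IoU of two same-sized binary masks: |A and B| / |A or B|."""
    inter = union = 0
    for row_p, row_t in zip(pred, truth):
        for p, t in zip(row_p, row_t):
            inter += bool(p and t)
            union += bool(p or t)
    # Two empty masks have no union; returning 0 here is a convention, some tools use 1.
    return inter / union if union else 0.0


pred = [[1, 1, 0], [1, 1, 0]]
truth = [[0, 1, 1], [0, 1, 1]]
print(round(mask_iou(pred, truth), 3))  # 0.333
print(mask_iou(pred, pred))             # 1.0
```

Here the masks share 2 pixels out of the 6 covered by either one, so IoU is 2/6, below the common 0.5 threshold for a correct prediction.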

Precision, Recall, and F1-Score

- Precision: The fraction of predicted masks that correctly match a ground-truth object (for example, with IoU ≥ 0.5).

- Recall: The fraction of ground-truth objects that are matched by a correct prediction.

- F1-Score: The harmonic mean of precision and recall, 2PR / (P + R), which summarizes both in a single number.
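Precision and recall depend on how predictions are matched to ground-truth objects. The sketch below uses greedy matching, where higher-scoring predictions claim their best unmatched ground-truth mask first and a match requires IoU at or above a threshold (0.5 by default, a common but not universal choice). The input layout is an assumption for illustration.

```python
def iou(a, b):
    """IoU of two same-sized binary masks (nested lists of 0/1)."""
    inter = sum(1 for ra, rb in zip(a, b) for x, y in zip(ra, rb) if x and y)
    union = sum(1 for ra, rb in zip(a, b) for x, y in zip(ra, rb) if x or y)
    return inter / union if union else 0.0


def precision_recall_f1(predictions, ground_truths, threshold=0.5):
    """predictions: list of (score, mask); ground_truths: list of masks."""
    matched = set()
    tp = 0
    for _, pred in sorted(predictions, key=lambda p: p[0], reverse=True):
        best_j, best_iou = None, threshold
        for j, gt in enumerate(ground_truths):
            if j in matched:
                continue  # each ground-truth object can be matched only once
            score = iou(pred, gt)
            if score >= best_iou:
                best_j, best_iou = j, score
        if best_j is not None:
            matched.add(best_j)
            tp += 1
    fp = len(predictions) - tp   # predictions with no acceptable match
    fn = len(ground_truths) - tp  # objects the model missed
    precision = tp / (tp + fp) if predictions else 0.0
    recall = tp / (tp + fn) if ground_truths else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


gts = [[[1, 1, 0, 0], [1, 1, 0, 0]], [[0, 0, 1, 1], [0, 0, 1, 1]]]
preds = [
    (0.9, [[1, 1, 0, 0], [1, 1, 0, 0]]),  # exact match for the first object
    (0.8, [[0, 0, 0, 1], [0, 0, 0, 0]]),  # covers only a quarter of the second object
]
print(precision_recall_f1(preds, gts))  # (0.5, 0.5, 0.5)
```

Lowering the threshold to 0.2 would accept the second prediction as well, which shows why reported precision and recall are only comparable at the same IoU threshold.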

Importance of High-Quality Instance Segmentation

Enhanced Object Recognition

Instance segmentation enhances a model’s ability to identify individual objects in cluttered environments, crucial for applications like autonomous vehicles, where precise object detection and differentiation are essential.

Improved Data Utilization

The pixel-level detail of instance segmentation allows models to extract more meaningful features, improving learning and generalization capabilities. This results in more accurate and robust predictions.

Challenges in Instance Segmentation Annotation and Their Solutions

Occlusion and Object Overlap

Challenge: One of the most significant challenges in instance segmentation annotation is accurately labeling objects that overlap or occlude each other.

For example, in crowded scenes, annotators may find it difficult to draw precise boundaries around objects that are partially hidden behind others. This is particularly problematic in applications like autonomous driving, where understanding individual object boundaries is critical.

Solution: Use annotation tools such as CVAT that support layered (z-ordered) shapes, so annotators can control which object is drawn in front and, where the guidelines call for it, outline occluded parts based on context. Polygon-based annotation can also improve accuracy in complex, overlapping scenes.
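When annotators outline an occluded object's full extent and rely on stacking order to resolve overlaps, the visible masks can be derived by painting layers from back to front. The sketch below illustrates this idea under that assumption; it is not how any particular tool implements it, and the function name is hypothetical.

```python
def composite_by_depth(layers, height, width):
    """Build one instance-ID map from overlapping full-extent masks.
    layers: list of (instance_id, mask) ordered back to front; later layers
    overwrite earlier ones, so each pixel keeps the instance that is visible."""
    canvas = [[0] * width for _ in range(height)]  # 0 = background
    for instance_id, mask in layers:
        for y in range(height):
            for x in range(width):
                if mask[y][x]:
                    canvas[y][x] = instance_id
    return canvas


# A car (instance 1) partly hidden behind a pedestrian (instance 2).
car = [
    [0, 1, 1, 1, 1],
    [0, 1, 1, 1, 1],
]
pedestrian = [
    [0, 0, 1, 1, 0],
    [0, 0, 1, 1, 0],
]
visible = composite_by_depth([(1, car), (2, pedestrian)], height=2, width=5)
print(visible)  # [[0, 1, 2, 2, 1], [0, 1, 2, 2, 1]]
```

Keeping the full car outline and the depth order separately means an annotator who gets the order wrong only has to reorder layers, not redraw boundaries.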

Data Imbalance and Small Object Annotation

Challenge: Small objects and underrepresented classes in a dataset are often more difficult and time-consuming to annotate. Models trained on such imbalanced datasets tend to focus on larger, more frequent objects, leading to poor generalization when dealing with rare or smaller instances.

Solution: To address this, dataset augmentation techniques such as synthetic data generation or minority-class oversampling can be employed. Additionally, annotators should be trained to focus on these difficult cases, and tools can be fine-tuned to detect small object edges more precisely.
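One concrete way to oversample minority classes is repeat factor sampling, the image-level scheme introduced with the LVIS dataset: each class c gets a factor max(1, sqrt(t / f_c)), where f_c is the fraction of images containing c, and each image is repeated according to the rarest class it contains. The sketch below follows that formula; the threshold t = 0.5 is chosen only so the toy example shows an effect (the LVIS paper used a much smaller value).

```python
import math
from collections import Counter


def repeat_factors(image_labels, t=0.5):
    """Repeat factor sampling in the style of LVIS.
    image_labels: dict image_id -> set of class names in the image.
    Returns dict image_id -> repeat factor (>= 1.0) per epoch."""
    num_images = len(image_labels)
    counts = Counter(c for labels in image_labels.values() for c in labels)
    # Classes appearing in fewer than a fraction t of images get a factor above 1.
    class_factor = {c: max(1.0, math.sqrt(t / (n / num_images))) for c, n in counts.items()}
    # An image inherits the largest factor among the classes it contains.
    return {
        img: max((class_factor[c] for c in labels), default=1.0)
        for img, labels in image_labels.items()
    }


labels = {
    "img1": {"car"},
    "img2": {"car", "person"},
    "img3": {"car"},
    "img4": {"car", "scooter"},
}
rf = repeat_factors(labels)
print({k: round(v, 2) for k, v in rf.items()})
# {'img1': 1.0, 'img2': 1.41, 'img3': 1.0, 'img4': 1.41}
```

Fractional factors are usually realized stochastically, for example repeating an image floor(r) times plus once more with probability r - floor(r).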

Consistency Across Annotators

Challenge: Achieving consistency across different annotators can be difficult, especially in subjective cases where object boundaries aren't well-defined. Different annotators may interpret boundaries differently, leading to variability in annotations.

Solution: Create comprehensive annotation guidelines with precise instructions for handling difficult cases, so that all annotators follow the same procedures. Annotation review processes with feedback loops also help standardize results, improving overall quality and consistency.
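Consistency can also be measured directly by having several annotators label the same object and comparing their masks. The sketch below flags annotator pairs whose masks overlap less than a chosen IoU; the 0.8 threshold and the input layout are hypothetical and would be set per project.

```python
from itertools import combinations


def mask_iou(a, b):
    """IoU of two same-sized binary masks (nested lists of 0/1)."""
    inter = sum(1 for ra, rb in zip(a, b) for x, y in zip(ra, rb) if x and y)
    union = sum(1 for ra, rb in zip(a, b) for x, y in zip(ra, rb) if x or y)
    return inter / union if union else 0.0


def flag_disagreements(annotations, min_iou=0.8):
    """annotations: dict annotator name -> binary mask of the same object.
    Returns (annotator_a, annotator_b, iou) for pairs that agree less than min_iou."""
    flagged = []
    for (name_a, mask_a), (name_b, mask_b) in combinations(sorted(annotations.items()), 2):
        score = mask_iou(mask_a, mask_b)
        if score < min_iou:
            flagged.append((name_a, name_b, round(score, 2)))
    return flagged


masks = {
    "alice": [[1, 1, 1, 1, 0], [1, 1, 1, 1, 0]],
    "bob":   [[1, 1, 1, 1, 0], [1, 1, 1, 1, 0]],
    "carol": [[1, 1, 0, 0, 0], [1, 1, 0, 0, 0]],
}
print(flag_disagreements(masks))
# [('alice', 'carol', 0.5), ('bob', 'carol', 0.5)]
```

A flagged pair does not say who is right; it points reviewers at cases where the guidelines may be ambiguous.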

Time-Consuming Nature of Manual Annotation

Challenge: Manually annotating large datasets for instance segmentation is time-intensive, because annotators must outline every instance in high detail. This can significantly delay project timelines, particularly for complex images that require pixel-level precision.

Solution: One way to mitigate this challenge is to use human-in-the-loop (HITL) methods, where pre-trained models make initial predictions that annotators refine. This semi-automated approach can cut down annotation time by up to 50% while maintaining accuracy.

Scalability and Data Volume

Challenge: With the growing size of datasets and the demand for more detailed annotations, scalability becomes a major challenge. As more complex data comes in, ensuring that annotations keep up without sacrificing quality can overwhelm annotation teams.

Solution: The use of scalable cloud-based annotation tools allows for distributing work across large teams, ensuring efficiency without losing quality.

Automated annotation tools can also be incorporated to pre-process vast datasets, which are later validated and refined by human annotators.

Annotator Fatigue

Challenge: Annotating pixel-level details across thousands of images can lead to annotator fatigue, reducing the quality of the annotations over time. Fatigued annotators are prone to making errors, such as mislabeling or missing small objects.

Solution: To combat fatigue, task rotation and shorter annotation sessions can be implemented. Furthermore, user-friendly interfaces and tool features like auto-completion or smart predictions can make the task less monotonous and faster to execute, helping annotators maintain focus and productivity.

Future Trends in Instance Segmentation

Integration with 3D Data

Future models may combine 2D segmentation with 3D object detection, offering a richer spatial understanding. This will be particularly beneficial for autonomous driving and robotics.

Semi-Supervised and Weakly Supervised Learning

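Reducing reliance on fully annotated datasets through semi-supervised learning (training on a small labeled set plus many unlabeled images) or weakly supervised learning (training from cheaper labels such as bounding boxes or image-level tags) could significantly lower the cost and time needed for data preparation.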

Real-Time Instance Segmentation for Edge Devices

As AI moves towards edge computing, models will need to be more lightweight and efficient, enabling instance segmentation on mobile devices and drones.

Summary of Key Points

- Instance segmentation provides pixel-level masks for individual objects, going beyond simple object detection and semantic segmentation.

- It plays a crucial role in industries like autonomous driving, healthcare, and augmented reality.

- Manual, automated, and hybrid annotation methods each have advantages and challenges, with human-in-the-loop providing the best balance of speed and accuracy.

- Evaluation metrics like mAP, IoU, and F1-score are essential for assessing model performance.

- Overcoming challenges like occlusion, data imbalance, and real-time efficiency will be critical as instance segmentation continues to evolve.