Training an object detector isn’t a photo shoot — it’s crowd control in a hurricane. Frames smear, subjects overlap, lighting lies, and the “easy” classes vanish when it matters. Models don’t need curated beauty; they need honest chaos with ground truth.

This 2025 roundup is your field kit: video-first benchmarks that punish drift, 3D AV sets with LiDAR/radar, long-tail catalogs that expose blind spots, aerial/drone views with rotation and tiny targets, crowd and face gauntlets, plus scene-parsing for context. Every dataset is selected to break brittle pipelines and shape production-grade ones.

Choosing the Right Object Detection Dataset

Choosing an object detection dataset is less about luck and more about alignment—between your model’s world and the data’s reality. Use this quick checklist and you’ll ship models that survive outside the lab.

- Task & modality. Do you need images or video, 2D boxes, masks, oriented boxes, or 3D (LiDAR/radar + cams)? Match labels and sensors to your deployment.

- Domain & context. Train on the world you’ll see: urban AV (Waymo/nuScenes), aerial/drone (DOTA/VisDrone), long-tail retail (Objects365/LVIS), video tracking (YouTube-BB/TAO). Include weather, time of day, and camera height.

- Scale & tail. Big, diverse sets boost robustness. Check instances per image and rare classes; long-tail apps need more than COCO’s 80 usual suspects.

- Small objects & resolution. Faces, signs, and UAV targets are tiny. Prefer high-res sources and plan FPN/tiling—or you’ll miss what matters.

- Label quality & constraints. Consistent boxes/masks beat noisy ones. Verify IoU rules, splits, and license/access fit your roadmap and latency budget.

Fast path: Pretrain broad (COCO/Open Images) → fine-tune domain-specific (LVIS/Objects365, Waymo/nuScenes, DOTA/VisDrone, TAO) → stress-test the failure you fear (video drift, tiny/rotated, crowds).

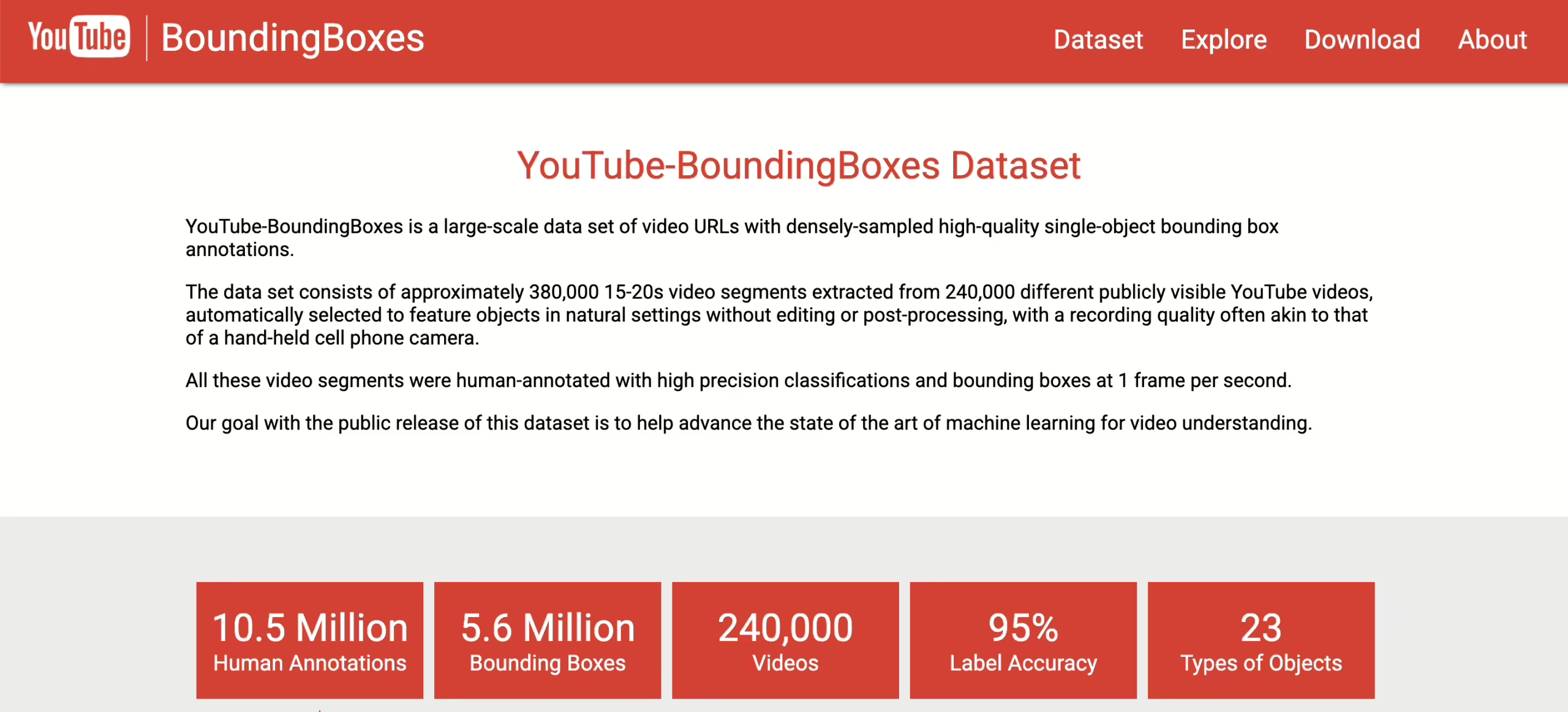

1. YouTube-BoundingBoxes

- Format: Bounding boxes on video frames

- Volume: 5.6M objects, 380K+ video segments

- Access: Free via Google

- Task Fit: Video object detection, tracking

Think static images are hard? Try 5.6M labeled objects yanked from real YouTube clips — motion blur, jump cuts, occlusion, the works. It’s a chaos lab for models that claim “video-ready.” If your detector holds track across these frames, it’s genuinely ready for the wild.

2. Waymo Open Dataset

- Format: LiDAR + multi-camera (2D/3D bounding boxes)

- Volume: 1,950 segments, 20M+ labeled objects

- Access: Free on Waymo site

- Task Fit: Autonomous driving, 3D detection, sensor fusion

Fleet-grade LiDAR + multi-camera across diverse U.S. cities, day/night, sun/rain — plus 20M+ labeled objects. If KITTI was the practice run, Waymo is the Formula 1 circuit with pit stops and photo finishes. Train here when you want sensor-fusion models that ship, not just demo.

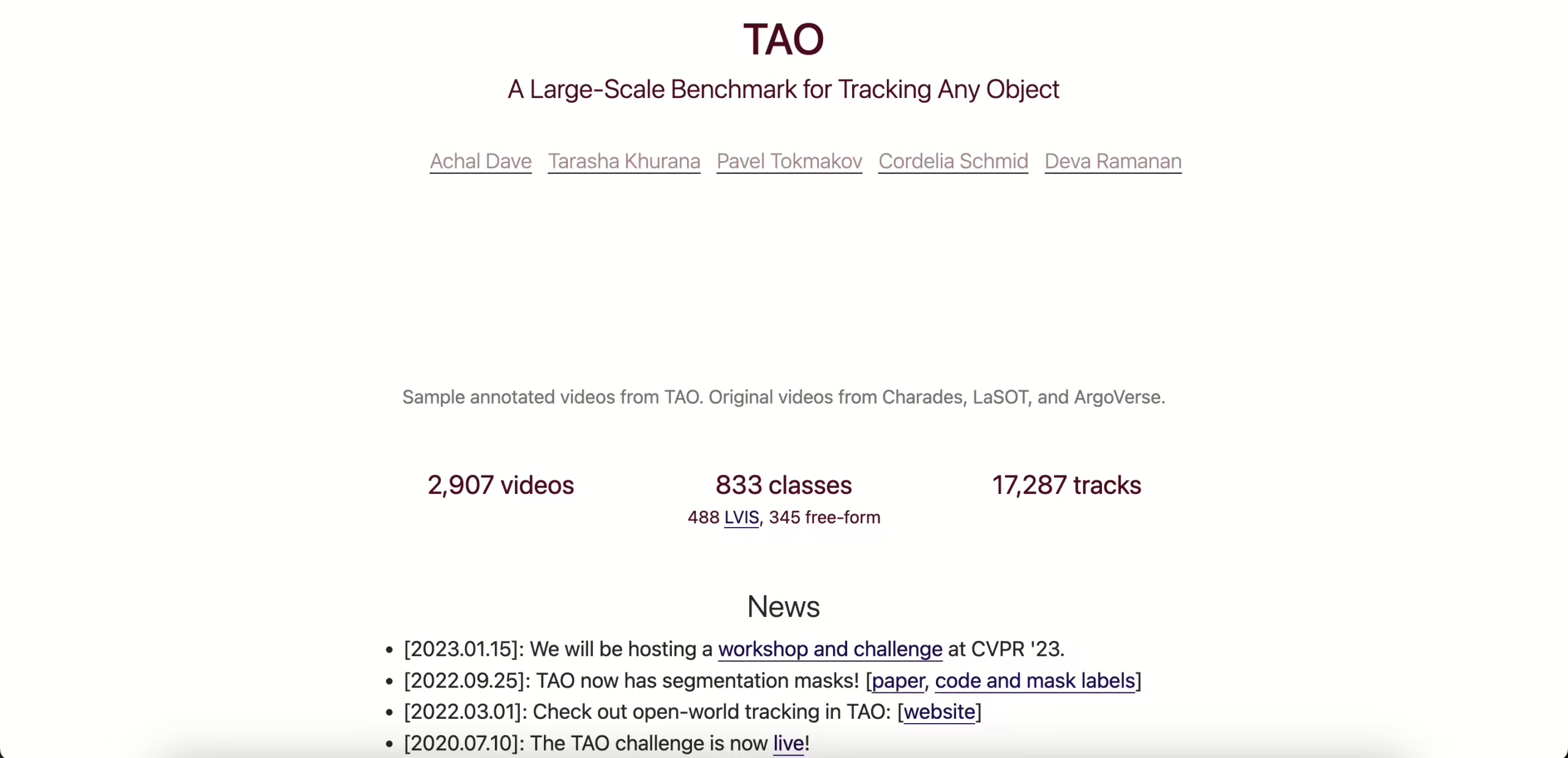

3. TAO—Tracking Any Object

- Format: Bounding boxes in long, high-res videos

- Volume: 2,900 videos, 800+ categories

- Access: Free, official site

- Task Fit: Long-term tracking, rare-object detection

2,900 long videos, 800+ categories, and a brutal long-tail that punishes flaky trackers. TAO isn’t about a one-frame spot — it’s about staying locked when the object shrinks, turns, or leaves and re-enters. If your model drifts, TAO will make it obvious — fast.

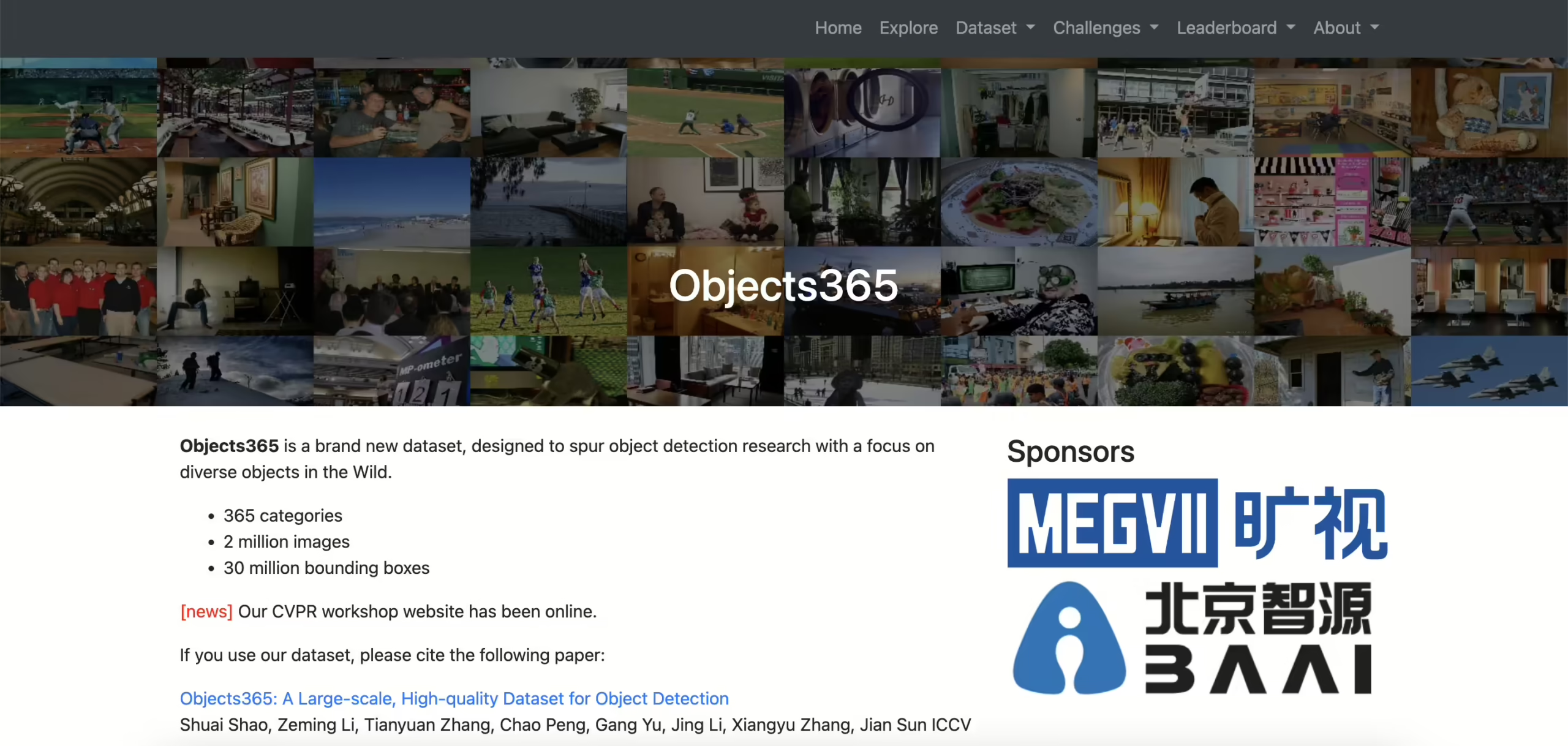

4. Objects365

- Format: Bounding boxes

- Volume: 600K images, 10M+ objects, 365 categories

- Access: Free after request

- Task Fit: Large-scale object detection

Done with COCO’s 80 classes? Scale up to 365 categories, 10M+ boxes, 600K images and watch your recall assumptions shatter. Objects365 is where vocabulary breadth meets real-world clutter — great for open-world and retail-style catalogs. Prepare for longer training and bigger wins.

5. LVIS (Large Vocabulary Instance Segmentation)

- Format: Bounding boxes + segmentation masks

- Volume: 1,200+ categories, 2M masks

- Access: Free, official site

- Task Fit: Long-tail detection and segmentation

The uncommon-object gauntlet: 1,200+ categories, 2M masks, and a ruthless long-tail distribution. If your product fails on “weird but real” items, LVIS will surface it in minutes. Nail LVIS and your model stops fearing edge cases.

6. Open Images V7

- Format: Bounding boxes, masks, attributes, relationships

- Volume: 9M+ images, 16M+ boxes

- Access: Free via Google

- Task Fit: Context-aware detection, large-scale training

9M images, 16M boxes — but the magic is the extra metadata: attributes and relationships. You’re not just labeling “dog”; you’re learning “dog on sofa,” “person holding phone.” Train here when you want detectors that grasp context, not only rectangles.

7. COCO

- Format: Bounding boxes, masks, keypoints, captions

- Volume: 330K images, 1.5M instances, 80 classes

- Access: Free, official site

- Task Fit: General-purpose detection, segmentation

The dataset that refuses to retire. 330K images, 1.5M objects, 80 everyday categories — all captured in clutter, occlusion, and real-world mess. Every new detection model, from YOLO to transformers, gets judged here first. Passing COCO isn’t bragging rights, it’s table stakes. If your detector fails COCO, don’t bother deploying it.

8. nuScenes

- Format: 3D boxes with LiDAR, radar, 360° cameras

- Volume: 1.4M annotated objects, 1,000 driving scenes

- Access: Free via Motional

- Task Fit: 3D detection, sensor fusion, AV research

Think 2D is enough? nuScenes brings the full stack: LiDAR, radar, and six cameras capturing 360° urban scenes with 1.4M labeled objects. It’s tailor-made for AV perception that needs depth and motion, not just snapshots. If you’re serious about 3D detection and sensor fusion, nuScenes is the benchmark that proves your model can survive outside the lab.

9. Argoverse 2

- Format: 2D/3D bounding boxes, trajectories, HD maps

- Volume: 1,000 hours, 6M+ tracked objects

- Access: Free, official site

- Task Fit: Motion forecasting, 3D detection

Detection is good. Prediction is better. Argoverse 2 pairs 6M tracked objects with HD maps and trajectories, making it a benchmark for motion forecasting as well as perception. It forces your model to think about where that car, cyclist, or pedestrian will be seconds from now. For autonomous driving research, it’s the leap from recognition to anticipation.

10. BDD100K

- Format: Bounding boxes, segmentation, lanes, tracking

- Volume: 100K driving videos

- Access: Free via Berkeley

- Task Fit: Autonomous driving, multi-task perception

The Swiss army knife of driving datasets: 100K videos annotated for detection, tracking, segmentation, and lane markings. Instead of piecing together multiple sources, BDD100K gives you an end-to-end playground. It’s perfect for building perception stacks that stay consistent across tasks. If your AV pipeline needs coherence, this is where you start.

11. KITTI

- Format: 2D/3D bounding boxes with LiDAR, stereo

- Volume: 15K images, 200K+ labels

- Access: Free after registration

- Task Fit: AV benchmarks, prototyping, 3D baselines

The original AV proving ground: 15K images with LiDAR, stereo, and 200K+ 3D labels. Smaller than today’s giants, but perfect for fast iteration and rock-solid baselines. Nearly every perception stack cut its teeth here before graduating to Waymo or nuScenes. If your model can’t look good on KITTI, it’s not ready for prime time.

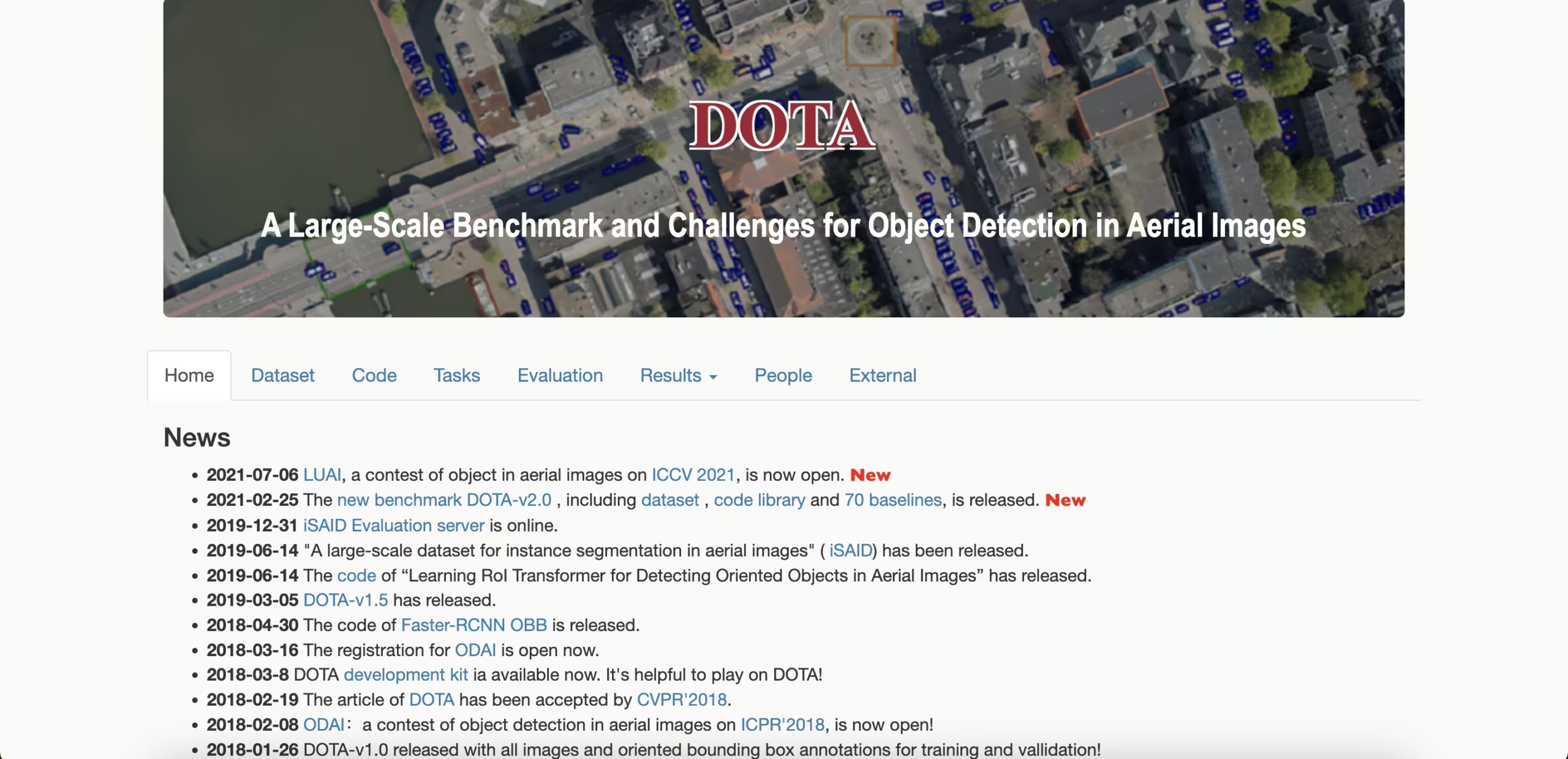

12. DOTA (Aerial)

- Format: Oriented bounding boxes

- Volume: 2,800 aerial images, 1M+ instances

- Access: Free, official site

- Task Fit: Remote sensing, aerial detection

Ships, planes, cars — seen from above with oriented bounding boxes that actually respect rotation. Expect brutal scale variance and tiny targets that vanish on naive pipelines. DOTA is the go-to remote sensing benchmark for defense, logistics, and mapping. Pass DOTA, and your detector stops confusing rooftops for runways.

13. VisDrone

- Format: Drone images with bounding boxes

- Volume: 10K+ images, 250K+ objects

- Access: Free, official site

- Task Fit: Low-altitude detection, UAV edge-AI

Low-altitude reality check: 10K images, 250K+ objects shot from drones with blur, glare, and dense crowds. It’s the dataset for edge-AI on UAVs, where compute is tight and angles are unforgiving. If your model only works from eye level, VisDrone will expose it in seconds. Train here to survive real skies, not just lab lights.

14. CrowdHuman

- Format: Full-body, visible-body, head bounding boxes

- Volume: 15K images, 470K+ human instances

- Access: Free, official site

- Task Fit: Pedestrian detection in crowded scenes

470K labeled people packed into 15K busy street scenes — occlusion is the default, not the exception. It’s a ruthless test for pedestrian detection where NMS, tracking, and association get pushed to their limits. Retail analytics, safety, surveillance — if you care about people in crowds, you need this benchmark. Fail CrowdHuman, and your “city-ready” claim doesn’t fly.

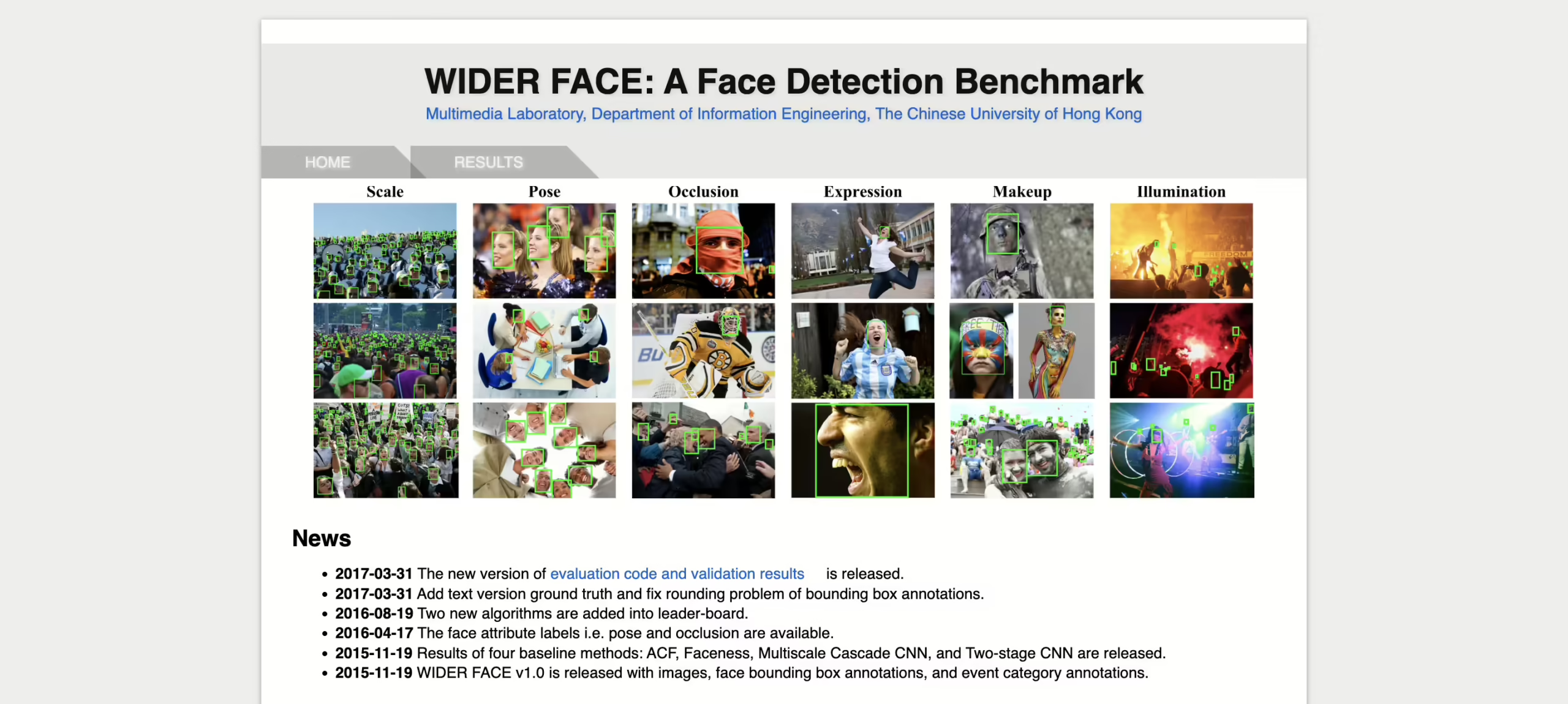

15. WIDER FACE

- Format: Face bounding boxes in the wild

- Volume: 32K images, 393K faces

- Access: Free, official site

- Task Fit: Face detection, small-object detection

The face detection stress test: 393K faces, many tiny, tilted, or occluded behind sunglasses, masks, and crowds. It punishes shortcuts and rewards robust anchors, feature pyramids, and smart augmentations. Clear WIDER FACE and you’ve earned real-world credibility for kiosks, access control, and mobile capture.

16. ADE20K

- Format: Pixel-level segmentation masks

- Volume: 20K training images, 150+ categories

- Access: Free via MIT

- Task Fit: Scene parsing, semantic segmentation

Not just objects — entire scenes. ADE20K delivers pixel-level annotations for 150+ categories, from walls and windows to people and pets. It’s the backbone of the MIT Scene Parsing Challenge and a favorite for training context-aware models. Use it when you want your detector to grasp the whole picture, not just isolated boxes.

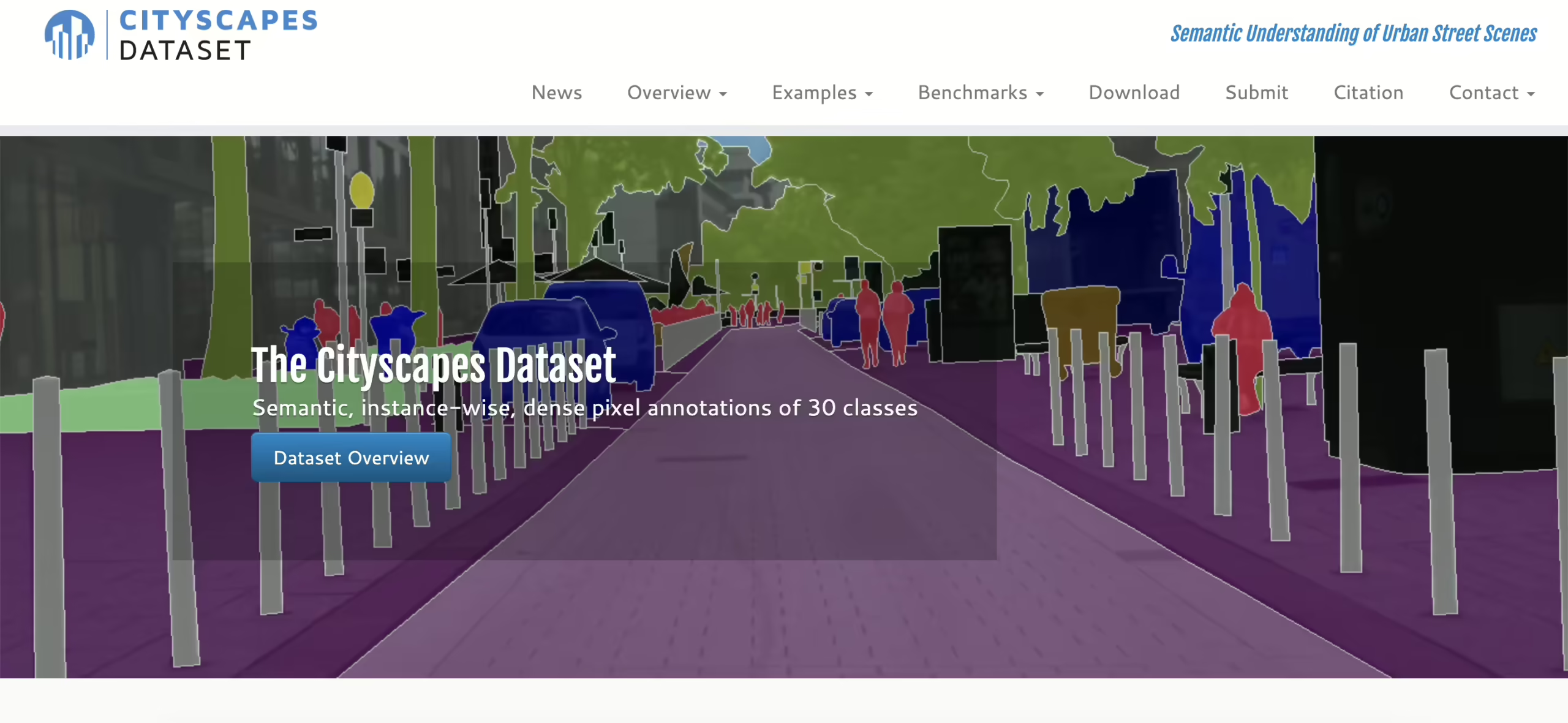

17. Cityscapes

- Format: Pixel-perfect semantic + instance segmentation

- Volume: 5K fine, 20K coarse urban images

- Access: Free after registration

- Task Fit: Street-scene understanding, AV

The urban gold standard. With 5K finely annotated street images (plus 20K coarse), it provides pixel-perfect masks for cars, cyclists, and pedestrians. Every AV perception stack gets tested here, because if you fail Cityscapes, you’ll fail on real roads. It remains the cleanest reference for street-scene understanding.

18. ImageNet (Detection Subset)

- Format: Bounding boxes on ImageNet subset

- Volume: 500K+ objects, 200 categories

- Access: Free via ImageNet

- Task Fit: General detection, pretraining

Everyone knows ImageNet for classification, but its detection subset adds half a million labeled objects across 200 categories. It’s leaner than COCO but perfect for pretraining, transfer learning, or rapid prototyping. Old name, still a workhorse — great for warming up models before bigger datasets.

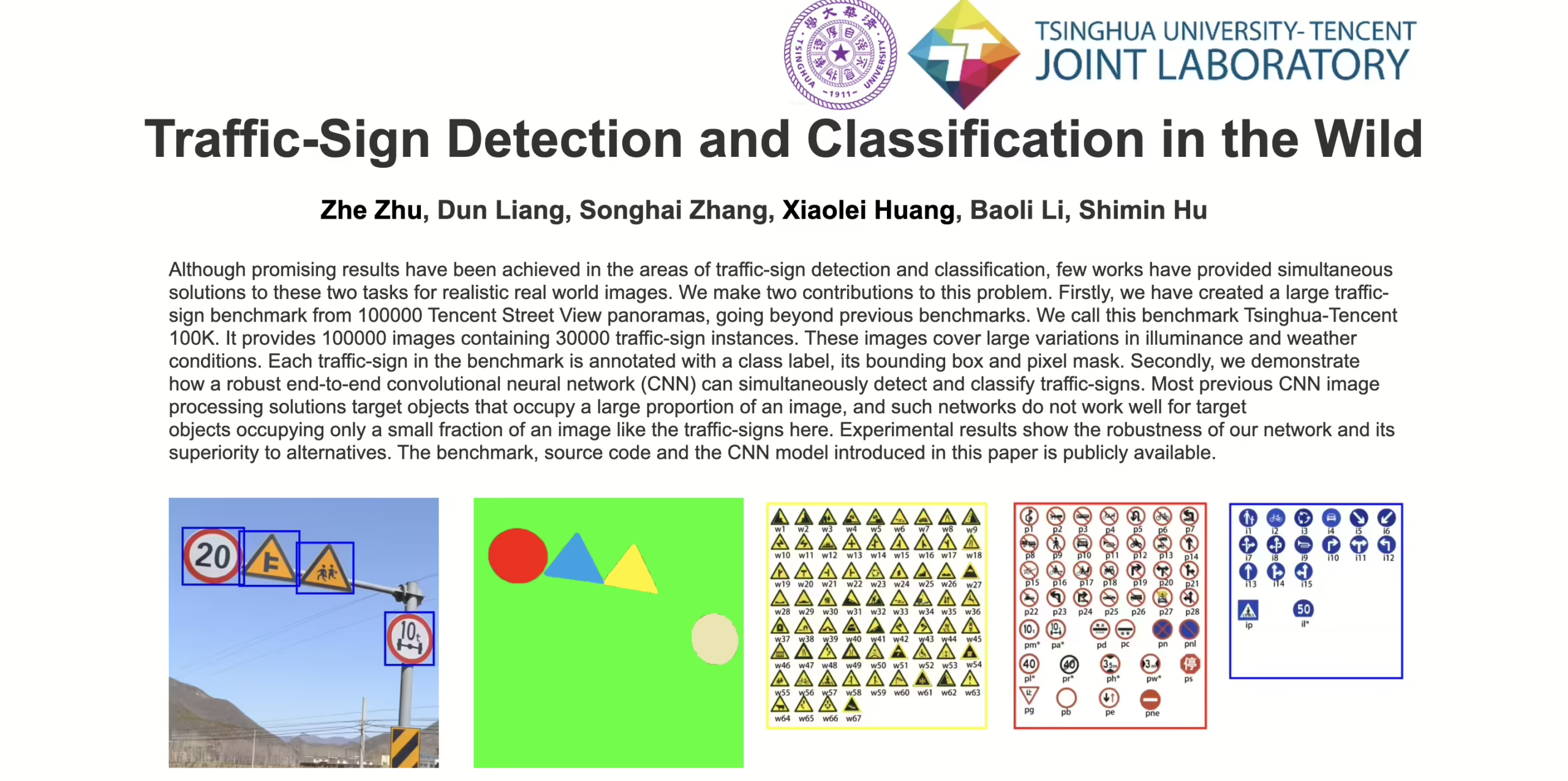

19. TT100K (Traffic Signs)

- Format: Bounding boxes for traffic signs

- Volume: 100K images, 300+ classes

- Access: Free via TT100K

- Task Fit: Traffic sign detection, AV safety

Traffic sign detection is a survival skill for AVs, and TT100K covers it with 100K images and 300+ sign classes. Rain, dusk, glare — it captures all the conditions that cause real-world systems to stumble. If your model can’t handle TT100K, it’s not roadworthy.

20. Pascal VOC

- Format: Bounding boxes + segmentation masks

- Volume: 11K images, 27K objects

- Access: Free via VOC site

- Task Fit: Baselines, teaching, prototyping

The dataset that launched a field. 11K images, 27K annotated objects, clean bounding boxes, and segmentation masks. Too small for production today, but still invaluable for teaching, benchmarks, and sanity checks. Pascal VOC is where modern object detection began — and it’s still the best place to start learning.

Conclusion

No single dataset rules them all. The most competitive pipelines today combine multiple datasets — pretraining on COCO or Open Images, then fine-tuning on domain-specific sets like KITTI or DOTA. That strategy balances scale with specialization and gives your model the best shot at real-world performance.

High-quality data is the lifeblood of computer vision. Choose wisely, mix strategically, and your detectors won’t just work in the lab — they’ll thrive in the wild.