What Is Content Moderation?

Content moderation is the process of reviewing user-generated content (UGC) on online platforms — such as social media, forums, and websites — to determine whether it aligns with the platform’s guidelines, community standards, or legal requirements. This ensures that harmful, offensive, or illegal content is filtered out, helping to maintain a safe, trustworthy, and respectful digital environment.

Importance of Content Moderation in the Digital Age

In today's digital landscape, the large volume of user-generated content has made moderation essential for internet platforms. With billions of users engaging daily, platforms face financial and reputational risks stemming from inappropriate or harmful content. Content moderation ensures a safe user experience, upholds community standards, and shields platforms from liabilities. It serves as a mechanism for overseeing the array of constantly evolving online content.

Types of Content That Should Be Moderated

Content moderation encompasses various types of media, each posing challenges that call for specific moderation approaches.

1. Text

Textual content, including comments, posts, and messages, is the most common form of UGC. Moderation efforts focus on screening out hate speech, offensive language, misinformation, and other harmful content. Textual analysis, often aided by natural language processing (NLP) algorithms, helps identify and manage such content.
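As a rough illustration of the simplest kind of text screening, the sketch below checks a message against a blocklist after undoing a few common character substitutions. It is a rule-based baseline rather than an NLP model, and the `BLOCKED_TERMS` list, the substitution table, and the function names are all assumptions made for this example.

```python
import re

# Hypothetical blocklist; a real deployment would maintain a much larger,
# regularly reviewed list or use a trained classifier instead.
BLOCKED_TERMS = {"badword", "spamlink"}

# Hypothetical substitution table for common look-alike characters.
_SUBSTITUTIONS = str.maketrans({"0": "o", "1": "i", "3": "e", "@": "a", "$": "s"})


def _normalize(text: str) -> str:
    """Lowercase the text and undo a few common character substitutions."""
    return text.lower().translate(_SUBSTITUTIONS)


def find_blocked_terms(text: str) -> set[str]:
    """Return the blocked terms that appear as whole words in the text."""
    words = set(re.findall(r"[a-z]+", _normalize(text)))
    return words & BLOCKED_TERMS


print(find_blocked_terms("That is a B@dw0rd, honestly"))
```

Matching on whole words avoids flagging harmless words that merely contain a blocked term, but it also misses spaced-out or misspelled variants, which is one reason platforms pair such rules with trained models and human review.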

2. Image

When it comes to image moderation, the process includes checking photos that users upload to spot any offensive content – like nudity, violence, or hate symbols. Advanced methods, such as image recognition and machine learning, are used to automate this task. Still, human oversight is necessary for a nuanced understanding.

3. Audio

Audio content, such as voice messages and music, needs moderation to filter out offensive language, copyrighted material, or disturbing sounds. Tools like speech-to-text algorithms and sound analysis help in screening audio content. However, human intervention is often needed for context.

4. Video

Video moderation is complex as it involves analyzing visual content, auditory elements, and textual descriptions to ensure compliance with platform standards. Given the rich, multifaceted nature of video content, this type of media often requires sophisticated AI tools alongside human moderators’ efforts.

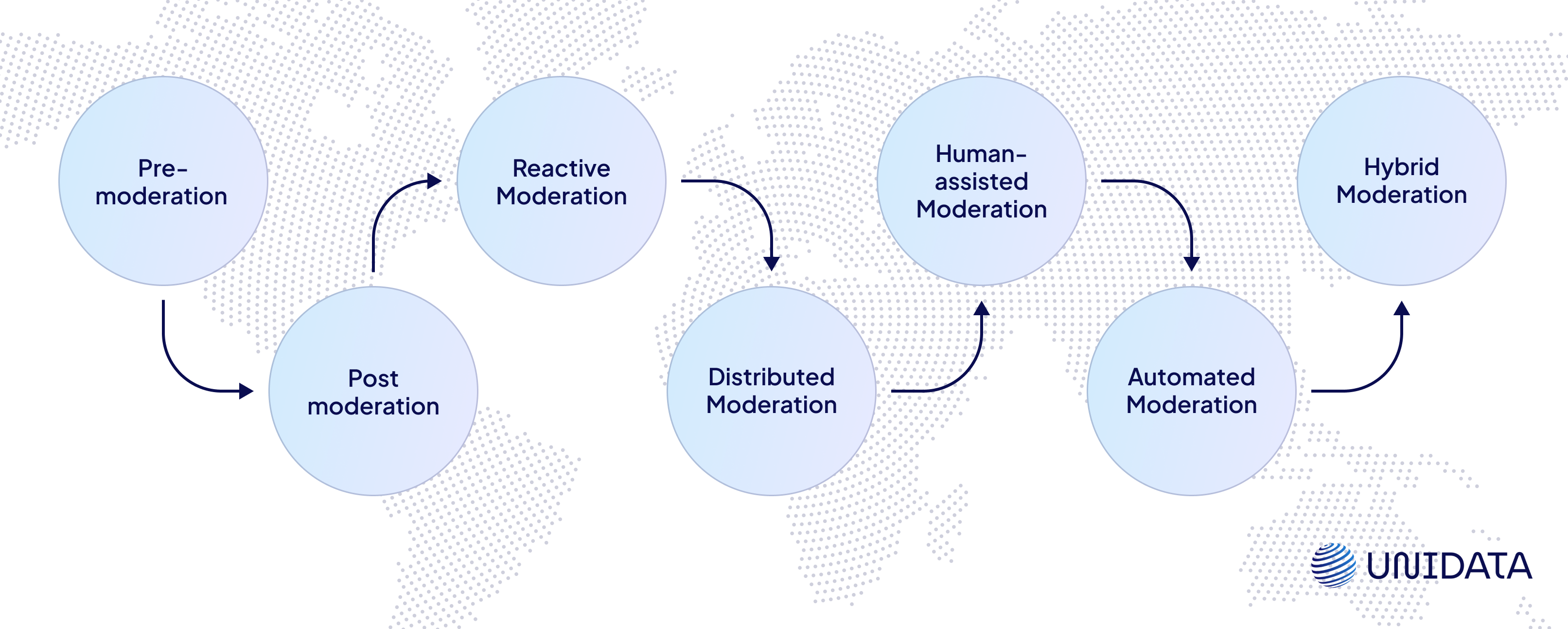

Types of Content Moderation

Different moderation types serve various needs and operational scales, blending automated systems with human judgment.

Pre-moderation

With pre-moderation, content is reviewed and approved before being published on a platform. This approach is commonly used in settings where quality control is especially important – educational platforms, professional forums, or children’s websites. It ensures that only appropriate and safe content is visible to the public, minimizing the risk of exposing users to harmful material. However, pre-moderation can slow down the content publication process and require significant manpower.

Post-moderation

Post-moderation involves publishing content immediately and reviewing it afterward. This method is preferred by platforms that prioritize real-time user interaction, such as Telegram channels, social media, and live-streaming services. Post-moderation allows for dynamic engagement but carries the risk of inappropriate content being temporarily visible.

According to a Pew Research Center study, 66% of American adults feel that social media companies have a responsibility to remove offensive content after it's posted. This method balances immediacy with content control but requires robust mechanisms to address harmful content swiftly once detected.
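The difference between pre-moderation and post-moderation comes down to when content becomes visible relative to review. The following minimal sketch models both workflows with one toy class; the `Platform` class and its method names are hypothetical and not taken from any real platform.

```python
from dataclasses import dataclass, field


@dataclass
class Platform:
    """Toy content store; `pre_moderation` switches between the two workflows."""

    pre_moderation: bool
    visible: list[str] = field(default_factory=list)
    review_queue: list[str] = field(default_factory=list)

    def submit(self, post: str) -> None:
        # Every post is queued for review; only the publication timing differs.
        self.review_queue.append(post)
        if not self.pre_moderation:
            self.visible.append(post)  # post-moderation: live immediately

    def review(self, post: str, approved: bool) -> None:
        self.review_queue.remove(post)
        if approved and self.pre_moderation:
            self.visible.append(post)  # pre-moderation: live only after approval
        elif not approved and not self.pre_moderation:
            self.visible.remove(post)  # post-moderation: taken down after review


forum = Platform(pre_moderation=True)
forum.submit("hello")
print(forum.visible)   # nothing is public until a reviewer approves it

stream = Platform(pre_moderation=False)
stream.submit("spam")
print(stream.visible)  # the post is public while it waits for review
```

In the post-moderation case the window between `submit` and `review` is exactly the period during which inappropriate content can be temporarily visible.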

Reactive Moderation

Reactive moderation depends on user reports to identify problematic content. Platforms like YouTube and Facebook use this method, where the community flags content they feel is offensive or non-compliant with the guidelines. This approach relies on community vigilance and can effectively manage content on large platforms without immediate pre- or post-moderation.

Still, most inappropriate content is detected by automated systems rather than by users. For example, of the 8.1 million videos removed from YouTube during the third quarter of 2023, only 363 thousand (roughly 4.5%) were detected via flags from users.
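One common way to implement reactive moderation is to escalate an item to human review once enough distinct users have reported it. The sketch below shows that idea; the threshold value and the `ReportTracker` class are assumptions for illustration, not a description of how YouTube or Facebook handle flags.

```python
from collections import defaultdict

REPORT_THRESHOLD = 3  # hypothetical: distinct reporters needed to trigger review


class ReportTracker:
    """Counts distinct reporters per content item."""

    def __init__(self, threshold: int = REPORT_THRESHOLD) -> None:
        self.threshold = threshold
        self._reporters: dict[str, set[str]] = defaultdict(set)

    def report(self, content_id: str, user_id: str) -> bool:
        """Record a report; return True once the item needs human review."""
        self._reporters[content_id].add(user_id)  # a set ignores repeat reports
        return len(self._reporters[content_id]) >= self.threshold


tracker = ReportTracker()
for user in ("alice", "alice", "bob", "carol"):
    needs_review = tracker.report("video-1", user)
print(needs_review)
```

Counting distinct reporters rather than raw reports makes it harder for a single user to push content into review by flagging it repeatedly.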

Distributed Moderation

This type of moderation is based on users’ voting on content's appropriateness to influence its visibility or deletion. Reddit is a prime example, where user votes can elevate or bury content. Reddit's system allows users to upvote or downvote posts, with highly downvoted content becoming less visible. While this method decentralizes moderation tasks, it can also result in creating echo chambers or mob censorship in polarized communities.
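A vote-based visibility rule can be sketched in a few lines. The example below uses a plain net score (upvotes minus downvotes) and a hypothetical hiding threshold; it is not Reddit's actual ranking algorithm, which is more elaborate.

```python
HIDE_BELOW = -5  # hypothetical net score at or below which a post is hidden


def net_score(upvotes: int, downvotes: int) -> int:
    return upvotes - downvotes


def is_visible(upvotes: int, downvotes: int, hide_below: int = HIDE_BELOW) -> bool:
    """A post stays visible while its net score is above the threshold."""
    return net_score(upvotes, downvotes) > hide_below


def rank(posts: dict[str, tuple[int, int]]) -> list[str]:
    """Order visible post ids by net score, highest first."""
    shown = {pid: net_score(u, d) for pid, (u, d) in posts.items() if is_visible(u, d)}
    return sorted(shown, key=shown.get, reverse=True)


posts = {"a": (10, 2), "b": (1, 9), "c": (3, 0)}
print(rank(posts))  # "b" is hidden because its net score is -8
```

Because visibility depends only on vote counts, a coordinated group of voters can bury content they dislike, which is the mob-censorship risk noted above.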

Human-assisted Moderation

Human-assisted moderation combines artificial intelligence with human judgment and is common on social media platforms, where complex content decisions are a daily occurrence. Teams of human moderators review flagged content for violations of community guidelines and decide whether it should be removed or allowed to remain on the platform. Websites such as eBay, Amazon, and Etsy also employ human moderators to review product listings and user-generated content, checking that listings comply with the platform's policies on prohibited items, counterfeit goods, and inappropriate content. This approach strives to balance efficiency with nuanced understanding, especially when addressing contextual sensitivities.

Automated Moderation

Automated moderation uses AI algorithms to monitor, identify, and take action on content that violates policies. This approach is mostly used on large-scale platforms. For instance, Instagram uses image recognition and text analysis technologies to scan millions of posts and stories for guideline breaches. This system continuously learns from tagged and user-reported content to enhance accuracy and efficiency.

Hybrid Moderation

This is the most popular approach to content moderation. Hybrid moderation combines several methods to optimize the moderation process. For example, Twitch uses a hybrid model, employing automated tools to flag content for human review while also allowing community reporting. This mixed approach enables Twitch to manage its 15 million daily active users and their interactions.
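A hybrid pipeline of this kind is often described as a routing decision: confident automated removals, human review for ambiguous or reported items, and publication for the rest. The sketch below assumes a classifier that outputs a violation score between 0 and 1; the thresholds and the `route` function are hypothetical and do not describe Twitch's system.

```python
from enum import Enum


class Action(Enum):
    PUBLISH = "publish"
    HUMAN_REVIEW = "human_review"
    REMOVE = "remove"


# Hypothetical thresholds; real values would be tuned per policy and platform.
REVIEW_THRESHOLD = 0.5
REMOVE_THRESHOLD = 0.95


def route(violation_score: float, user_reports: int = 0) -> Action:
    """Route content using a classifier score in [0, 1] plus community reports."""
    if violation_score >= REMOVE_THRESHOLD:
        return Action.REMOVE        # confident automated removal
    if violation_score >= REVIEW_THRESHOLD or user_reports > 0:
        return Action.HUMAN_REVIEW  # ambiguous or reported: ask a human
    return Action.PUBLISH


print(route(0.99), route(0.7), route(0.1, user_reports=2), route(0.1))
```

Raising `REMOVE_THRESHOLD` sends more borderline content to humans, trading moderator workload for fewer wrongful automated removals.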

Industries That Rely on Content Moderation

Content moderation plays an essential role across various industries in ensuring safety, compliance, and user engagement.

Social Media

Popular platforms such as Facebook, Instagram, and Twitter rely heavily on content moderation to manage the volume of user-generated content they receive daily. With billions of users, these platforms combine AI algorithms with human moderators’ oversight to sift through and remove harmful content.

For instance, on Facebook, a combination of “integrity signals” is used to rank each post. “Integrity signals” indicate how likely a piece of content is to violate Meta’s policies and include signals received from users through surveys or through actions they take on Facebook, such as hiding or reporting posts. This approach helped remove over 45.9 million pieces of harmful content from the platform in the second quarter of 2022 alone.

E-commerce

Major online marketplaces like Amazon and eBay employ content moderation practices to ensure that product listings meet legal and ethical standards. This involves monitoring for items such as counterfeit goods, inappropriate product images, or deceptive descriptions. Due to the scale of operations, these platforms often use a blend of automated tools and human supervision to handle the content.

Content moderation in e-commerce brings tangible results. eBay's Product Safety Policy relies on AI and image detection to identify potentially unsafe products: in 2022, these algorithms blocked 4.8 million harmful listings.

Online Forums and Communities

Online forums like Reddit and Quora maintain a safe environment by implementing a combination of community-driven interactions and official moderation mechanisms. These platforms often rely on a mix of pre-moderation, post-moderation, and reactive moderation, with community members also having the power to report inappropriate content.

Reddit is a useful example of how well-rounded moderation ensures compliance with guidelines. On this platform, content can be removed by moderators, by admins, and in response to user reports. According to Reddit’s 2023 transparency report, moderators initiated 84.5% of content removals, and 72% of those moderator removals were the result of proactive Automod removals. Admins performed the remaining 15.5%. Moreover, in the first half of 2023, Reddit received 15,611,260 user reports, and admins took action in response to 9.9% of them.
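To put the Reddit figures in perspective, the short calculation below combines them. It assumes that the moderator share and the Automod share refer to the same set of removals, which the quoted figures suggest but do not state outright.

```python
# Figures quoted above from Reddit's 2023 transparency report.
mod_share = 0.845            # removals initiated by moderators
automod_within_mods = 0.72   # share of moderator removals done by Automod
user_reports = 15_611_260    # reports received in the first half of 2023
actioned_rate = 0.099        # reports that led to admin action

# Assumption: both percentages describe the same population of removals.
automod_share_of_all = mod_share * automod_within_mods
actioned_reports = round(user_reports * actioned_rate)

print(f"Automod share of all removals: {automod_share_of_all:.1%}")
print(f"Reports actioned by admins: about {actioned_reports:,}")
```

Under that assumption, Automod alone accounts for roughly 61% of all removals, and about 1.55 million of the user reports led to admin action.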

Gaming

The gaming industry, which includes platforms such as Twitch and Steam, implements content moderation to oversee streams, user comments, and forum posts to prevent harassment, hate speech, and other negative behaviors. Due to the real-time nature of gaming and streaming, these platforms often use live monitoring and moderation systems to address any issues that may arise.

Gaming platforms use a combination of machine detection, user reporting, review, and enforcement in their content moderation process.

News Outlets

Content moderation on news websites is crucial for managing user comments and discussions to deal with misinformation while fostering respectful dialogue. Major outlets like The New York Times and The Guardian have dedicated moderation teams and mechanisms in place to review user comments before they go live in order to ensure alignment with community standards and guidelines.

While AI mechanisms can ease content moderation, The New York Times still employs a team of moderators who manually review nearly every comment submitted across various articles. This is how a comment makes it to The New York Times website: first, a machine learning system called “Perspective” assigns it a score based on its appropriateness. Next, 15 trained moderators, all with a background in journalism, read the highlighted comments and either approve or deny them for publication. The decision is based on whether they meet The Times’s standards for civility and taste.

Education and Learning Platforms

Online learning platforms like Coursera and Khan Academy employ content moderation practices to uphold the quality and credibility of course discussions and user-generated content. The goal is to create a safe and respectful learning environment for students of all ages.

Learning platforms also employ a hybrid approach to content moderation.

Khan Academy, for instance, uses a mix of automated filters and human moderators. According to their Help Center, Khan Academy's moderation team actively monitors discussion forums and user comments to remove inappropriate content and address violations of their community guidelines.

Challenges in Content Moderation

Content moderation, essential for digital platform integrity, deals with various complex challenges.

Data Scale

One major challenge is the sheer scale of user-generated content (UGC) on major social media platforms such as Facebook and YouTube, with YouTube alone experiencing an upload of over 500 hours of video per minute. To manage this scale, platforms use advanced machine learning algorithms to filter and assess content automatically. However, automated solutions alone are insufficient due to the potential for errors and the need for context-sensitive judgment.

To address this issue, companies are expanding their teams of moderators and integrating AI with human review processes. For example, Facebook employs thousands of content moderators worldwide to collaborate with its AI systems, striking a balance between automated efficiency and nuanced human decision-making.
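A back-of-the-envelope calculation shows why human review alone cannot keep up with this volume. The sketch below starts from the 500 hours per minute figure quoted above; the eight-hour shift and the idea that each video is watched once in real time are simplifying assumptions.

```python
HOURS_UPLOADED_PER_MINUTE = 500  # YouTube figure quoted above
SHIFT_HOURS = 8                  # assumption: one reviewer watches 8 hours a day

hours_per_day = HOURS_UPLOADED_PER_MINUTE * 60 * 24
reviewers_needed = hours_per_day / SHIFT_HOURS

print(f"{hours_per_day:,} hours of video uploaded per day")
print(f"{reviewers_needed:,.0f} reviewers needed to watch it all once")
```

That works out to 720,000 hours of new video per day, or 90,000 full-time reviewers just to watch everything once, before counting comments, appeals, or repeat reviews.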

Cultural and Contextual Nuances

Another critical challenge is that cultural and contextual nuances influence whether content is appropriate in a global setting. Platforms find it difficult to understand and moderate content that may be perceived differently across cultures and regions.

The solution involves assembling a group of moderators who possess insights into cultural norms and language nuances. Moreover, platforms like Twitter and TikTok customize their moderation policies and guidelines to align with local traditions and legal stipulations. They do this by establishing moderation hubs staffed with local experts who offer culturally relevant perspectives on the moderation process.

| Region | Cultural and Contextual Nuances |
|---|---|
| Europe (EU) | GDPR enforcement – strict privacy & data removal rights |
| United States | DMCA, Section 230 – copyright & free speech protection |
| China | State censorship – strong content control (Great Firewall) |
| India | Platform bans – TikTok & data regulations |
| Middle East | Strict moral content filters – regional sensitivities |
| Africa | Emerging frameworks – low transparency, rising social platforms |
| Southeast Asia | Language & culture-specific moderation – local teams matter |

Legal Considerations

Legal challenges in content moderation stem from the diverse legal landscapes across jurisdictions. Platforms need to adhere to a range of laws from copyright issues to hate speech regulations, which can differ significantly from one country to another.

To address this, platforms seek guidance from legal experts and employ geolocation technologies to enforce region-specific moderation policies. For instance, in Europe, companies have enhanced their data privacy measures and procedures in response to the GDPR, showcasing their commitment to upholding regional standards while moderating content.
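Region-specific enforcement can be sketched as a policy lookup keyed by the viewer's location. In the example below, the region codes, category names, and rule sets are placeholders invented for illustration and say nothing about any country's actual law.

```python
# Hypothetical rule sets keyed by placeholder region codes.
# The category names are illustrative and do not reflect any real legislation.
DEFAULT_POLICY = {"spam", "malware"}
REGIONAL_POLICY = {
    "XA": {"category_x"},
    "XB": {"category_y", "category_z"},
}


def blocked_categories(region_code: str) -> set[str]:
    """Global rules always apply; regional rules are added on top."""
    return DEFAULT_POLICY | REGIONAL_POLICY.get(region_code.upper(), set())


def is_allowed(content_categories: set[str], region_code: str) -> bool:
    """Content is allowed if none of its categories is blocked in the region."""
    return content_categories.isdisjoint(blocked_categories(region_code))


print(is_allowed({"category_x"}, "XA"))  # blocked in region XA
print(is_allowed({"category_x"}, "XB"))  # allowed in region XB
```

Layering regional rules on top of a global default keeps universal policies consistent while letting legal teams adjust individual regions independently.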

Balancing Free Speech and Safety

Maintaining a balance between freedom of speech and ensuring a safe online environment poses a significant dilemma. Platforms must clearly distinguish between harmful content and free expression.

Achieving this balance necessitates having transparent policies and an effective appeals process for fair content removal decisions. Social media giants like Meta have set up community guidelines and independent review panels to evaluate moderation cases, striving to uphold this intricate equilibrium.

Ethical Considerations

Ethical issues related to content moderation encompass concerns about privacy, bias and accountability. Protecting user privacy involves implementing stringent data access controls and ensuring that moderation processes comply with global data protection regulations.

To tackle bias, platforms are increasingly emphasizing diversity training for moderators and auditing AI systems for impartiality. To promote transparency and accountability, numerous platforms release transparency reports that outline their moderation actions and the standards applied. This level of openness fosters trust among users and offers a glimpse into the platform’s content moderation initiatives and obstacles.

Technologies Used in Content Moderation

The development of content moderation has been greatly influenced by technological advancements, aimed at efficiently managing the sheer volume of user-generated content (UGC) while addressing the complexities of context, legality, and cultural differences.

AI and Machine Learning Algorithms

AI and ML play important roles in automating content moderation processes, allowing for the analysis of extensive datasets. ML models are trained on large amounts of data to recognize patterns and anomalies like hate speech, explicit material, or spam.

To improve these systems, further continuous learning is implemented by updating AI models with new data to enhance their decision-making abilities. For instance, AI can be trained to adapt to evolving internet slang and new forms of disruptive behavior, ensuring up-to-date moderation standards.

Image and Video Analysis Tools

As online multimedia content continues to grow in popularity, tools for analyzing images and videos have become essential for content moderation. These tools use computer vision techniques to detect inappropriate or copyrighted materials in images and videos. Thanks to the development of convolutional neural networks (CNNs) and other deep neural networks, image recognition tools are reported to reach an accuracy of approximately 95%.

Sophisticated algorithms can recognize explicit content as well as subtle nuances, such as contextually inappropriate imagery or branded content that violates copyright laws. Companies such as YouTube leverage video analysis technologies to assess content at scale, flagging videos that need further human inspection.

Natural Language Processing (NLP)

NLP is used to understand, interpret, and manipulate human language in content moderation. Natural language processing aids in analyzing content for harmful or inappropriate language, conducting sentiment analysis, and identifying nuanced policy violations like hate speech or harassment. NLP tools are trained on vast text datasets to grasp context and meaning accurately, enabling precise moderation of user comments, posts, and messages. For instance, Facebook's moderation system utilizes NLP to sift through and prioritize content for review, while distinguishing between harmless interactions and those that are offensive.

User Behavior Analysis

Understanding user behavior also plays a role in content moderation efforts. Tools for user behavior analysis track and evaluate patterns of activity to identify malicious or suspicious actions, such as spamming, trolling, or coordinated misinformation campaigns. These tools analyze data points such as login frequency, message patterns, and interaction rates to flag users who may be violating community guidelines. By integrating user behavior analysis into their processes, platforms can proactively address harmful activities, thereby enhancing the safety and integrity of the online environment.
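As a minimal sketch of behavior-based flagging, the class below keeps a sliding window of one user's recent messages and flags the user when they post too often or repeat the same text. The `ActivityMonitor` class and its limits are assumptions chosen for illustration; production systems combine many more signals.

```python
from collections import deque


class ActivityMonitor:
    """Tracks one user's recent messages and flags spam-like patterns."""

    def __init__(self, max_messages: int = 5, window_seconds: float = 10.0,
                 max_repeats: int = 3) -> None:
        self.max_messages = max_messages      # hypothetical rate limit
        self.window_seconds = window_seconds  # hypothetical window length
        self.max_repeats = max_repeats        # hypothetical repeat limit
        self._events: deque[tuple[float, str]] = deque()

    def record(self, timestamp: float, message: str) -> bool:
        """Add one message; return True if recent activity looks suspicious."""
        self._events.append((timestamp, message))
        # Drop events that have fallen out of the sliding window.
        while self._events and timestamp - self._events[0][0] > self.window_seconds:
            self._events.popleft()
        repeats = sum(1 for _, text in self._events if text == message)
        return len(self._events) > self.max_messages or repeats >= self.max_repeats


monitor = ActivityMonitor()
flags = [monitor.record(t, "buy now") for t in (0.0, 1.0, 2.0)]
print(flags)  # the third identical message within the window trips the repeat limit
```

A flag from a monitor like this would typically feed into review or rate limiting rather than trigger an immediate ban, since bursts of activity can also be legitimate.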

Best Practices in Content Moderation

Maintaining a welcoming online environment requires implementing effective content moderation practices. Here are some key recommendations based on industry standards and insights.

| Strategy | Description |
|---|---|
| Develop Clear Guidelines and Policies | Establish clear, accessible, and enforceable guidelines that define what is acceptable and what is not on the platform. These guidelines should align with the platform's values, adhere to legal requirements, and be adaptable to changing content trends. |
| Employ a Multilayered Moderation Approach | Combine pre-moderation, post-moderation, and reactive moderation strategies to address different types of content and interaction scenarios. This comprehensive approach helps effectively manage user-generated content. |
| Leverage Advanced Technologies | Integrate AI and ML for content screening to handle large volumes efficiently. Still, don’t disregard human moderators – they are essential to interpreting context and nuances, ensuring a fair and balanced moderation process. |
| Promote Transparency and Communication | Transparent moderation processes and decisions foster trust among users. Provide explanations for content removal or account actions along with an opportunity for users to challenge moderation decisions through an appeal process. |
| Monitor and Adapt to Legislative Changes | Stay informed about the legal landscape related to digital content and privacy. Adapt moderation practices to comply with laws like GDPR, DMCA, and others that affect content and data management. |
| Foster Community Engagement | Encourage community participation in the moderation process by offering reporting tools and clear community guidelines. An involved community can serve as a frontline defense against inappropriate content. |
| Use Data Analytics for Insights | Examine moderation data to uncover patterns in content, user interactions, and effectiveness of moderation efforts. This data-driven approach aids in refining moderation tactics and policies. |
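The data analytics practice in the table can be illustrated with one simple metric: the share of removals in each policy category that are later overturned on appeal. The log below is made-up sample data, and the `overturn_rates` function is a hypothetical helper written for this example.

```python
from collections import Counter

# Hypothetical moderation log: (policy category, overturned on appeal?)
decisions = [
    ("spam", False), ("spam", False), ("spam", True),
    ("harassment", True), ("harassment", True), ("harassment", False),
    ("nudity", False),
]


def overturn_rates(log: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of removals per category that were reversed on appeal."""
    totals = Counter(category for category, _ in log)
    overturned = Counter(category for category, was_overturned in log if was_overturned)
    return {category: overturned[category] / totals[category] for category in totals}


for category, rate in overturn_rates(decisions).items():
    print(f"{category}: {rate:.0%} of removals overturned")
```

A category with a persistently high overturn rate suggests that its guideline is unclear or that the automated detection for it needs retraining.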

Future of Content Moderation

The future of content moderation is shaped by the continuous evolution of online platforms and the complexities of managing diverse, global user-generated content (UGC).

Advancements in Technology

Progress in AI and machine learning technologies is expected to reshape content oversight. We can anticipate algorithms that better grasp context, sarcasm, and cultural subtleties, thereby reducing errors in judgment. For example, advancements in deep learning may enable systems to analyze content with near-human understanding, enhancing the accuracy of automated moderation. Moreover, as augmented reality (AR) and virtual reality (VR) become more integrated into content, platforms will require new methods of oversight to handle interactive and immersive experiences too.

Regulatory Changes

Changes in legislation worldwide will continue to shape how content moderation is carried out. Laws such as the European Union's Digital Services Act and proposed revisions to Section 230 of the Communications Decency Act in the United States demonstrate governments' efforts to hold platforms accountable for managing content. Platforms will need to navigate between meeting regulatory standards and upholding freedom of expression.

The European Commission's Digital Services Act (DSA), proposed in December 2020, outlines new rules for digital platforms operating in the EU, focusing on a more accountable and transparent content moderation process. The DSA aims to standardize content moderation practices across EU member states, with significant implications for global tech companies.

The Growing Role of Community in Self-Moderation

There's a shift towards empowering communities to play a larger role in content moderation. Platforms such as Wikipedia and Reddit have long shown the effectiveness of community-led moderation. This trend is expected to grow, with platforms trusting users with tools and authority to report, assess, and oversee content. This method not only benefits from the judgment and diversity of the community but also nurtures a feeling of ownership and responsibility among users.

Conclusion

Content moderation is an essential aspect of maintaining the integrity and safety of online platforms. As digital spaces evolve, so will the strategies and technologies for managing user-generated content. By grasping the current landscape and anticipating future trends, platforms can establish a safer, more inclusive, and engaging online environment for all users.