SWE-Bench Coding Tasks Dataset

SWE-Bench Coding Tasks Dataset extends the original SWE-Bench benchmark with broader programming-language coverage, golden and test patches, and real-world coding tasks such as bug fixing, code completion, and automated code review. It is intended for evaluating coding agents, language models, and developer tools, and it ships with verified benchmark scores and multi-language test sets.

- Files: 8,712

- Programming languages: 6

- Programming languages

- Machine Learning

- Automated Code Review

- Bug Fixing


Dataset Info

| Characteristic | Data |

| Description | An extended benchmark of real-world software engineering tasks with enhanced artifacts and broader language coverage |

| Data types | Text |

| Tasks | Bug fixing, code completion, pull request generation, automated code review |

| Total number of files | 8,712 |

| Total number of people | 30 |

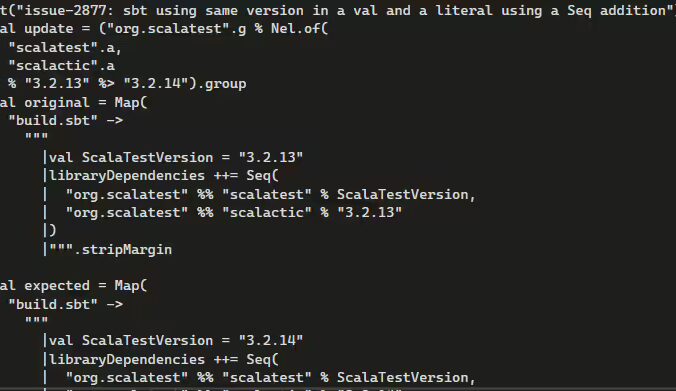

| Labeling | Annotated with golden patches, test patches, post-patch reference states, and metadata stored in parquet files (e.g., repository name, issue/PR identifier, diffs, test results) |

| Programming languages | C#, Go, PHP, Rust, Kotlin, Ruby |
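The labeling row lists golden patches, test patches, and test results. Assuming this dataset follows the original SWE-bench evaluation convention (run the tests with only the test patch applied, then again with the golden patch added), the sketch below shows how those results could be turned into FAIL_TO_PASS and PASS_TO_PASS test lists and a resolved rate. The `TaskRun` type, the function names, and the dictionary layout are hypothetical illustrations, not the dataset's actual Parquet schema.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class TaskRun:
    """Hypothetical per-task test outcomes: test name -> passed?"""
    before: Dict[str, bool]  # test patch applied, no fix yet
    after: Dict[str, bool]   # test patch + golden patch applied


def classify_tests(run: TaskRun) -> Dict[str, List[str]]:
    """Derive SWE-bench-style FAIL_TO_PASS and PASS_TO_PASS test lists.

    A test missing from `before` (e.g. newly added by the test patch) counts
    as failing. Tests that fail after the golden patch go into neither list.
    """
    fail_to_pass, pass_to_pass = [], []
    for test, passed_after in sorted(run.after.items()):
        if not passed_after:
            continue
        if run.before.get(test, False):
            pass_to_pass.append(test)
        else:
            fail_to_pass.append(test)
    return {"FAIL_TO_PASS": fail_to_pass, "PASS_TO_PASS": pass_to_pass}


def is_resolved(expected: Dict[str, List[str]], candidate_after: Dict[str, bool]) -> bool:
    """A candidate patch resolves the task if every expected test passes with it applied."""
    required = expected["FAIL_TO_PASS"] + expected["PASS_TO_PASS"]
    return all(candidate_after.get(test, False) for test in required)


def resolved_rate(outcomes: List[bool]) -> float:
    """Percentage of tasks resolved (0.0 for an empty list)."""
    return 100.0 * sum(outcomes) / len(outcomes) if outcomes else 0.0


if __name__ == "__main__":
    run = TaskRun(
        before={"test_a": False, "test_b": True, "test_c": True},
        after={"test_a": True, "test_b": True, "test_c": False},
    )
    expected = classify_tests(run)
    print(expected)  # {'FAIL_TO_PASS': ['test_a'], 'PASS_TO_PASS': ['test_b']}
    print(is_resolved(expected, {"test_a": True, "test_b": True}))  # True
```

Treating a missing "before" result as a failure mirrors the common case where the test patch introduces the very test that exercises the fix.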

Technical Characteristics

| Characteristic | Data |

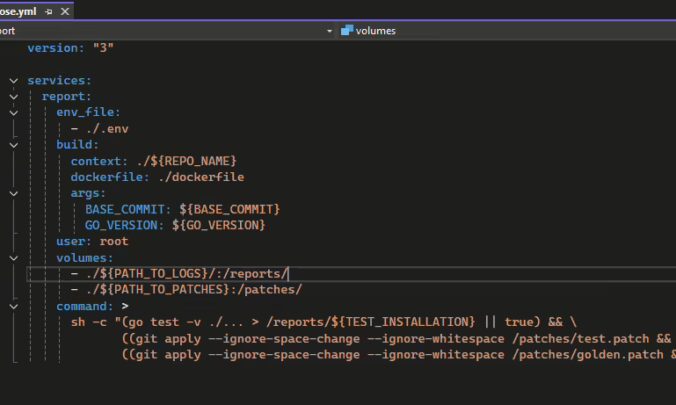

| Files Extensions | parquet (metadata), .patch (golden/test patches), .txt/.xml (reference outputs), .yml (docker-compose), Dockerfile, Makefile, .env |

| Models | Compatible with original Multi-SWE-Bench execution tools and models designed for code understanding and generation |

| File Size | 8.85 GB |
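Golden and test patches ship as .patch files. One quick sanity check on a task is to list which files each patch touches, for example to confirm that a test patch only modifies test files. The sketch below assumes git-style unified diffs with `diff --git a/... b/...` headers and paths without spaces. The test-path heuristic in the hypothetical `looks_like_test` helper is an assumption based on common conventions in the six listed languages, not something the dataset defines.

```python
import re
from typing import List, Tuple

# Matches git-style headers such as "diff --git a/src/lib.rs b/src/lib.rs".
# Assumes paths contain no spaces (quoted paths are not handled).
DIFF_HEADER = re.compile(r"^diff --git a/(\S+) b/(\S+)$")

# Hypothetical heuristic: filename and directory hints for tests in C#, Go,
# PHP, Rust, Kotlin, and Ruby projects. Not defined by the dataset itself.
TEST_SUFFIXES = ("_test.go", "_test.rb", "_spec.rb", "Test.php", "Test.cs", "Tests.cs", "Test.kt")
TEST_DIRS = ("test/", "tests/", "spec/")


def touched_files(patch_text: str) -> List[str]:
    """Return the post-change path of every file a git-style patch modifies."""
    paths = []
    for line in patch_text.splitlines():
        match = DIFF_HEADER.match(line)
        if match:
            paths.append(match.group(2))
    return paths


def looks_like_test(path: str) -> bool:
    """Heuristically decide whether a path belongs to a test file."""
    if path.endswith(TEST_SUFFIXES):
        return True
    # Match test directories at the repository root or anywhere below it.
    return path.startswith(TEST_DIRS) or any("/" + d in path for d in TEST_DIRS)


def split_by_role(paths: List[str]) -> Tuple[List[str], List[str]]:
    """Split paths into (source_files, test_files)."""
    tests = [p for p in paths if looks_like_test(p)]
    sources = [p for p in paths if not looks_like_test(p)]
    return sources, tests


if __name__ == "__main__":
    sample = (
        "diff --git a/pkg/parser.go b/pkg/parser.go\n"
        "--- a/pkg/parser.go\n"
        "+++ b/pkg/parser.go\n"
        "diff --git a/pkg/parser_test.go b/pkg/parser_test.go\n"
    )
    print(split_by_role(touched_files(sample)))
    # prints (['pkg/parser.go'], ['pkg/parser_test.go'])
```

This uses only the Python standard library; renames, quoted paths, and binary hunks would need a full diff parser.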

