Task:

The client needed annotated data to train a sentiment analysis model. Each text snippet had to be classified by emotional tone (positive, negative, or neutral), taking informal language, sarcasm, and context into account.

Key challenges included:

- Subtle sentiment cues: Sentiment was often implied rather than explicit, especially in short-form content like tweets or support chats.

- Ambiguity and subjectivity: Many texts were borderline in sentiment, requiring annotators to apply consistent interpretation rules.

- Domain variation: The dataset spanned multiple domains (e.g., e-commerce, tech support, entertainment), each with its own tone, jargon, and sentiment indicators.

Solution:

01. Preparation and guidelines

- Created domain-specific sentiment annotation guidelines with real-world examples

- Defined detailed rules for handling sarcasm, negation, and mixed signals

- Provided initial batches with expert-reviewed annotations as reference sets

- Conducted remote training sessions with interactive exercises and Q&A discussions

-

- 02

-

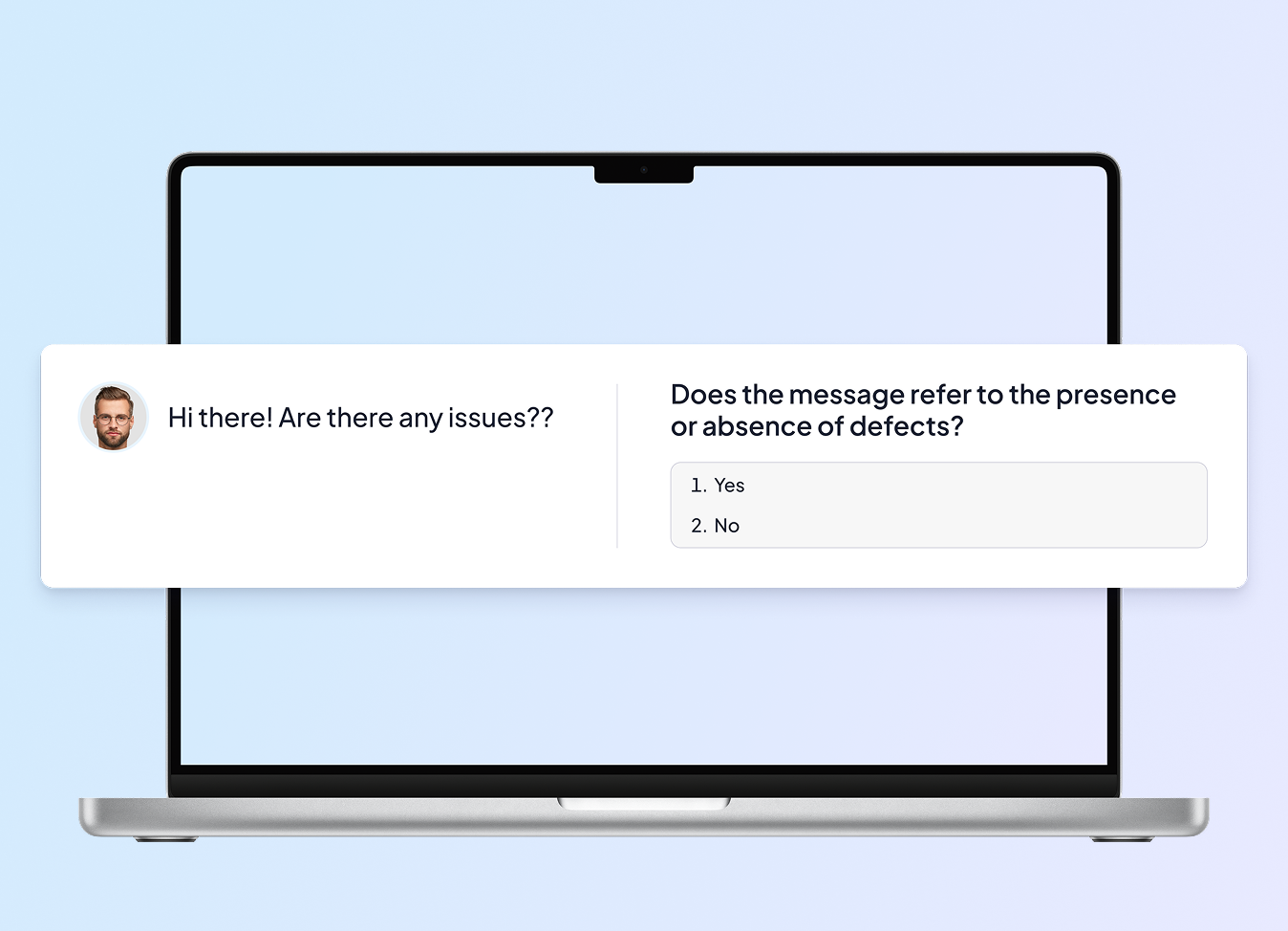

Annotation process

- Annotators labeled text samples using a structured 3-class system (positive, negative, neutral)

- Borderline or uncertain cases were flagged for team review

- Domain shifts were handled by tagging each sample with context metadata for future fine-tuning
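The annotation record described above can be sketched as a small data structure. This is a minimal illustration only: the case study does not describe the actual schema or tooling, so the names `Sentiment`, `AnnotatedSample`, `needs_review`, and `review_queue`, as well as the example texts, are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Sentiment(Enum):
    """The three classes used in the labeling scheme."""
    POSITIVE = "positive"
    NEGATIVE = "negative"
    NEUTRAL = "neutral"


@dataclass
class AnnotatedSample:
    """One labeled text sample (hypothetical schema, for illustration)."""
    text: str
    label: Sentiment
    domain: str                  # context metadata, e.g. "e-commerce"
    annotator_id: str
    needs_review: bool = False   # set for borderline or uncertain cases
    note: str = ""               # optional reason for the flag


def review_queue(samples):
    """Return the samples that annotators flagged for team review."""
    return [s for s in samples if s.needs_review]


# Made-up example data, not taken from the client dataset.
samples = [
    AnnotatedSample("Arrived a day early, works great.",
                    Sentiment.POSITIVE, "e-commerce", "a1"),
    AnnotatedSample("Oh great, another update that breaks login.",
                    Sentiment.NEGATIVE, "tech support", "a2",
                    needs_review=True, note="possible sarcasm"),
]

flagged = review_queue(samples)
print([s.text for s in flagged])
```

Keeping the domain on every record means samples can later be filtered or re-weighted per domain without re-annotating them.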

03. Quality control

- Expert validators audited random samples every week

- Low-agreement cases went through a double-review process

- Annotators received regular feedback based on error patterns and validation reports
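The audit and double-review steps above could be wired together roughly as follows. This is a sketch under stated assumptions: the case study does not say how "low agreement" was defined or how audit samples were drawn, so the threshold, the function names, and the example labels are all hypothetical.

```python
import random
from collections import Counter


def agreement_ratio(labels):
    """Share of annotators who chose the most common label for one sample."""
    most_common_count = Counter(labels).most_common(1)[0][1]
    return most_common_count / len(labels)


def route_for_double_review(labels_by_sample, threshold=1.0):
    """Return ids of samples whose agreement ratio falls below the threshold.

    The default threshold of 1.0 (an assumption) sends every sample with
    any disagreement to a second review.
    """
    return sorted(
        sample_id
        for sample_id, labels in labels_by_sample.items()
        if agreement_ratio(labels) < threshold
    )


def draw_audit_sample(sample_ids, k, seed=None):
    """Draw a random audit sample of up to k ids, without replacement."""
    rng = random.Random(seed)
    ids = sorted(sample_ids)
    return rng.sample(ids, min(k, len(ids)))


# Made-up labels from two annotators per sample.
labels_by_sample = {
    "s1": ["positive", "positive"],
    "s2": ["negative", "neutral"],
    "s3": ["neutral", "neutral"],
}

double_review = route_for_double_review(labels_by_sample)
audit = draw_audit_sample(labels_by_sample, k=2, seed=0)
print(double_review)
```

Passing a fixed `seed` makes an audit draw reproducible, which helps when a validation report needs to cite exactly which samples were checked.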

Results:

- Accurately annotated 12,000 text samples with sentiment polarity

- Achieved inter-annotator agreement of over 92% on final batches

- Developed scalable sentiment labeling workflows adaptable to new domains

- Enabled the client to improve their model’s performance on noisy, real-world text data
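The case study does not state how inter-annotator agreement was measured. One common approach, sketched here as an assumption, is raw percent agreement between two annotators, optionally supplemented by Cohen's kappa, which corrects for agreement expected by chance. The function names and the example labels are illustrative only.

```python
from collections import Counter


def percent_agreement(labels_a, labels_b):
    """Fraction of samples on which two annotators chose the same label."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and of equal length")
    matches = sum(a == b for a, b in zip(labels_a, labels_b))
    return matches / len(labels_a)


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(labels_a)
    observed = percent_agreement(labels_a, labels_b)
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    # Chance agreement: probability both annotators pick the same class
    # if each labels independently according to their own class frequencies.
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


# Made-up labels, not taken from the client dataset.
annotator_a = ["positive", "negative", "neutral", "positive"]
annotator_b = ["positive", "negative", "positive", "positive"]

agreement = percent_agreement(annotator_a, annotator_b)
kappa = cohens_kappa(annotator_a, annotator_b)
print(f"agreement={agreement:.2f}, kappa={kappa:.2f}")
```

Percent agreement is easy to communicate, but it can look high when one class dominates; reporting kappa alongside it gives a more conservative view.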