Ever wondered how Siri answers your questions? Or how Gmail filters out spam? Natural language processing (NLP) makes this possible. It even helps businesses analyze customer reviews quickly.

In this guide, we'll explore NLP’s history and key concepts, how it’s used today, and where it’s heading. Whether you're new to NLP or already experienced, you'll find useful insights here.

1. Introduction

Language is how we connect. It shapes our thoughts, our culture, and the way we understand each other. But it’s not always simple. The same word can mean different things depending on tone, context, or who’s speaking—just try making sense of Gen Z slang if you’re not in on it.

That’s where Natural Language Processing, or NLP, steps in. NLP blends computer science, linguistics, and AI to help machines make sense of human language. It’s what powers chatbots, translates text, analyzes reviews, and so much more.

In this guide, we’ll explore how it all works—from the basics like tokenization and part-of-speech tagging to advanced models like BERT and GPT. Whether you're new to the field or brushing up on the latest trends, consider this your go-to introduction to the world of language and machines.

2. The Evolution of NLP

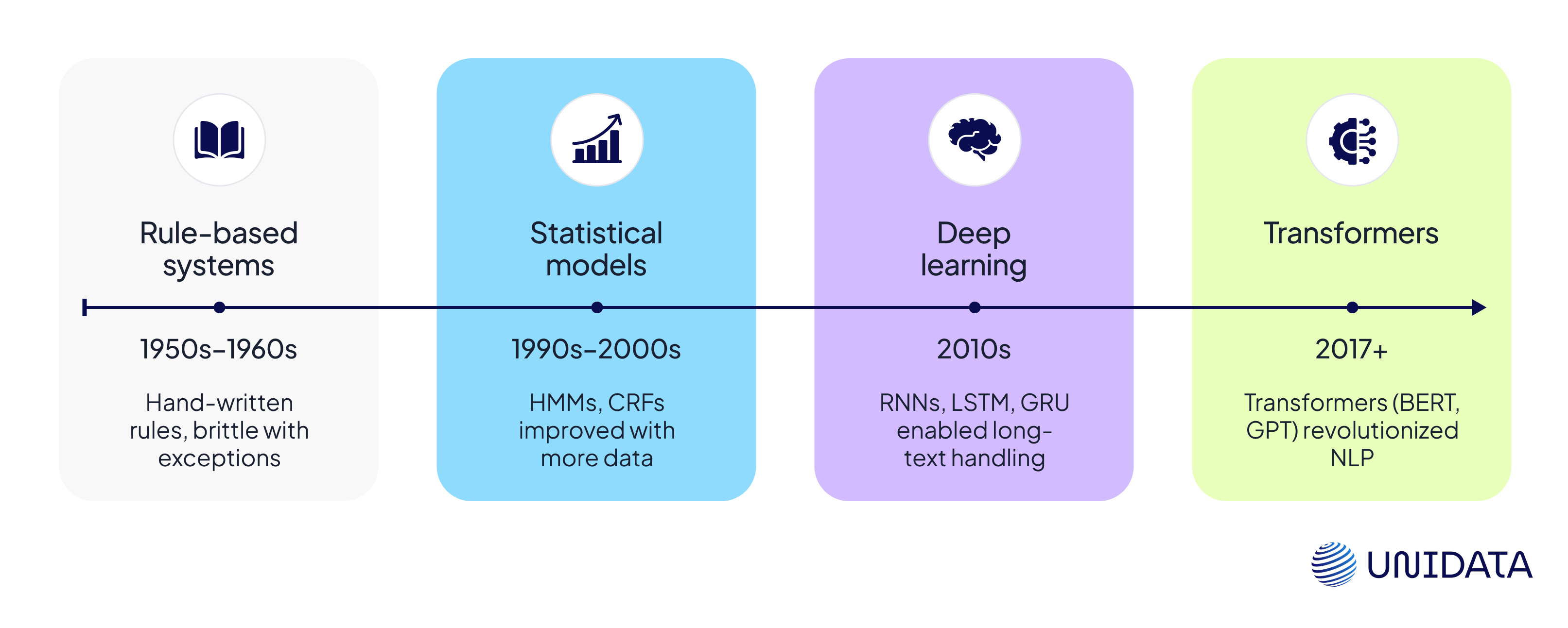

NLP has been around for a while. Its roots go back to the 1950s and 1960s, when Alan Turing asked whether a machine could carry on a convincing conversation and Noam Chomsky’s work on formal grammars gave researchers a precise way to describe language. Early systems were rule-based: they used hand-written instructions to process text. But because language is full of exceptions, these systems often broke down.

From the late 1980s onward, statistical methods took over. Models like Hidden Markov Models and Conditional Random Fields became popular. They helped with tasks like tagging parts of speech or finding names in text, and as more data became available, these models improved.

A major breakthrough came with deep learning. Neural networks—especially RNNs with LSTM and GRU units—made it easier to work with longer texts. But the real leap forward was the transformer model. Introduced in 2017, it changed everything. Models like BERT and GPT pushed NLP to a whole new level.

Today’s models can understand many languages, write text, answer questions, and more. And the better we understand how NLP got here, the better we can build what comes next.

3. What Is Natural Language Processing (NLP) Used For?

NLP is already behind many things you use every day—even if you don’t realize it. From smart replies to real-time translations, here’s how NLP is quietly powering the tools that make life a little easier.

3.1 Understanding Emotions (Sentiment Analysis)

Ever left a review or tweeted about a product? NLP reads those emotions. It helps companies figure out if people are happy, annoyed, or just plain confused—at scale. Whether it’s a viral tweet or a customer complaint, NLP helps brands stay in tune with what people are feeling.

3.2 Finding Important Details (Named Entity Recognition)

Need to pull out names, dates, or places from a wall of text? NLP’s got it covered. Named Entity Recognition, or NER, acts like a smart highlighter—it picks out the most important bits so news sites, legal platforms, or support tools can work faster and smarter.

3.3 Summing Up Long Texts (Text Summarization)

Nobody has time to read everything. NLP-powered summarizers cut through the noise, turning long reports and articles into short, digestible overviews. It’s like having a colleague who reads everything for you—and gives you just the highlights.

3.4 Breaking Language Barriers (Machine Translation)

Whether you’re translating a product review, a message from a customer, or a dinner menu abroad, NLP helps you understand it in your own language—instantly. Tools like Google Translate are powered by NLP models that are getting faster, smoother, and smarter by the day.

3.5 Grammar Detective (Part-of-Speech Tagging and Syntax Analysis)

Words can be tricky. “Run” can mean a sprint, a tear in your sock, or managing a company. NLP figures out which is which by analyzing sentence structure. That’s what helps chatbots understand you properly—and reply in a way that makes sense.

3.6 Discovering Topics (Topic Modeling)

Got hundreds of survey responses or research papers to sort through? NLP can spot themes and group related content together. It’s like giving your documents a brain—so you can see what people are talking about without reading every word.

3.7 Conversational Wizards (Question Answering & Chatbots)

Virtual assistants like Siri or Google Assistant rely on NLP to understand your questions and give answers that feel natural. Whether you’re asking about the weather or checking an order status, NLP makes those conversations possible—and helpful.

3.8 Automated Data Extraction (Information Extraction)

Sometimes, the details matter—dates, addresses, totals, names. Instead of searching manually, NLP can scan documents and pull them out automatically. It’s used in everything from invoices to support emails, helping teams save time and avoid mistakes.

4. Key Concepts in NLP

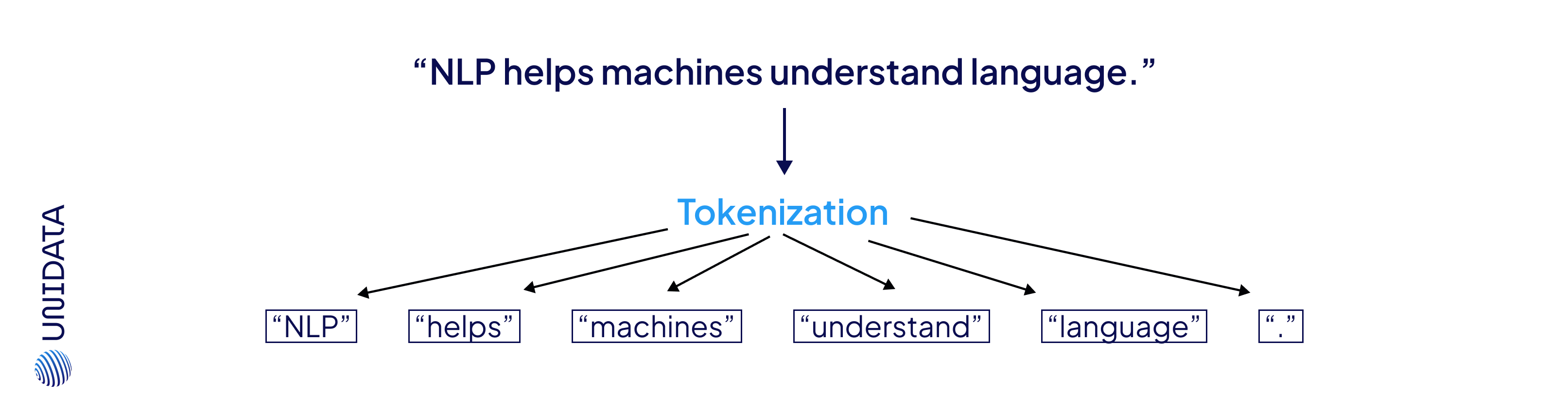

4.1 Tokenization

Every NLP system starts by breaking text into smaller pieces called tokens. Tokens can be whole words, subwords (the approach most modern transformer models use), or single characters, depending on how fine-grained the analysis needs to be. For instance, the contraction “it’s” might be split into “it” and “’s”, giving the model a clearer sense of grammar and structure.

Why does this matter? Because almost every downstream NLP task—translation, classification, summarization—relies on well-tokenized input. If the split is off, your model’s understanding is already starting on shaky ground.
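To make this concrete, here is a minimal word-level tokenizer sketch. It is a toy, not how any particular library works: the regular expression and its list of clitics (’s, ’re, n’t and so on) are our own assumptions, loosely following the Penn Treebank convention of splitting “don’t” into “do” and “n’t”. Production systems usually rely on trained subword tokenizers instead.

```python
import re

# Toy tokenizer (hypothetical rules). Alternatives are tried left to right:
#   1. a word stem directly followed by n't ("do" in "don't")
#   2. the n't clitic itself
#   3. other English clitics: 's, 're, 've, 'll, 'd, 'm
#   4. any other run of letters/digits
#   5. any single punctuation mark
# Both straight (') and curly (’) apostrophes are accepted.
_TOKEN_RE = re.compile(
    r"\w+(?=n['’]t\b)|n['’]t\b|['’](?:s|re|ve|ll|d|m)\b|\w+|[^\w\s]",
    re.IGNORECASE,
)


def tokenize(text):
    """Split text into word, clitic and punctuation tokens."""
    return _TOKEN_RE.findall(text)


print(tokenize("It's late, don't wait!"))
# ['It', "'s", 'late', ',', 'do', "n't", 'wait', '!']
```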

4.2 Lemmatization & Stemming

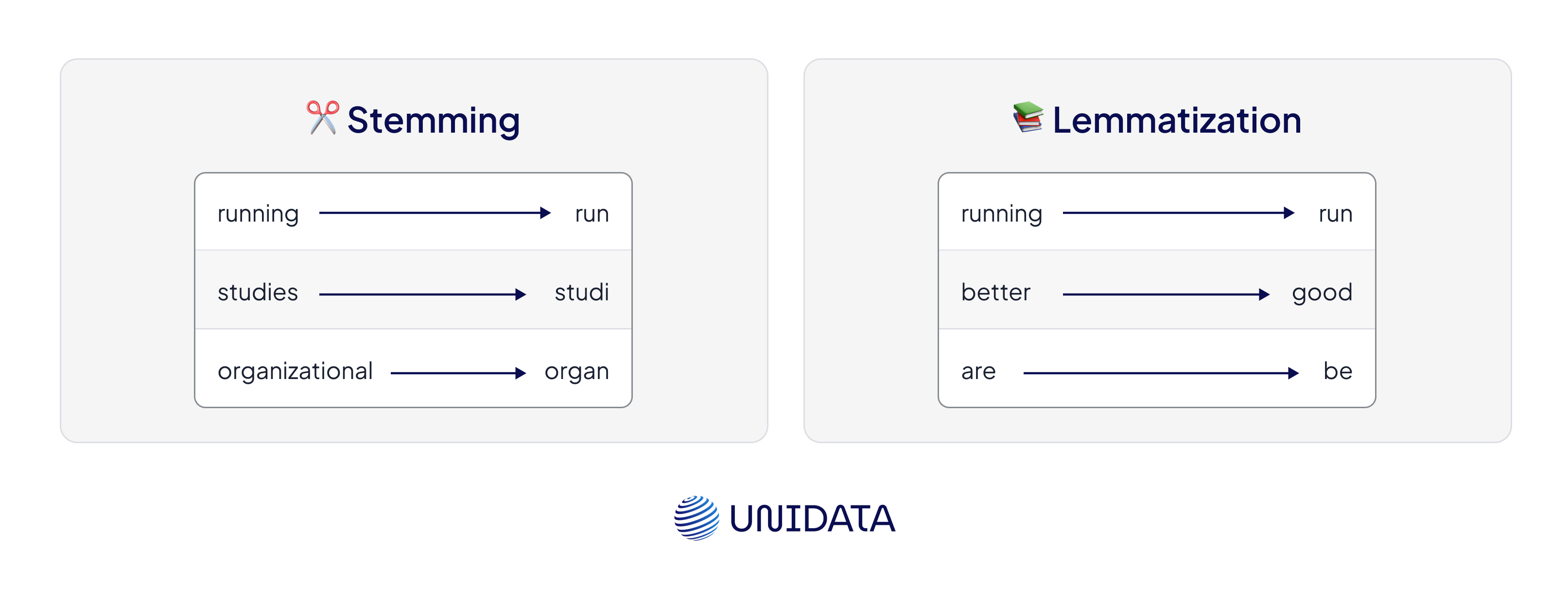

Once text is tokenized, we often want to reduce words to their base forms. This helps the model group variations of the same concept—like “runs,” “running,” and “ran”—under one root idea.

There are two main techniques. Stemming chops off suffixes using simple rules to get a crude root (“walked” becomes “walk”), and the result is not always a real word (the Porter stemmer turns “studies” into “studi”). Lemmatization uses dictionaries and linguistic rules to return a proper base form (“better” becomes “good”, “are” becomes “be”).

This process is essential when you want your model to focus on meaning—not just word forms.
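The difference is easiest to see side by side. The sketch below pairs a deliberately crude suffix-stripping stemmer with a lookup-table lemmatizer; the suffix rules and the tiny dictionary are hypothetical stand-ins for real tools such as the Porter stemmer or a WordNet-based lemmatizer.

```python
# Deliberately crude stemmer: strip the first matching suffix, as long as at
# least three characters remain. These rules are hypothetical and far simpler
# than the Porter stemmer.
SUFFIXES = ("ing", "ed", "s")


def stem(word):
    w = word.lower()
    for suffix in SUFFIXES:
        if w.endswith(suffix) and len(w) - len(suffix) >= 3:
            return w[: -len(suffix)]
    return w


# Lookup-table lemmatizer: a tiny hand-written dictionary standing in for a
# real lexical resource such as WordNet. Unknown words are returned unchanged.
LEMMAS = {
    "runs": "run", "running": "run", "ran": "run",
    "walked": "walk", "better": "good", "are": "be",
}


def lemmatize(word):
    w = word.lower()
    return LEMMAS.get(w, w)


for w in ["walked", "runs", "running", "ran", "better"]:
    print(f"{w:8} stem={stem(w):7} lemma={lemmatize(w)}")
```

The stemmer turns “running” into the non-word “runn” and leaves “ran” untouched, while the lookup maps both to “run”, at the cost of needing a dictionary entry for every irregular form.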

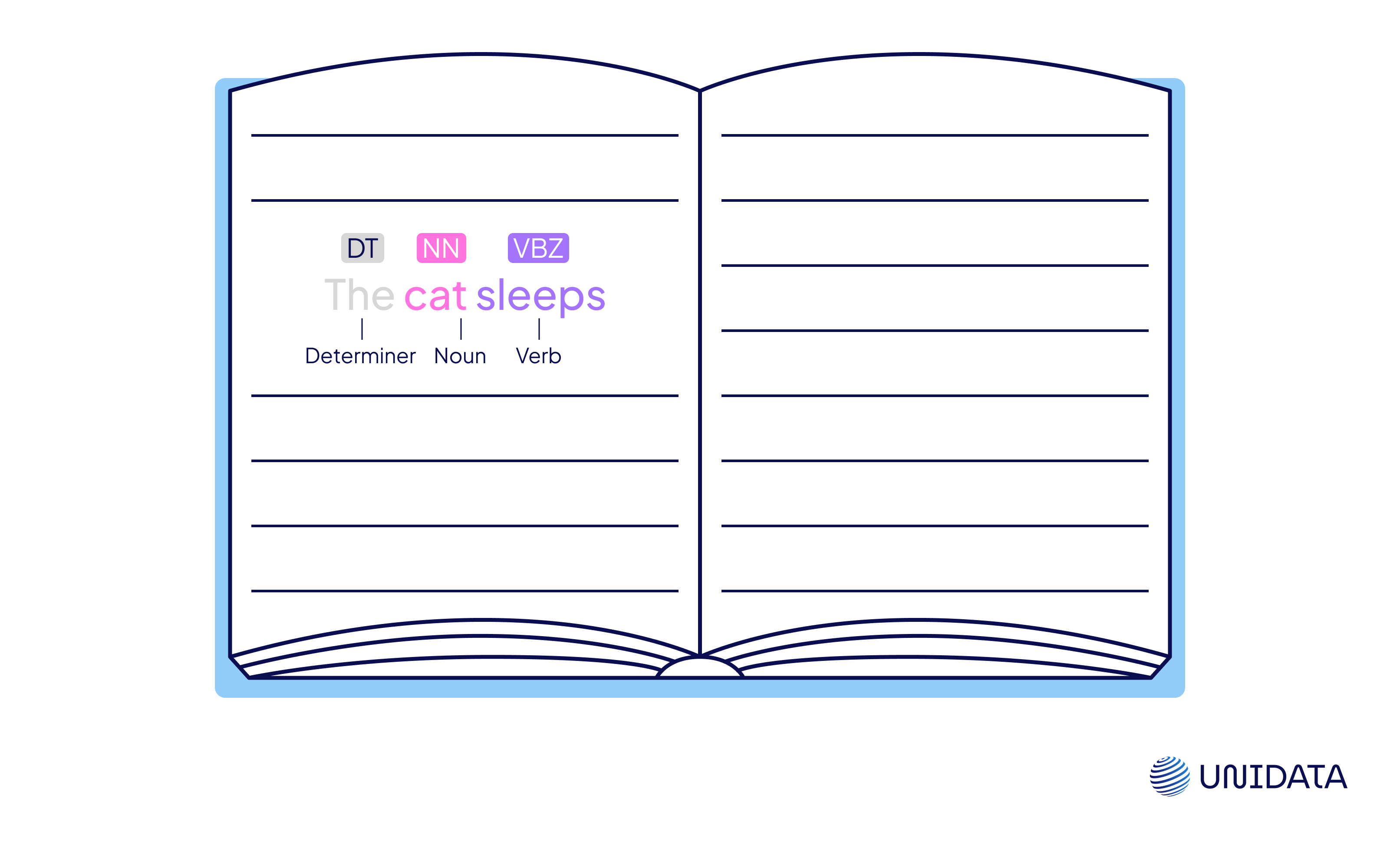

4.3 Part-of-Speech Tagging

Words can wear many hats. Take the word “watch”—it can be something you wear or something you do. Part-of-speech (POS) tagging tells the model whether a token is a noun, verb, adjective, or something else, based on its role in the sentence.

Knowing each word’s grammatical role helps models parse sentence structure, interpret intent, and resolve ambiguity. POS tagging is like assigning each word its part in a linguistic play.
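One classic way to tag is a Hidden Markov Model decoded with the Viterbi algorithm: it picks the most probable tag sequence given how likely each tag is to follow another and how likely each tag is to produce a given word. The sketch below uses a four-tag set and probabilities we invented by hand purely for illustration; a real tagger estimates these numbers from an annotated corpus and uses a much larger tag set.

```python
import math

TAGS = ("PRON", "DET", "NOUN", "VERB")
# All probabilities below are hypothetical, hand-picked for this example.
START = {"PRON": 0.4, "DET": 0.4, "NOUN": 0.1, "VERB": 0.1}
TRANS = {  # P(next tag | current tag)
    "PRON": {"PRON": 0.05, "DET": 0.05, "NOUN": 0.1, "VERB": 0.8},
    "DET": {"PRON": 0.025, "DET": 0.025, "NOUN": 0.9, "VERB": 0.05},
    "NOUN": {"PRON": 0.1, "DET": 0.1, "NOUN": 0.2, "VERB": 0.6},
    "VERB": {"PRON": 0.2, "DET": 0.3, "NOUN": 0.4, "VERB": 0.1},
}
EMIT = {  # P(word | tag)
    "PRON": {"i": 0.5, "you": 0.5},
    "DET": {"my": 0.5, "the": 0.5},
    "NOUN": {"watch": 0.3, "birds": 0.3, "time": 0.4},
    "VERB": {"watch": 0.4, "stopped": 0.3, "love": 0.3},
}
UNSEEN = 1e-6  # smoothing for words a tag never emits


def viterbi(words):
    words = [w.lower() for w in words]
    # Each column maps tag -> (best log-prob of a path ending here, previous tag).
    best = [{t: (math.log(START[t]) + math.log(EMIT[t].get(words[0], UNSEEN)), None)
             for t in TAGS}]
    for word in words[1:]:
        column = {}
        for tag in TAGS:
            emit = math.log(EMIT[tag].get(word, UNSEEN))
            column[tag] = max(
                (best[-1][p][0] + math.log(TRANS[p][tag]) + emit, p) for p in TAGS
            )
        best.append(column)
    # Backtrack from the highest-scoring final tag.
    tag = max(TAGS, key=lambda t: best[-1][t][0])
    path = [tag]
    for column in reversed(best[1:]):
        tag = column[tag][1]
        path.append(tag)
    return path[::-1]


print(viterbi(["I", "watch", "birds"]))     # ['PRON', 'VERB', 'NOUN']
print(viterbi(["My", "watch", "stopped"]))  # ['DET', 'NOUN', 'VERB']
```

With these numbers, the word after a possessive like “my” is pulled toward NOUN by the transition probabilities, while after “I” it is pulled toward VERB.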

4.4 Named Entity Recognition (NER)

Named Entity Recognition is all about spotting the who, what, and where in a sentence. It picks out people, organizations, dates, brands, and locations—things that anchor text in the real world.

For example, in the sentence “UNESCO added Petra to its heritage list,” a good NER model would tag “UNESCO” as an organization and “Petra” as a location. This kind of labeling powers everything from smart search to automatic news tagging.

NER helps systems go from reading text to understanding what it's talking about.
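The simplest possible approach is a gazetteer, a hand-built list of known names. The sketch below uses a made-up four-entry list to show the input and output shape of NER, character offsets included. Its key limitation is also what makes trained models necessary: a lookup table cannot recognize a name it has never seen.

```python
import re

# Hypothetical gazetteer standing in for a trained NER model.
# Labels follow the examples used in this guide.
GAZETTEER = {"UNESCO": "ORG", "Petra": "LOC", "Zara": "BRAND", "Tokyo": "LOC"}


def tag_entities(text):
    """Return (entity, label, start, end) tuples sorted by position.

    start/end are character offsets with an exclusive end,
    so text[start:end] == entity.
    """
    found = []
    for name, label in GAZETTEER.items():
        # \b keeps "Petra" from matching inside a longer word such as "Petrarch".
        for match in re.finditer(r"\b" + re.escape(name) + r"\b", text):
            found.append((name, label, match.start(), match.end()))
    return sorted(found, key=lambda e: e[2])


print(tag_entities("UNESCO added Petra to its heritage list"))
# [('UNESCO', 'ORG', 0, 6), ('Petra', 'LOC', 13, 18)]
```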

4.5 Sentiment Analysis

Not all text is neutral. Sentiment analysis looks beneath the surface to figure out if a sentence is glowing with praise, dripping with sarcasm, or simply stating facts.

It’s the engine behind social listening tools, product review analysis, and even financial forecasting. A tweet like “That update just ruined my whole workflow” contains no insults or exclamation marks, yet a well-trained model still recognizes it as clearly negative.
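A lexicon-based scorer is the most basic version of this idea: add up hand-assigned word scores and flip the sign after a negator such as “not”. The word list and scores below are hypothetical. The sketch also shows why trained models are needed, since a sarcastic line like “Great, another update” still scores as positive.

```python
import re

# Hypothetical mini-lexicon; real lexicons cover thousands of words.
LEXICON = {"love": 2, "great": 2, "worth": 1, "ruined": -3,
           "cheap": -1, "broken": -2, "hate": -3}
NEGATORS = {"not", "never", "no"}


def sentiment(text):
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, tok in enumerate(tokens):
        value = LEXICON.get(tok, 0)
        # Flip polarity when the previous word is a negator ("not great").
        if i > 0 and tokens[i - 1] in NEGATORS:
            value = -value
        score += value
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("That update just ruined my whole workflow"))  # negative
print(sentiment("Great, another update"))  # positive (sarcasm is missed)
```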

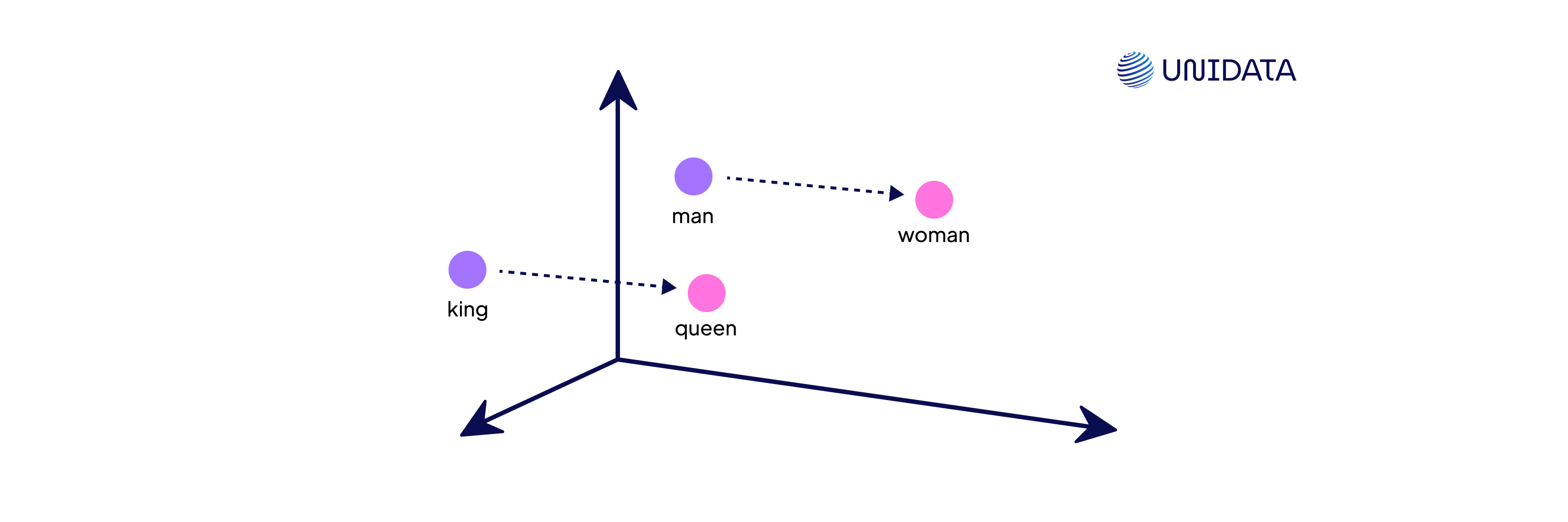

4.6 Word Embeddings & Transformers

Traditional models treat words as isolated symbols. Modern NLP instead uses word embeddings, which map each word to a dense vector so that words used in similar contexts end up close together. This lets a system capture that “king” and “queen” are related.
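Closeness between embeddings is usually measured with cosine similarity. The vectors below are hand-made five-dimensional toys whose dimensions we chose to loosely mean royalty, person, male, female and fruit; learned embeddings such as word2vec or GloVe have hundreds of dimensions with no human-readable labels. The `analogy` helper illustrates the well-known “king − man + woman ≈ queen” vector arithmetic.

```python
import math

# Hand-made toy vectors (hypothetical). Dimensions loosely mean:
# [royalty, person, male, female, fruit]
VECTORS = {
    "king": [0.9, 0.8, 0.7, 0.0, 0.0],
    "queen": [0.9, 0.8, 0.0, 0.7, 0.0],
    "man": [0.0, 0.8, 0.7, 0.0, 0.0],
    "woman": [0.0, 0.8, 0.0, 0.7, 0.0],
    "apple": [0.0, 0.0, 0.0, 0.0, 0.9],
}


def cosine(a, b):
    """Cosine similarity: 1.0 = same direction, 0.0 = orthogonal (unrelated)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def analogy(a, b, c):
    """Solve "a is to b as c is to ?" by finding the word closest to b - a + c."""
    target = [vb - va + vc for va, vb, vc in zip(VECTORS[a], VECTORS[b], VECTORS[c])]
    candidates = [w for w in VECTORS if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(VECTORS[w], target))


print(cosine(VECTORS["king"], VECTORS["queen"]) > cosine(VECTORS["king"], VECTORS["apple"]))  # True
print(analogy("man", "king", "woman"))  # queen
```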

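Then came transformers, like BERT and GPT, which revolutionized NLP by using self-attention to model not just word meaning but word context. Instead of processing words one at a time, as recurrent networks do, a transformer relates every word to all the other words it can see in a single step: BERT looks at the whole sentence in both directions, while GPT looks only at the words that come before. This is how these models pick up nuance, tone, and even ambiguity.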

This leap has powered everything from Google Search to ChatGPT—and it all started with how words are represented.
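At the heart of a transformer is scaled dot-product attention: each token scores every token it can see with a dot product divided by √d, turns the scores into weights with a softmax, and outputs the weighted average of those tokens’ vectors. The plain-Python sketch below simplifies heavily by using a single head and skipping the learned query, key and value projections; the `causal` flag shows the difference between BERT-style (all positions visible) and GPT-style (only earlier positions visible) attention.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, causal=False):
    """Single-head scaled dot-product self-attention over token vectors x.

    Simplification (assumption): queries, keys and values are x itself,
    i.e. the learned W_Q, W_K, W_V projections are replaced by identities.
    Returns (outputs, weights): one output vector and one weight list per token.
    """
    d = len(x[0])
    outputs, weights = [], []
    for i, query in enumerate(x):
        # Causal (GPT-style): token i sees positions 0..i only.
        # Bidirectional (BERT-style): token i sees every position.
        visible = list(range(i + 1)) if causal else list(range(len(x)))
        scores = [sum(q * k for q, k in zip(query, x[j])) / math.sqrt(d) for j in visible]
        w = softmax(scores)
        # Output is the attention-weighted average of the visible value vectors.
        out = [sum(wk * x[j][dim] for wk, j in zip(w, visible)) for dim in range(d)]
        outputs.append(out)
        weights.append(w)
    return outputs, weights


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
_, bert_style = self_attention(tokens)
_, gpt_style = self_attention(tokens, causal=True)
print([len(w) for w in bert_style])  # [3, 3, 3]
print([len(w) for w in gpt_style])   # [1, 2, 3]
```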

5. What Is Annotation in NLP?

Annotation is how we teach machines to understand human language. It’s the process of labeling text—manually or semi-automatically—so models can learn what’s important: emotions, topics, names, grammar, and more.

Behind the most powerful NLP systems is a mountain of annotated data—and human expertise guiding every label.

Common Types of NLP Annotation

| Annotation Type | What It Does | Example |
|---|---|---|
| Text Classification | Assigns a label to an entire sentence or message | “Can I return this if it doesn’t fit?” → Customer Support |
| Sentiment Annotation | Labels emotional tone as positive, negative, or neutral | “Totally worth the price” → Positive; “The fabric feels cheap” → Negative |
| Named Entity Recognition (NER) | Identifies real-world entities like people, brands, and places | “Zara just launched a new line in Tokyo.” → Zara: Brand, Tokyo: Location |
| Part-of-Speech Tagging | Tags each word with its grammatical role | “Leaves fall early this year.” → Leaves (Noun), fall (Verb), early (Adverb) |
| Dependency Parsing | Maps relationships between words in a sentence | “The barista handed Mia her coffee.” → ‘barista’ = subject, ‘Mia’ = indirect object |
| Intent & Slot Labeling | Tags what the user wants to do and the key details needed to do it | “Turn off the lights in the living room.” → Intent: Turn off device; Slots: device = lights, room = living room |
| Coreference Resolution | Links different expressions that refer to the same thing | “Leo dropped his phone. He picked it up quickly.” → He = Leo, it = phone |
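For token-level tasks such as NER and slot filling, span annotations are often converted into BIO labels before training: B- marks the first token of an entity, I- the tokens that follow, and O everything else. The sketch below assumes spans are exported as (start, end, label) character offsets with an exclusive end; that export format and the slot names are hypothetical.

```python
import re


def spans_to_bio(text, spans):
    """Convert character-offset spans to token-level BIO labels.

    spans: list of (start, end, label) tuples, end exclusive (a hypothetical
    export format). Tokens are runs of word characters or single punctuation marks.
    """
    result = []
    for match in re.finditer(r"\w+|[^\w\s]", text):
        start, end = match.span()
        tag = "O"  # outside any annotated span
        for s_start, s_end, label in spans:
            if s_start <= start and end <= s_end:
                # First token of a span gets B-, later tokens get I-.
                tag = ("B-" if start == s_start else "I-") + label
                break
        result.append((match.group(), tag))
    return result


text = "Turn off the lights in the living room"
# Hypothetical slot names; offsets are computed rather than typed by hand.
spans = []
for phrase, label in [("lights", "device"), ("living room", "room")]:
    start = text.index(phrase)
    spans.append((start, start + len(phrase), label))

for token, tag in spans_to_bio(text, spans):
    print(token, tag)
```

The intent itself (“Turn off device”) is a single sentence-level label, so it is stored alongside the BIO sequence rather than inside it.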

Annotation Work We Do

At our company, we provide expert human annotation services across a wide range of NLP and audio-focused tasks. Our team of linguists and data specialists has hands-on experience with:

Intent and slot labeling for smart assistants

We annotated voice commands like “play music” or “turn off the lights” to train systems that power smart homes, identifying both the intent and the entities within real user speech.

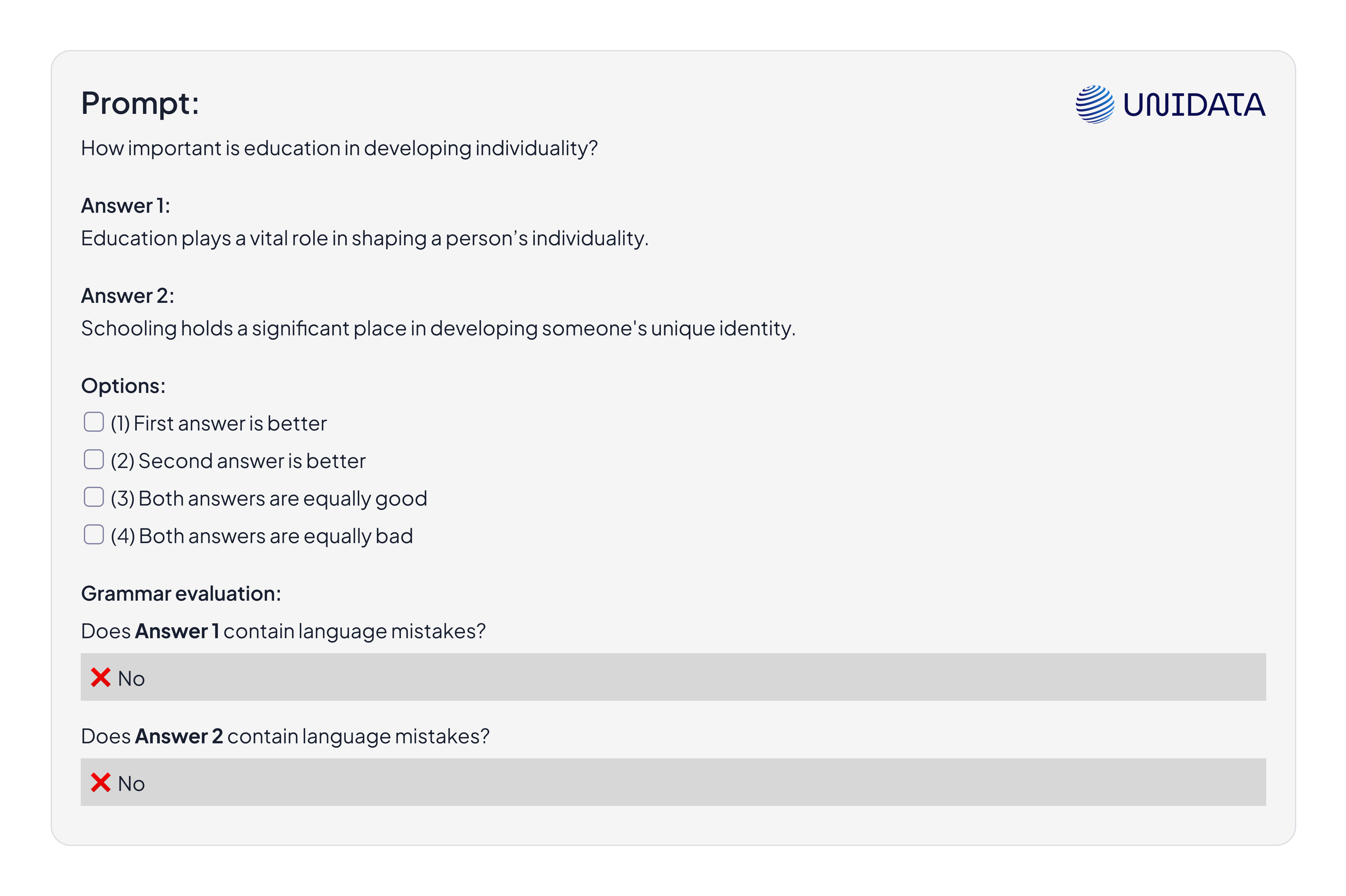

Side-by-side LLM response evaluation

We compared answers from language models to assess quality, helpfulness, and alignment—helping improve chatbot reliability and response ranking.
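Side-by-side judgments like these are commonly aggregated into a win rate for each pair of models. A minimal sketch, assuming each judgment is recorded as "A", "B" or "tie" and that a tie counts as half a win (a common but not universal convention):

```python
from collections import Counter

VALID = {"A", "B", "tie"}  # hypothetical label scheme


def win_rate(judgments):
    """Share of side-by-side comparisons won by model A; ties count as half."""
    counts = Counter(judgments)
    unknown = set(counts) - VALID
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no judgments to aggregate")
    return (counts["A"] + 0.5 * counts["tie"]) / total


print(win_rate(["A", "A", "B", "tie"]))  # 0.625
```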

Morphological tagging for complex languages

We labeled grammatical categories such as gender, number, and case to support accurate parsing and generation in morphologically rich languages like Russian.

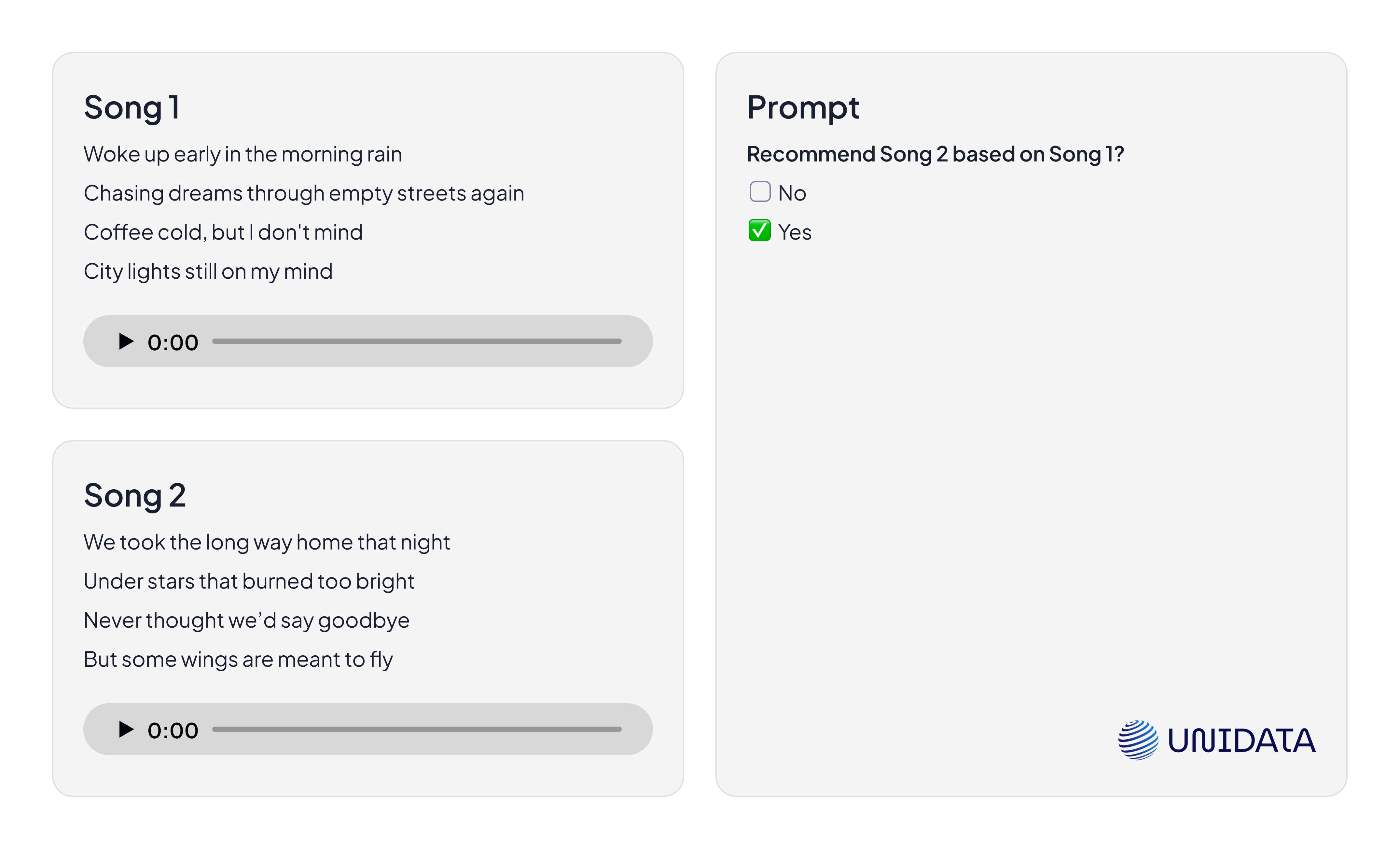
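Morphological tags are often stored in the Universal Dependencies FEATS style: alphabetically ordered Feature=Value pairs separated by “|”. The sketch below parses that format. The Russian analyses are our own illustrative examples, not taken from a treebank; they show why human annotators matter, since the form “книги” on its own can be genitive singular or nominative or accusative plural, and only context decides.

```python
def parse_feats(feats):
    """Parse a Universal Dependencies-style FEATS string into a dict.

    "Case=Nom|Number=Sing" -> {"Case": "Nom", "Number": "Sing"};
    "_" (the CoNLL-U placeholder) means no features.
    """
    if feats == "_":
        return {}
    return dict(pair.split("=", 1) for pair in feats.split("|"))


# Illustrative analyses written for this example (not from a treebank).
# Out of context, "книги" has three possible readings; an annotator picks
# the one that fits each occurrence.
ANALYSES = {
    "книга": ["Case=Nom|Gender=Fem|Number=Sing"],
    "книги": [
        "Case=Gen|Gender=Fem|Number=Sing",
        "Case=Nom|Gender=Fem|Number=Plur",
        "Case=Acc|Gender=Fem|Number=Plur",
    ],
}

for form, readings in ANALYSES.items():
    for reading in readings:
        print(form, parse_feats(reading))
```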

Music recommendation annotation

In a creative multimodal task, we read the lyrics of two songs and listened to both tracks to decide whether the second could be recommended based on the first—merging textual and audio understanding.

Chatbot safety annotation

We categorized sensitive content related to politics, national laws, and profanity—helping filter harmful language and align responses with legal and ethical standards.

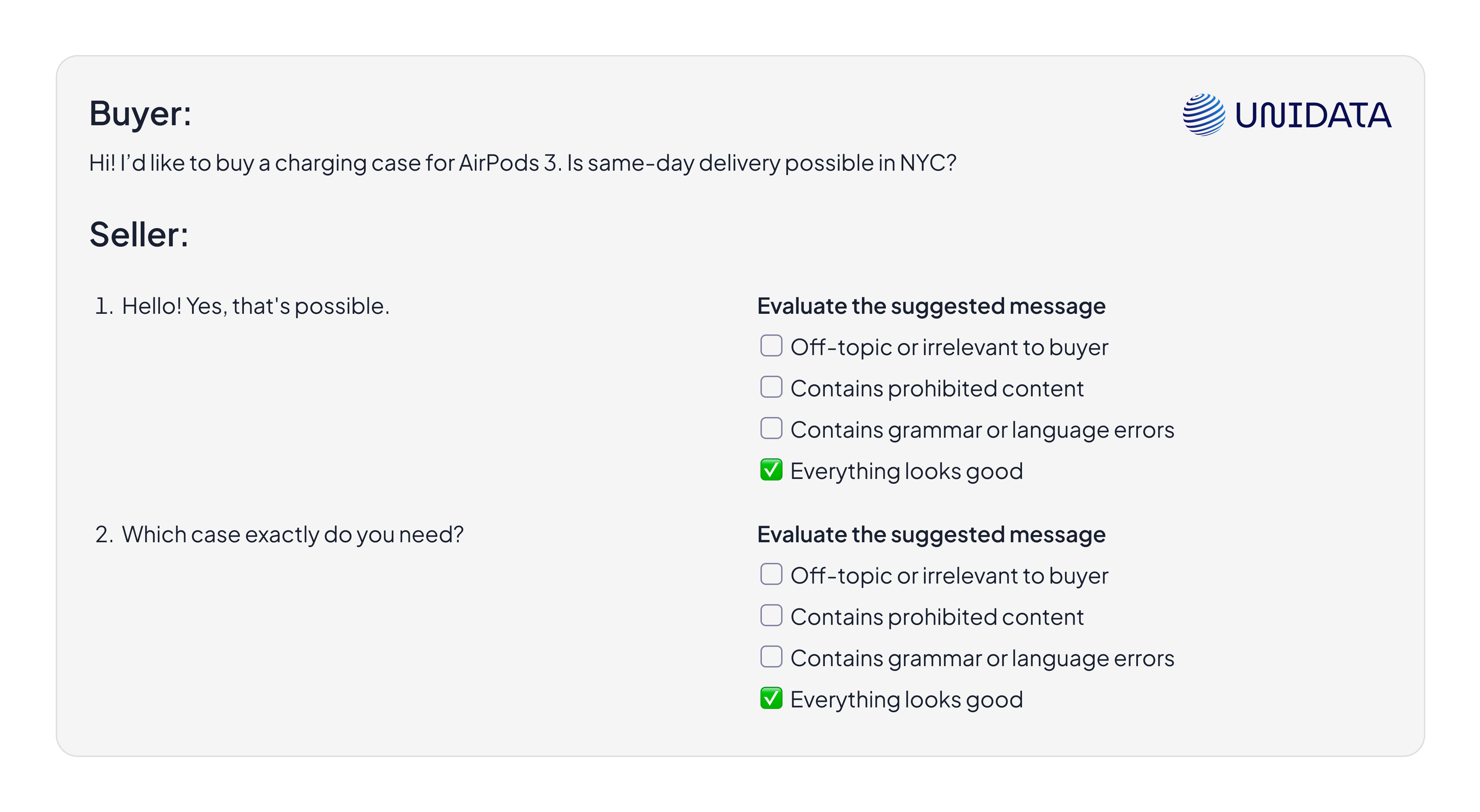

Smart reply suggestions

We annotated dialogue data to train chatbots on generating context-aware suggestions—so buyers and sellers on marketplaces receive relevant, pre-filled response options during conversations.

6. Challenges in NLP

For all its progress, NLP still faces some tough roadblocks. Human language is messy, flexible, and constantly changing—which makes teaching machines to understand it a serious challenge. Here are a few of the biggest hurdles developers and researchers are working to overcome.

Language Ambiguity

The same word can mean different things depending on where, how, or by whom it’s used. Add sarcasm, irony, or slang, and things get even more confusing. Machines still struggle with the kind of nuance we handle without thinking.

Data Quality

If the training data is biased, inconsistent, or noisy, the model will be too. And with massive datasets, questions about privacy, consent, and ethical sourcing become harder to ignore.

Multilingual Complexity

Languages differ in structure, alphabet, and cultural context. A model trained on English might completely miss the mark when working with Japanese, Swahili, or Arabic.

Computational Load

Advanced models come with a cost—literally. Training and running large language models requires serious computing power, making them expensive and less sustainable.

Language Drift

The way we speak and write changes fast. New slang, expressions, and references pop up constantly. Without regular updates, models can quickly sound outdated—or simply fail to understand what’s being said.

7. Real-World Use Cases

From triaging patients to flagging toxic tweets, NLP has moved well beyond labs and into the fabric of everyday operations across industries. Here’s how it’s making an impact.

Healthcare

In hospitals and clinics, NLP is being used to analyze electronic health records, identify potential symptoms early, and even automate patient triage. Systems can flag mentions of adverse drug reactions buried in doctors’ notes or detect high-risk patients based on their medical history.

The Mayo Clinic has shown how NLP can help pinpoint at-risk individuals by mining vast amounts of clinical text.

Finance

Banks and financial institutions use NLP to parse lengthy contracts, assess loan risk, and monitor news sentiment in real time. Algorithms scan headlines and market updates to flag market-moving news, often before most human traders can react.

Bloomberg employs NLP-driven models to distill financial news into actionable insights within seconds.

E-commerce

Scroll through reviews on any marketplace, and NLP is probably behind the scenes—analyzing sentiment, ranking products, and helping chatbots guide your shopping experience. By understanding user feedback at scale, platforms fine-tune recommendations and spot issues faster.

Companies like Amazon use NLP to make sense of millions of customer reviews and refine what you see first.

Social Media Monitoring

On platforms like Twitter or TikTok, NLP is the engine that keeps feeds clean and relevant. It moderates toxic language, flags policy violations, and highlights trending topics by scanning millions of posts in real time.

These tools help platforms fight spam, elevate positive engagement, and react quickly to viral conversations.

Legal

In the legal world, time is money—and there’s never enough of either. NLP speeds up contract review, categorizes documents, and streamlines e-discovery processes. Instead of sifting through thousands of pages manually, lawyers can zero in on relevant info in seconds.

Law firms and legal tech platforms now rely on NLP to cut research time and boost case preparation efficiency.

8. Ethical and Societal Implications

As NLP systems become more integrated into daily life, ethical concerns grow:

Bias and Fairness: Models can inadvertently learn and amplify societal biases. If a model is trained on biased data, it can discriminate against certain groups in decisions like loan approvals or job screenings.

Privacy: Large-scale text analysis can reveal sensitive personal information. Strict data handling practices are crucial.

Misinformation: Advanced text generation models can produce highly realistic but false narratives. Tools like GPT have sparked discussions on AI-generated misinformation campaigns.

Regulations: Governments and regulatory bodies are starting to draft guidelines for responsible AI, including how NLP systems should be audited or monitored.

Reference: The European Commission’s guidelines on AI ethics (https://digital-strategy.ec.europa.eu/) emphasize transparency, accountability, and robustness as key principles.

9. Future Trends in NLP

NLP is moving beyond words on a screen. One major shift is toward multimodal learning—where models learn not just from text, but also from images, audio, and video. Think of a system that understands a tweet better because it also “sees” the video attached or hears the speaker’s tone.

At the same time, we’re seeing the rise of massive multilingual models. These systems can handle over 100 languages with impressive fluency, bringing us closer to AI that feels truly global and culturally aware.

Another big step forward is few-shot and zero-shot learning. These techniques let models pick up new tasks from just a few examples, often supplied directly in the prompt, or from a plain instruction with no examples at all. It’s a faster, more flexible way to adapt systems without collecting large labeled datasets.
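With large language models, few-shot learning often just means placing labeled examples in the prompt instead of retraining the model. The helper below only builds such a prompt; the “Text:/Label:” layout is a hypothetical convention, and sending the prompt to a model is deliberately left out. Passing an empty list of examples turns it into a zero-shot prompt.

```python
def build_few_shot_prompt(examples, query,
                          instruction="Classify the sentiment as Positive or Negative."):
    """Assemble an instruction, labeled examples, and an unlabeled query.

    examples: list of (text, label) pairs; an empty list gives a zero-shot prompt.
    The "Text:/Label:" layout is a hypothetical convention, not a required format.
    """
    blocks = [instruction]
    for text, label in examples:
        blocks.append(f"Text: {text}\nLabel: {label}")
    # Leave the final label blank so the model's continuation is its answer.
    blocks.append(f"Text: {query}\nLabel:")
    return "\n\n".join(blocks)


print(build_few_shot_prompt(
    [("Totally worth the price", "Positive"), ("The fabric feels cheap", "Negative")],
    "That update just ruined my whole workflow",
))
```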

We’re also entering the age of personalized NLP. Instead of a one-size-fits-all voice, future models will adjust to the way you write, speak, and search—becoming more helpful by sounding more like you.

And as privacy concerns grow, federated learning is gaining traction. This approach lets models train directly on your device and share only model updates, not your raw data, with a central server. It’s a major step toward building smarter systems without compromising user control or security.
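The server-side step of Federated Averaging (FedAvg), a standard federated learning algorithm, fits in a few lines: each device trains locally and reports its updated weights plus how many examples it used, and the server takes a weighted average. Representing a model as one flat list of floats is a simplifying assumption.

```python
def federated_average(client_updates):
    """One server-side aggregation step of Federated Averaging (FedAvg).

    client_updates: list of (weights, num_examples) pairs, where weights is a
    flat list of floats produced by local training on one device (a simplifying
    assumption; real models have many tensors). Only these numbers reach the
    server; the text each device trained on stays local.
    """
    total = sum(n for _, n in client_updates)
    if total == 0:
        raise ValueError("clients reported no training examples")
    size = len(client_updates[0][0])
    # Weighted mean per parameter: devices with more data count for more.
    return [sum(w[i] * n for w, n in client_updates) / total for i in range(size)]


print(federated_average([([1.0, 2.0], 1), ([3.0, 4.0], 3)]))  # [2.5, 3.5]
```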

Together, these trends are shaping a future where NLP is more adaptive, more human, and far more powerful than ever before.

10. Conclusion

NLP has come a long way. What started with basic rules has grown into powerful tools that can understand, generate, and work with language in real time. Today, NLP is used everywhere—from helping doctors read patient notes to making customer chats faster and smarter. And it’s becoming more useful every day.

But as the technology improves, it’s important to use it wisely. Good data, fairness, and clear goals matter just as much as smart models. The future of NLP is bright—and it’s already changing how we read, write, and connect.