Why is Training Data Important?

Training data is the foundation of Machine Learning (ML) models. Without good-quality training data, even the most advanced algorithms may fail to deliver meaningful results. By some estimates, 80-90% of the time spent on ML projects goes into handling and preparing data, a figure that underscores the vital role of high-quality training data in developing effective AI systems (Forbes).

Training data refers to datasets used to teach machine learning models how to process information, recognize patterns, and make predictions. High-quality, well-labeled datasets enable ML/AI models to understand the relationships between features and outcomes, directly impacting their performance and accuracy. Diverse and representative data not only helps in capturing the complexities of real-world scenarios but also minimizes biases that can lead to flawed predictions.

For instance, a model trained on thousands of images of cats and dogs will learn to distinguish between these animals.

This article walks you through the entire process of preparing training data for ML and AI projects, helping you understand each step, even if you're new to the field.

Types of Training Data

Real vs. Synthetic Data

Real Data

Real data is collected from actual sources in the real world, such as user interactions, sensor readings, or financial transactions. It reflects genuine, often complex conditions, making it essential for models needing real-world applicability. However, collecting real data can be costly and may involve privacy or regulatory challenges.

Synthetic Data

Synthetic data is artificially generated using algorithms or simulations. It’s useful when real data is scarce, expensive, or sensitive. While it may not fully capture real-world complexity, advancements in data generation techniques (e.g., GANs) are improving its realism, making it a cost-effective and scalable alternative for training models.

Structured vs. Unstructured Data

| Type | Description | Examples and Use Cases |
|---|---|---|
| Structured Data | Organized and formatted, found in databases or spreadsheets. | Fraud detection, financial analysis |
| Unstructured Data | Lacks a specific format; includes text, images, and videos. | NLP, computer vision, sentiment analysis |
| Semi-Structured Data | Contains some organizational structure, but not as rigid as structured data. | XML, JSON files |
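To make the semi-structured row concrete, here is a minimal standard-library sketch that flattens nested JSON records into a structured, table-like form. The records and field names are hypothetical; pandas users would more typically reach for `pandas.json_normalize`.

```python
import json

# Hypothetical semi-structured records, e.g. as returned by a JSON API.
RAW = """
[
  {"id": 1, "customer": {"name": "Ana", "country": "PT"}, "tags": ["new"]},
  {"id": 2, "customer": {"name": "Ben"}, "tags": []}
]
"""


def flatten(record, parent_key="", sep="."):
    """Flatten nested dicts into one level with dotted keys."""
    flat = {}
    for key, value in record.items():
        full_key = f"{parent_key}{sep}{key}" if parent_key else key
        if isinstance(value, dict):
            flat.update(flatten(value, full_key, sep))
        elif isinstance(value, list):
            # Join lists into a string so every cell holds a scalar value.
            flat[full_key] = ",".join(map(str, value))
        else:
            flat[full_key] = value
    return flat


def to_table(records):
    """Return (columns, rows); fields missing from a record become None."""
    flat_rows = [flatten(r) for r in records]
    columns = sorted({k for row in flat_rows for k in row})
    rows = [[row.get(c) for c in columns] for row in flat_rows]
    return columns, rows


columns, rows = to_table(json.loads(RAW))
print(columns)  # ['customer.country', 'customer.name', 'id', 'tags']
```

Fields that are absent in some records (here, Ben's country) surface as `None`, which is exactly the kind of gap that later preprocessing has to handle.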

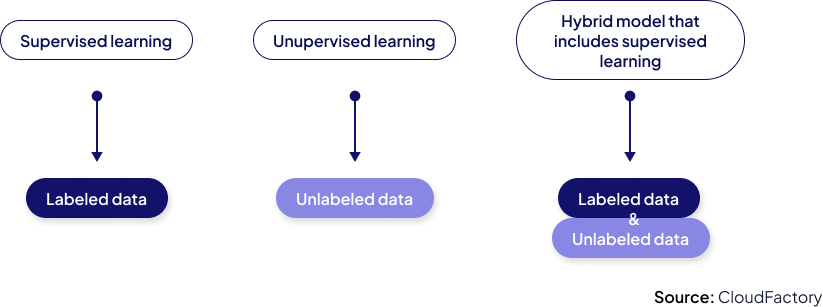

Labeled vs. Unlabeled Data

Labeled Data

Labeled data consists of input data annotated with meaningful labels that provide context for machine learning models. Each data point is paired with a target output, which is essential for supervised learning. While labeled data is crucial for training models to recognize patterns, creating it can be time-consuming and costly.

Unlabeled Data

Unlabeled data lacks annotations; because it requires no labeling effort, it is more abundant and easier to collect. Used in unsupervised learning, it allows models to identify patterns without predefined outputs. Although it can reveal hidden structures, extracting meaningful insights often requires advanced techniques. Unlabeled data can enhance model performance, especially when combined with limited labeled data in semi-supervised learning.

Time-Series and Sequential Data

Time-Series Data

Time-series data is a sequence of data points indexed in time order. It’s commonly used to track changes over time, such as stock prices, sensor readings, or temperature measurements. In machine learning, models trained on time-series data learn to recognize trends, cycles, or irregular patterns.

Sequential Data

Sequential data refers to any data where the order of elements matters, but it's not strictly tied to a time index. This type of data is common in text (NLP) or genomic sequences (ordered strings of DNA nucleotides), where the order of words or nucleotides affects the overall meaning or function.
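Because order matters in both time-series and sequential data, a common preparation step is to turn one long sequence into supervised (input window, target) pairs with a sliding window. The sketch below assumes a plain list of numbers; the function name and the temperature values are illustrative.

```python
def make_windows(series, window, horizon=1):
    """Turn an ordered sequence into (input window, target) pairs.

    Each input holds `window` consecutive values; the target is the value
    `horizon` steps after the window ends. Order is preserved, so no
    future value ever appears inside an input.
    """
    if window < 1 or horizon < 1:
        raise ValueError("window and horizon must be positive")
    pairs = []
    for start in range(len(series) - window - horizon + 1):
        inputs = series[start:start + window]
        target = series[start + window + horizon - 1]
        pairs.append((inputs, target))
    return pairs


temps = [21.0, 22.5, 23.1, 22.8, 24.0]  # hypothetical daily temperatures
for inputs, target in make_windows(temps, window=3):
    print(inputs, "->", target)
# [21.0, 22.5, 23.1] -> 22.8
# [22.5, 23.1, 22.8] -> 24.0
```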

Characteristics of High-Quality Training Data

| Characteristic | Description |
|---|---|
| Relevance | Training data must directly relate to the problem being solved. Relevant data enables the model to learn meaningful patterns that improve its performance. |
| Accuracy | Accurate labeling is crucial for supervised learning models. Errors in data can mislead the model, reducing its effectiveness and reliability. |
| Consistency | Uniform data formatting and labeling ensure smooth learning for the model. Inconsistent data can confuse the model, lowering its generalization ability. |
| Diversity and Representativeness | Diverse data helps the model handle various real-world scenarios, avoiding overfitting and improving adaptability to new situations. |
| Size and Scalability | A dataset should be large enough for the model to learn effectively. While bigger datasets often lead to better performance, they require careful management to ensure scalability with available resources. |

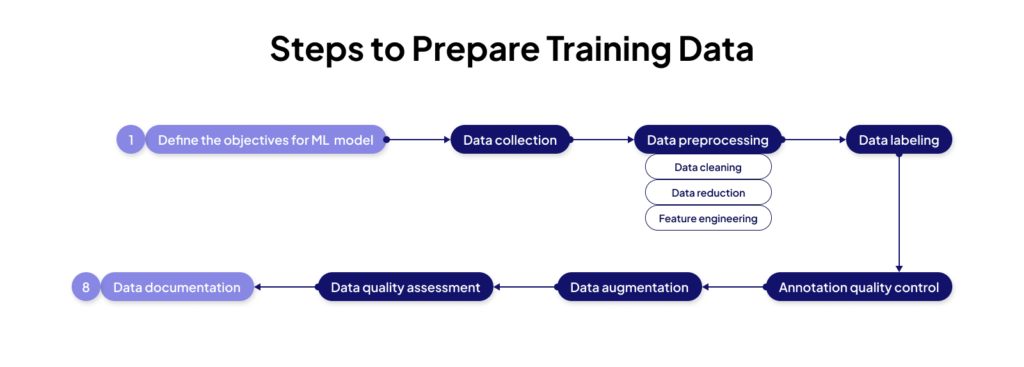

How to Prepare Training Data: Step by Step

Here is a brief overview of the steps to prepare high-quality training data. We will take a closer look at each step.

Step 0: Define the Problem and Objectives

Before embarking on a machine learning project, it is essential to clearly define the problem that the ML model will address and establish specific objectives. This step sets the scope of the project and guides the selection of data and models. A well-defined problem ensures that resources are focused on relevant tasks and prevents potential misalignment between the business goals and the AI solution.

Steps Involved:

- Identify the Business Challenge: Specify the issue to be resolved, such as customer churn prediction, fraud detection, or product recommendation enhancement.

- Set Measurable Objectives: Determine the goals, such as improving customer retention by a certain percentage or enhancing the accuracy of a recommendation system.

Example:

For an e-commerce company aiming to improve its product recommendation system, the objective may be to increase the accuracy of recommendations by analyzing past customer behavior and purchase history.
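A measurable objective needs a concrete metric. For the recommendation example, one common choice is precision@k: the share of the top-k recommended items that the customer actually bought. The sketch below assumes that metric and uses hypothetical session data; other metrics (recall@k, NDCG) may suit a given business goal better.

```python
def precision_at_k(recommended, purchased, k):
    """Share of the top-k recommended items that the customer actually bought."""
    if k <= 0:
        raise ValueError("k must be positive")
    hits = sum(1 for item in recommended[:k] if item in purchased)
    return hits / k


def mean_precision_at_k(sessions, k):
    """Average precision@k over (recommended_items, purchased_items) pairs."""
    if not sessions:
        raise ValueError("need at least one session")
    scores = [precision_at_k(rec, set(bought), k) for rec, bought in sessions]
    return sum(scores) / len(scores)


# Hypothetical evaluation data: what was recommended vs. what was bought.
sessions = [
    (["shoes", "socks", "hat"], ["socks"]),
    (["laptop", "mouse", "bag"], ["mouse", "bag"]),
]
baseline = mean_precision_at_k(sessions, k=3)
print(f"precision@3 = {baseline:.2f}")  # precision@3 = 0.50
```

With a baseline in hand, the objective can be stated as a target improvement on this number rather than a vague "better recommendations."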

Case study: Product Grouping for E-commerce (a major online classifieds platform; 20,000 listings with triple annotation overlap; 2 months).

Step 1: Data Collection

Data collection is the first critical step in preparing training data for Machine Learning models. This process involves gathering relevant information from various sources that will serve as the input data for the model. The quality and relevance of the collected data are crucial as they directly impact the model's ability to learn and generalize effectively.

Why is Data Collection Important?

The model can only be as good as the data it learns from. If the data is incomplete, unrepresentative, or biased, the model's performance will reflect these limitations. Proper data collection ensures that the model is trained on diverse and relevant information, allowing it to perform well across various scenarios.

Types of Data Collection:

- Internal Sources:

- Data from within the organization, such as customer transactions, sales records, or user activity logs.

- Example: An e-commerce platform may collect customer purchase history, website navigation behavior, and product ratings to enhance its recommendation system.

- External Sources:

- Data from public datasets, APIs, or external organizations that offer relevant information. Government reports, social media data, and industry-specific datasets are common external sources.

- Example: For natural language processing (NLP) tasks, large corpora of text data may be collected from news websites, online forums, or public social media posts.

- Web Scraping and APIs:

- Automated tools can scrape publicly available data from websites, or APIs can be used to retrieve data from online platforms in a structured format.

- Example: A company building a price comparison model may scrape product prices from different e-commerce websites using automated web scraping tools or APIs.

- Data Brokers and Third-Party Providers:

- In many industries, specialized companies collect and sell data for particular use cases, such as financial transactions, healthcare records, or geolocation data.

- Example: A financial institution may purchase consumer credit data from third-party providers to develop a model predicting default risks.

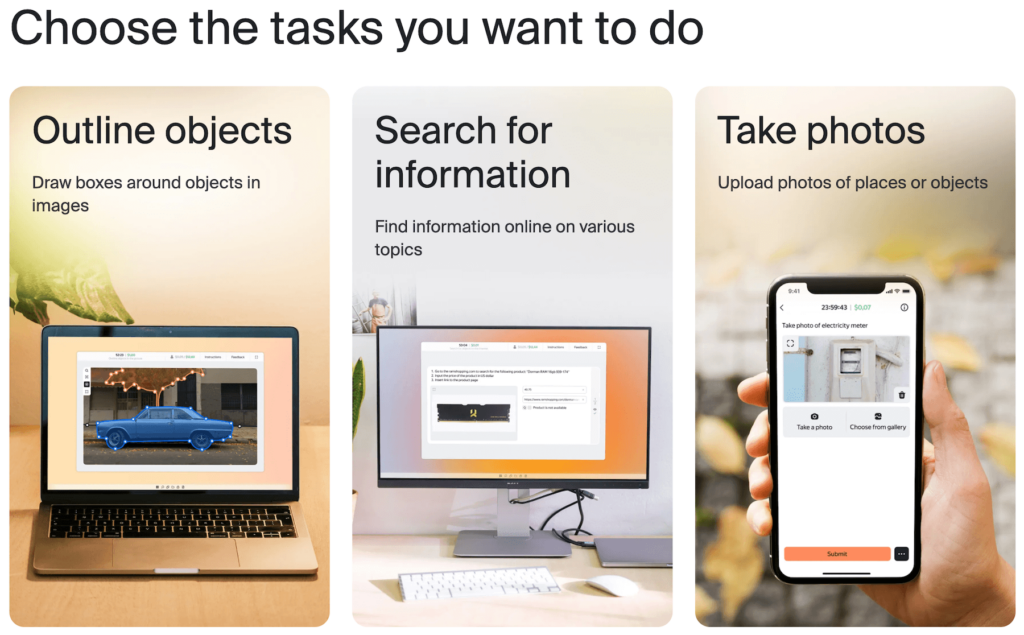

- Crowdsourcing

- Crowdsourcing involves collecting data from a large group of people, typically through online platforms. It's useful for tasks like data labeling or quickly collecting diverse information.

- Example: A company may crowdsource image labeling for its AI model by having contributors annotate thousands of images on platforms like Amazon Mechanical Turk or Toloka.

Step 2: Data Preprocessing

Data preprocessing refers to the steps taken to clean, transform, and prepare raw data before feeding it into a machine learning model. The goal is to ensure the data is structured, complete, and ready for the model to learn from it efficiently.

What Makes Data Preprocessing Essential?

Real-world data is rarely clean or in the right format for machine learning models. Data preprocessing minimizes errors, biases, and inconsistencies in the data. Without proper preprocessing, even the most sophisticated models can produce inaccurate results or fail to train effectively.

Key Data Preprocessing Steps

a. Data Cleaning

This involves identifying and correcting inaccuracies in the data, such as missing values, duplicates, or incorrect entries. Data Cleaning includes:

- Outlier Detection:

Outliers, or extreme values that deviate significantly from the rest of the data, can skew model training. Detecting and handling outliers (either by removing them or adjusting their impact) helps ensure that the model doesn’t learn patterns that are unrepresentative of the overall data.

Example: A few unusually large purchases in an e-commerce dataset might be outliers that should be treated differently to prevent the model from being biased.

- Normalization and Scaling:

Ensuring that numerical data is on a consistent scale is essential for many models, especially algorithms like support vector machines (SVM) or gradient-based methods. Normalization (scaling values to the range 0 to 1) and standardization (adjusting data to have a mean of 0 and a standard deviation of 1) are common techniques. However, scaling is not needed everywhere: tree-based models such as decision trees and random forests are largely insensitive to feature scale, and categorical features are encoded rather than scaled.

Example: In financial data, dollar amounts for transactions may need to be scaled down to avoid overwhelming the model with large values.

- Handling Missing Data:

Missing data can be addressed in several ways, such as imputation (filling in missing values based on statistical methods), removing rows with missing values, or using algorithms that can handle missing data.

Example: In medical datasets, where missing values for certain tests may be common, imputing the missing data with averages or using other techniques can help preserve useful information for model training.
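The cleaning operations above can be sketched in plain Python with the standard `statistics` module. This assumes one numeric column stored as a list, with `None` marking missing values; the IQR rule (Tukey's fences with k = 1.5) is used as the outlier detector here, which is one common choice among several.

```python
from statistics import mean, pstdev, quantiles


def impute_mean(values):
    """Replace missing entries (None) with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]


def iqr_outliers(values, k=1.5):
    """Return values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


def min_max_scale(values):
    """Normalize values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)  # constant column: nothing to scale
    return [(v - lo) / (hi - lo) for v in values]


def standardize(values):
    """Rescale values to mean 0 and (population) standard deviation 1."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0] * len(values)
    return [(v - mu) / sigma for v in values]


amounts = [20, 22, 25, 24, 21, 23, 500]  # hypothetical purchase amounts
print(iqr_outliers(amounts))        # [500]
print(min_max_scale([10, 20, 30]))  # [0.0, 0.5, 1.0]
```

Two practical cautions: mean imputation is itself pulled by outliers, so it is often safer to handle outliers first or impute with the median; and scaling parameters (min/max, mean/standard deviation) should be computed on the training data only and then reused for validation and test data.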

b. Data Reduction

Data reduction simplifies large datasets by removing redundant or less important features, making models faster and more efficient. Dimensionality reduction techniques like Principal Component Analysis (PCA) or feature selection are commonly used to focus on the most critical variables.

Example: In genomics, datasets often contain thousands of genes. Using feature selection, only the most relevant genes linked to disease are retained, reducing dimensionality and improving the accuracy of disease classification models.
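A simple filter-style feature selection, in the spirit of the genomics example, can be sketched as: drop near-constant features, then keep the k features most correlated with the label. This is a deliberately basic technique swapped in for illustration, not PCA (which builds new combined features instead); the gene names and values are made up. scikit-learn offers production versions via `VarianceThreshold` and `SelectKBest`.

```python
from statistics import mean, pvariance


def pearson(xs, ys):
    """Pearson correlation of two equal-length numeric lists (assumes neither is constant)."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy)


def select_features(columns, target, k, min_variance=1e-8):
    """Keep the k features with the largest absolute correlation to the target.

    columns: dict mapping feature name -> list of numeric values.
    Near-constant features are dropped first: they carry no signal and
    would make the correlation undefined.
    """
    candidates = {n: v for n, v in columns.items() if pvariance(v) > min_variance}
    ranked = sorted(candidates, key=lambda n: abs(pearson(candidates[n], target)), reverse=True)
    return ranked[:k]


genes = {
    "gene_a": [1, 2, 8, 9],
    "gene_b": [5, 5, 5, 5],  # constant: removed by the variance filter
    "gene_c": [3, 1, 2, 2],
    "gene_d": [4, 3, 2, 1],
}
disease = [0, 0, 1, 1]  # hypothetical binary label
print(select_features(genes, disease, k=2))  # ['gene_a', 'gene_d']
```

As with scaling, the selection should be decided on training data only, otherwise information from the test set leaks into the choice of features.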

c. Feature Engineering

Feature engineering is a key preprocessing step where new features are created or existing ones are transformed to enhance model performance. This process can involve encoding categorical data, creating interaction terms, or extracting new meaningful features from raw data.

Example: In customer transaction data, raw timestamps can be transformed into useful features such as "time of day" or "day of the week," providing more insights into customer purchasing behavior.
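The timestamp example can be sketched with Python's `datetime` module. The time-of-day bucket boundaries below are arbitrary assumptions, and the function name is illustrative; the one-hot loop shows one way to encode the categorical day for models that need numeric input.

```python
from datetime import datetime

DAYS = ["Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun"]  # datetime.weekday(): Monday == 0


def time_of_day(hour):
    """Bucket an hour (0-23) into a coarse period; boundaries are arbitrary choices."""
    if 5 <= hour < 12:
        return "morning"
    if 12 <= hour < 17:
        return "afternoon"
    if 17 <= hour < 22:
        return "evening"
    return "night"


def timestamp_features(timestamp_iso):
    """Derive calendar features from an ISO-8601 timestamp string."""
    ts = datetime.fromisoformat(timestamp_iso)
    day = DAYS[ts.weekday()]
    features = {
        "hour": ts.hour,
        "time_of_day": time_of_day(ts.hour),
        "day_of_week": day,
        "is_weekend": ts.weekday() >= 5,
    }
    # One-hot encode the day: exactly one dow_* column is 1.
    for d in DAYS:
        features[f"dow_{d}"] = int(d == day)
    return features


feats = timestamp_features("2024-03-09T19:45:00")
print(feats["day_of_week"], feats["time_of_day"])  # Sat evening
```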

Step 3: Data Labeling

Data labeling is the process of annotating or tagging raw data to provide meaningful information to machine learning models. For supervised learning tasks, data must be labeled so the model can learn the relationships between the input data (features) and the expected output (labels).

Why is Data Labeling Important?

In supervised learning, the model must learn to map inputs to specific outputs. Without labeled data, the model cannot be trained to make accurate predictions. Labeling provides the model with the context needed to identify patterns in the data.

Types of Data Labeling:

- Manual Labeling:

    - Human annotators manually tag the data. This is common for tasks that require high accuracy or deep understanding, such as labeling medical images for disease detection or categorizing customer support emails by topic.

    - Example: A radiology dataset might require experts to manually label X-ray images as "healthy" or "diseased" to train an AI model for diagnostic purposes.

- Automated Labeling:

    - In some cases, automated algorithms can generate labels. For instance, sentiment analysis tools can assign "positive," "negative," or "neutral" labels to text data based on predefined rules.

    - Example: Automated labeling systems may classify thousands of product reviews by customer satisfaction, allowing a model to analyze customer feedback efficiently.

- Human-in-the-Loop Labeling:

    - This approach combines automated labeling with human validation to ensure higher accuracy. Automated systems can process large datasets quickly, while human oversight catches and corrects errors or misclassifications.

    - Example: In image recognition, an automated system might label most images with high confidence, while humans review the uncertain remainder (for example, the 10% of images with the lowest confidence scores) to correct mistakes, especially in edge cases.
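The human-in-the-loop pattern above can be sketched as confidence-based routing: predictions above a threshold are accepted automatically, the rest go to a review queue, and human decisions override the model. The 0.9 threshold, the `Prediction` record, and the item IDs are assumptions for illustration; routing on confidence only works well if the model's scores are reasonably calibrated.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model's probability for `label`, assumed calibrated


def route(predictions, threshold=0.9):
    """Split predictions into auto-accepted labels and a human review queue."""
    accepted, review_queue = [], []
    for p in predictions:
        if p.confidence >= threshold:
            accepted.append(p)
        else:
            review_queue.append(p)
    return accepted, review_queue


def merge_reviews(accepted, human_labels):
    """Combine auto-accepted labels with human decisions (item_id -> label).

    Human decisions take precedence if an item appears in both.
    """
    final = {p.item_id: p.label for p in accepted}
    final.update(human_labels)
    return final


preds = [
    Prediction("img1", "cat", 0.97),
    Prediction("img2", "dog", 0.55),
    Prediction("img3", "cat", 0.91),
]
accepted, queue = route(preds)
print([p.item_id for p in queue])  # ['img2']
final = merge_reviews(accepted, {"img2": "cat"})  # a reviewer corrected img2
```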

Importance of High-Quality Labeled Data:

- Impact on Accuracy: High-quality, accurate labels improve the performance of ML models significantly, ensuring they can generalize better to new, unseen data.

Quality Control in Annotation

Quality control ensures that annotations are correct and consistent across the dataset. Methods to achieve this include:

- Consensus Labeling: Multiple annotators work on the same data, and the final label is based on majority agreement.

- Spot Checks: Randomly reviewing a subset of labeled data to assess accuracy.

- Automated Validation: Using algorithms to verify the consistency and correctness of labels.

- Training Annotators: Providing clear guidelines and continuous feedback to annotators to minimize human error.
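Consensus labeling can be sketched as a majority vote per item, with low-agreement items flagged for expert review rather than silently resolved. The two-thirds agreement threshold and the listing data are assumptions; with three annotators per item it means at least two must agree.

```python
from collections import Counter


def consensus(annotations, min_agreement=2 / 3):
    """Aggregate several annotators' labels per item by majority vote.

    annotations: dict mapping item_id -> list of labels from different annotators.
    Returns (final_labels, disputed), where disputed items are those whose
    top label received less than `min_agreement` of the votes.
    """
    final, disputed = {}, []
    for item_id, labels in annotations.items():
        label, votes = Counter(labels).most_common(1)[0]
        if votes / len(labels) >= min_agreement:
            final[item_id] = label
        else:
            disputed.append(item_id)
    return final, disputed


def agreement_rate(annotations):
    """Share of items on which all annotators gave the same label."""
    unanimous = sum(1 for labels in annotations.values() if len(set(labels)) == 1)
    return unanimous / len(annotations)


annotations = {
    "listing1": ["shoes", "shoes", "shoes"],
    "listing2": ["shoes", "boots", "shoes"],
    "listing3": ["bag", "shoes", "boots"],
}
final, disputed = consensus(annotations)
print(final)     # {'listing1': 'shoes', 'listing2': 'shoes'}
print(disputed)  # ['listing3']
```

A falling agreement rate over time is also a useful spot-check signal that guidelines are unclear or annotators need retraining.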

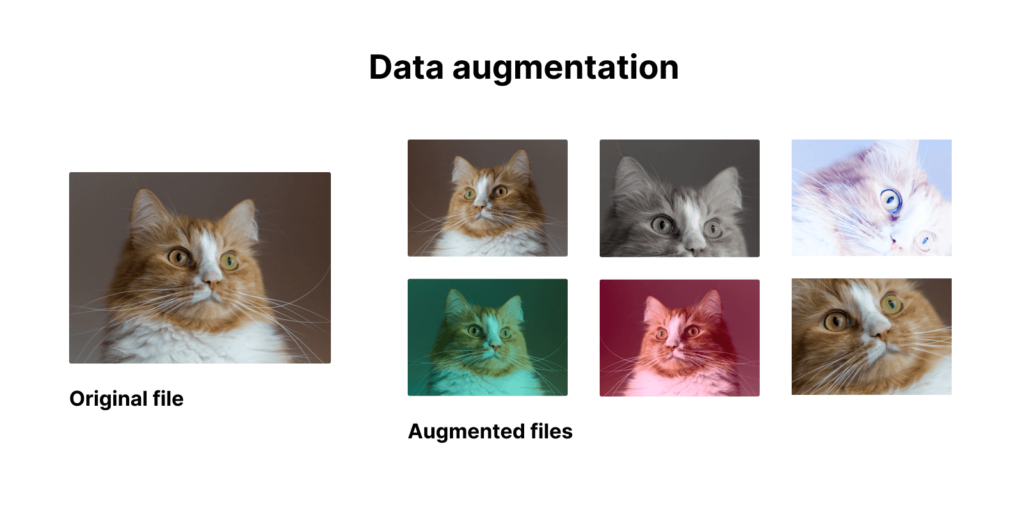

Step 4: Data Augmentation (Optional)

Data augmentation is an optional but powerful technique used to artificially increase the size and diversity of a dataset. It is particularly useful in situations where the available data is limited or imbalanced. The process involves creating modified versions of existing data to help models generalize better.

Why Use Data Augmentation?

In many real-world scenarios, the amount of labeled data may be insufficient to train a model effectively. Additionally, imbalanced datasets, where certain classes are overrepresented, can cause models to struggle with minority classes. Data augmentation addresses these issues by creating new training examples and providing models with more varied data to learn from.

Common Data Augmentation Techniques:

- Image Data Augmentation:

    - Techniques like flipping, rotating, cropping, and applying color filters to existing images are common. These transformations allow the model to see the same image from different perspectives.

    - Example: For a model tasked with recognizing handwritten digits, augmenting the images by rotating or stretching them can help the model generalize better to different handwriting styles.

- Text Data Augmentation:

    - In natural language processing (NLP), text augmentation can involve rearranging sentences, replacing words with synonyms, or generating new text from existing samples.

    - Example: In a sentiment analysis model, replacing words like "happy" with synonyms like "joyful" or "content" can create additional training samples without changing the overall meaning of the text.

- Audio Data Augmentation:

    - Techniques such as adding background noise, altering the pitch or speed of audio clips, and shifting the start and end points of audio can provide more training samples for models that work with speech or sound data.

    - Example: In a speech recognition system, slightly altering the pitch or speed of audio recordings can help the model learn to recognize speech under varying conditions.

- Synthetic Data:

    - Synthetic data can be particularly useful in domains where acquiring large amounts of real data is expensive or time-consuming, such as autonomous vehicle training.

    - Example: In a factory setting, real-world data on extreme events such as accidents or machine malfunctions is scarce and sensitive, because such incidents are rare. Instead of relying on actual incident data, teams can generate synthetic data that simulates these events, enabling better model training and preparedness without compromising safety or privacy.
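Two of the techniques above, image flipping and synonym replacement, can be sketched with the standard library. Images are modeled as plain lists of pixel rows for simplicity, and the synonym table is a hypothetical hand-made stand-in (a real pipeline might draw on a thesaurus such as WordNet); punctuation handling is omitted.

```python
import random


def flip_horizontal(image):
    """Mirror a 2-D image (list of pixel rows) left to right."""
    return [list(reversed(row)) for row in image]


def synonym_replace(sentence, synonyms, p=0.5, rng=None):
    """Replace each word that has known synonyms with probability p."""
    rng = rng or random.Random()
    out = []
    for word in sentence.split():
        options = synonyms.get(word.lower())
        if options and rng.random() < p:
            out.append(rng.choice(options))
        else:
            out.append(word)
    return " ".join(out)


# Hypothetical synonym table for illustration only.
SYNONYMS = {"happy": ["joyful", "content"], "great": ["excellent", "superb"]}

print(flip_horizontal([[1, 2, 3], [4, 5, 6]]))  # [[3, 2, 1], [6, 5, 4]]
rng = random.Random(0)  # fixed seed so augmentations are reproducible
print(synonym_replace("The service was great and I am happy", SYNONYMS, p=1.0, rng=rng))
```

Augmentations should be chosen per domain (a mirrored digit or letter may no longer be a valid sample) and applied only to the training split, so near-copies of test examples never leak into training.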

Benefits:

- Increased Diversity: Data augmentation allows models to learn from a broader range of examples, which improves generalization to new, unseen data.

- Cost-Effective: Rather than collecting new data, augmenting existing data is a cost-effective way to expand datasets.

- Handling Imbalanced Datasets: In situations where one class is significantly underrepresented, data augmentation can help balance the dataset, leading to better model performance.

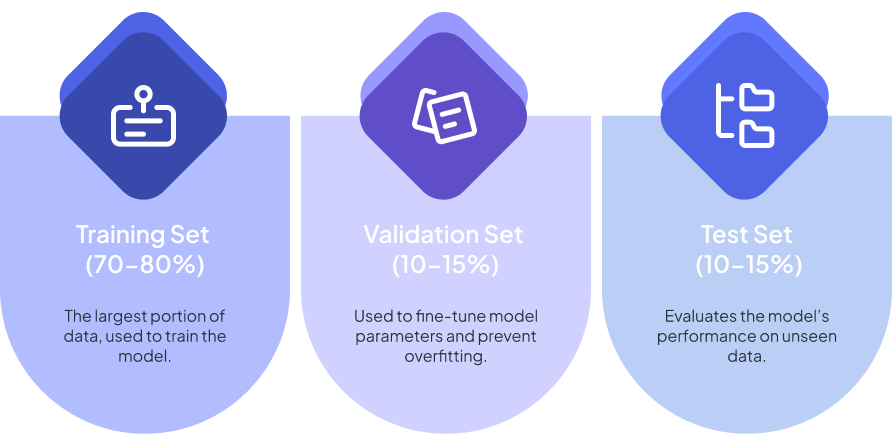

Step 5: Data Splitting

To evaluate the performance of a machine learning model, it is essential to divide the data into training, validation, and test sets.

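Data Split:

- Training set: used to fit the model's parameters.

- Validation set: used to tune hyperparameters and compare candidate models during development.

- Test set: held out until the end to estimate how the final model performs on unseen data.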

Best Practice:

Ensure that the data split is representative of the overall dataset to avoid biased model performance. Stratified sampling may be required if the data contains imbalanced classes.
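A stratified split can be sketched by grouping example indices by class, shuffling within each class, and cutting each group by the same fractions. The 70/15/15 proportions and the fraud-style labels are assumptions; scikit-learn users can get the same effect with `train_test_split(..., stratify=labels)` applied twice.

```python
import random
from collections import defaultdict


def stratified_split(labels, fractions=(0.7, 0.15, 0.15), seed=42):
    """Return (train, val, test) index lists that preserve class proportions."""
    if abs(sum(fractions) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    rng = random.Random(seed)  # fixed seed makes the split reproducible
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    train, val, test = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_train = round(len(indices) * fractions[0])
        n_val = round(len(indices) * fractions[1])
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


labels = ["legit"] * 80 + ["fraud"] * 20  # hypothetical imbalanced labels
train, val, test = stratified_split(labels)
print(len(train), len(val), len(test))  # 70 15 15
```

Random splitting assumes examples are independent; for time-series data, a chronological split (train on the past, test on the future) is usually more appropriate.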

Step 6: Data Quality Assessment

The quality of the data is crucial for the success of the ML model. Data that is incomplete, biased, or unrepresentative can lead to incorrect predictions and biased outcomes.

Common Issue:

- Bias and class imbalance: Bias can occur when certain classes or demographic groups are overrepresented in the data. When a dataset is imbalanced, with one class dominating (e.g., non-fraudulent transactions far outnumbering fraudulent ones), the model may struggle to predict the minority class accurately, reinforcing biased patterns.

Ensuring data quality through proper checks and adjustments helps the model generalize better, reducing the risk of overfitting and improving performance on new data.
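A first-pass quality check can be automated with a small report on class balance, missing values, and exact duplicates. The sketch assumes records are dicts with hashable values and a known label field; the field names and rows are hypothetical, and what counts as "too imbalanced" is a project-specific judgment.

```python
from collections import Counter


def quality_report(rows, label_key):
    """Summarize class balance, missing values, and exact duplicate rows."""
    n = len(rows)
    label_counts = Counter(r.get(label_key) for r in rows)
    missing = Counter(k for r in rows for k, v in r.items() if v is None)
    seen, duplicates = set(), 0
    for r in rows:
        key = tuple(sorted(r.items()))  # order-independent row fingerprint
        if key in seen:
            duplicates += 1
        seen.add(key)
    return {
        "rows": n,
        "label_distribution": {k: v / n for k, v in label_counts.items()},
        "imbalance_ratio": max(label_counts.values()) / min(label_counts.values()),
        "missing_by_field": dict(missing),
        "duplicate_rows": duplicates,
    }


rows = [
    {"amount": 20, "label": "legit"},
    {"amount": 35, "label": "legit"},
    {"amount": None, "label": "legit"},
    {"amount": 20, "label": "legit"},  # exact duplicate of the first row
    {"amount": 900, "label": "fraud"},
]
report = quality_report(rows, label_key="label")
print(report["imbalance_ratio"], report["duplicate_rows"])  # 4.0 1
```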

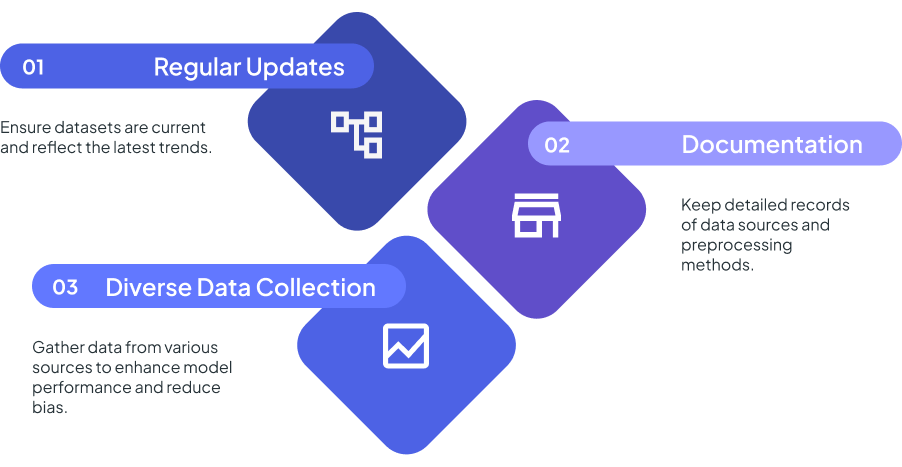

Step 7: Data Documentation

Documenting the data preparation process is essential for ensuring transparency, reproducibility, and collaboration. Clear documentation allows teams to track decisions, methods, and changes throughout the project lifecycle.

Key Documentation Points:

- Data Sources: Clearly record where and how the data was collected.

- Preprocessing Methods: Document how the data was cleaned, transformed, and labeled to provide context for future model improvements.
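One lightweight way to keep this documentation next to the data is a machine-readable dataset card. The sketch below is in the spirit of dataset cards but uses a hypothetical schema (the field names are assumptions, not a standard); steps are logged in the order they are applied so the pipeline can be reproduced.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class DatasetCard:
    """Minimal, hypothetical record of how a training dataset was produced."""
    name: str
    version: str
    sources: List[str] = field(default_factory=list)        # where and how data was collected
    preprocessing: List[str] = field(default_factory=list)  # ordered cleaning/transform steps
    labeling: str = ""                                      # who labeled it, with which guidelines
    known_limitations: List[str] = field(default_factory=list)

    def log_step(self, description: str) -> None:
        """Append a preprocessing step in the order it was applied."""
        self.preprocessing.append(description)

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2)


card = DatasetCard(name="product-reviews", version="1.0", sources=["internal order database"])
card.log_step("removed duplicate reviews")
card.log_step("imputed missing ratings with the median")
card.known_limitations.append("reviews are English-only")
print(card.to_json())
```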

Best Practices for Managing Training Data

Effective management of training data includes:

Challenges in Training Data Preparation and Their Solutions

Collecting and preparing high-quality training data involves several challenges:

| Challenge | Description | Impact | Solutions |
|---|---|---|---|
| Data Bias and Fairness | Biases can occur due to non-representative sampling, flawed data collection methods, or biased annotations. This results in models that may perform well for some groups but poorly for others. | Results in skewed outcomes and ethical issues. | Implement bias detection tools, use diverse datasets, and apply fairness algorithms. |
| Privacy and Ethical Concerns | Handling sensitive or personal data involves adhering to privacy regulations and ethical standards. Failure to do so can lead to breaches of confidentiality and misuse of data. | Requires strict compliance with data protection regulations. | Use data anonymization, secure data storage, and comply with regulations like GDPR. |
| Data Drift and Concept Drift | Data drift refers to changes in the statistical properties of the input data over time. Concept drift occurs when the relationship between the input data and the target variable changes. | Can lead to decreased model performance and reliability. | Implement continuous monitoring, adapt models with incremental learning, and retrain periodically. |
| Scalability Issues | Managing and processing large volumes of data can be challenging, requiring significant computational resources and efficient data management strategies. | Challenges in processing speed and resource management. | Utilize scalable data processing frameworks and cloud-based solutions for efficient management. |
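Continuous monitoring for data drift can start with a per-feature distribution comparison. The sketch below computes the Population Stability Index (PSI) between a reference (training-time) sample and a recent sample, using equal-width bins for simplicity (quantile bins are also common). A commonly quoted rule of thumb treats PSI above roughly 0.25 as a significant shift, but thresholds should be tuned per use case. PSI only detects data drift; concept drift requires monitoring labels or model performance.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a reference sample and a new sample.

    Bin edges come from the reference data's range; values outside that
    range are clamped into the edge bins. Larger values mean more shift.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # eps avoids log(0) and division by zero for empty bins
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


reference = [i / 100 for i in range(100)]  # hypothetical training-time feature values
shifted = [v + 0.5 for v in reference]     # same feature after a simulated shift
print(psi(reference, reference))           # 0.0
print(round(psi(reference, shifted), 2))
```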

The Future of Training Data: Key Data Types and Trends

The future of training data is driven by innovations aimed at improving data collection, scalability, and quality. To meet the growing demands of artificial intelligence and machine learning systems, a variety of new data types and emerging trends are transforming the field.

1. Key Data Types in the Future of Training Data

1.1 Synthetic Data

Synthetic data mimics the statistical properties of real-world data and is increasingly used due to its scalability and cost-effectiveness. It reduces reliance on scarce or expensive real-world data and addresses privacy concerns, making it valuable in fields like autonomous vehicles and healthcare. However, ensuring that synthetic data is realistic and captures real-world complexity remains a challenge.

1.2 IoT Data

Data from IoT devices, like smart appliances and wearables, will be key for training adaptive AI models. In smart homes, IoT data can improve energy efficiency, while in healthcare, wearables provide real-time health insights. The challenge lies in processing the vast amount of data generated and dealing with the lack of standardization across devices.

1.3 Multimodal Data

Multimodal data, which integrates text, images, and audio, is critical for improving model performance in areas like healthcare and autonomous vehicles. For example, healthcare models can analyze medical records and images together, while autonomous vehicles combine video and sensor data. However, integrating these data types requires advanced architectures and computational resources.

2. Key Trends Shaping the Future of Training Data

2.1 Privacy-Preserving Data Techniques and Ethics

As data privacy regulations like General Data Protection Regulation (GDPR) in Europe and the California Consumer Privacy Act (CCPA) in the U.S. continue to evolve, organizations must adopt privacy-preserving techniques for collecting and using training data. Techniques such as federated learning and differential privacy are being developed to balance the need for high-quality training data with the protection of individual privacy.

- Federated Learning: Enables training on decentralized data across devices without transferring the data to a central server. This reduces the risk of data exposure and privacy breaches.

- Differential Privacy: Adds carefully calibrated statistical noise to data, query results, or model training so that the output reveals very little about any single individual, making it hard to trace results back to a specific person.
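The classic illustration of differential privacy is the Laplace mechanism for a count query: adding or removing one person changes a count by at most 1, so adding Laplace noise with scale 1/ε gives ε-differential privacy for that single query. The sketch below is educational only and assumes nothing beyond the standard library; naive floating-point noise has known weaknesses, so production systems should use vetted libraries such as OpenDP.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon, rng=None):
    """Release a count with epsilon-differential privacy (Laplace mechanism).

    A count has sensitivity 1, so noise with scale 1/epsilon suffices.
    Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)


ages = [23, 35, 41, 29, 62, 57, 33, 48]  # hypothetical sensitive attribute
noisy = private_count(ages, lambda a: a >= 40, epsilon=0.5, rng=random.Random(0))
print(f"noisy count of people aged 40+: {noisy:.1f}")
```

Each additional query on the same data consumes more of the privacy budget, which is why real deployments track cumulative ε.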

Ethical AI Development: In addition to privacy, there is a growing emphasis on developing fair and unbiased AI systems. Ensuring that training data is diverse and representative of different populations is crucial to preventing biased outcomes in AI applications.

- Bias Audits: Companies are increasingly conducting bias audits of their training datasets to identify and address potential sources of bias before training their models.

2.2 Feature Stores

Feature stores are becoming a key innovation in the management of training data, enabling better reuse and organization of data features across different models. Feature stores allow data scientists to store, manage, and share features—pre-engineered variables used to train models—across teams, enhancing collaboration and consistency.

- Benefits:

    - Improves efficiency by allowing teams to reuse features rather than create new ones for each project.

    - Ensures consistent feature use across models, reducing discrepancies in model performance.

- Challenges: Implementing feature stores at scale requires robust infrastructure and collaboration between data scientists and ML engineers.
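The core idea, one shared definition per feature reused by every model, can be shown with a toy in-memory registry. This is a hypothetical sketch, not the API of any real feature store such as Feast, which also handles storage, point-in-time correct retrieval, and online serving; the feature names and record fields are made up.

```python
from datetime import datetime


class FeatureRegistry:
    """Toy, in-memory stand-in for a feature store's shared feature definitions."""

    def __init__(self):
        self._features = {}

    def register(self, name, description):
        """Decorator that records a feature function under a unique, shared name."""
        def decorator(fn):
            if name in self._features:
                raise ValueError(f"feature {name!r} is already defined")
            self._features[name] = {"fn": fn, "description": description}
            return fn
        return decorator

    def compute(self, names, record):
        """Compute the requested features for one raw record."""
        return {n: self._features[n]["fn"](record) for n in names}


registry = FeatureRegistry()


@registry.register("order_hour", "Hour of day (0-23) when the order was placed")
def order_hour(record):
    return datetime.fromisoformat(record["ordered_at"]).hour


@registry.register("basket_size", "Number of items in the order")
def basket_size(record):
    return len(record["items"])


raw = {"ordered_at": "2024-05-01T14:30:00", "items": ["a", "b", "c"]}
print(registry.compute(["order_hour", "basket_size"], raw))  # {'order_hour': 14, 'basket_size': 3}
```

Because both training pipelines and serving code call the same registered functions, a feature cannot quietly mean different things in different models.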

2.3 Synthetic Data Expansion

As the demand for large-scale datasets grows, synthetic data will become increasingly prevalent.

Emerging applications include robotics, where synthetic data enables robots to be trained on tasks such as object recognition, grasping, and manipulation without requiring extensive real-world experimentation.

2.4 Data Marketplaces and Data Versioning

In the future, data marketplaces are expected to grow, providing platforms where organizations can buy and sell high-quality datasets. These marketplaces will offer pre-labeled, industry-specific datasets, streamlining the data collection process.

- Data Versioning: As datasets become more complex, data versioning—the ability to track and manage different iterations of datasets—will be critical for reproducibility and collaboration. This allows organizations to update their models with new data while retaining access to earlier versions for comparison and verification.
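A basic building block of data versioning is a content-based version ID: hash a canonical serialization of the data so that identical content always gets the same ID and any change produces a new one. This is a minimal sketch assuming JSON-serializable records; dedicated tools such as DVC apply the same idea to files at scale.

```python
import hashlib
import json


def dataset_version(records):
    """Return a short, content-based version ID for JSON-serializable records.

    Each record is serialized canonically (sorted keys, fixed separators) and
    the lines are sorted, so the ID ignores dict key order and row order.
    """
    lines = sorted(json.dumps(r, sort_keys=True, separators=(",", ":")) for r in records)
    digest = hashlib.sha256("\n".join(lines).encode("utf-8")).hexdigest()
    return digest[:12]


v1 = dataset_version([{"id": 1, "label": "cat"}, {"id": 2, "label": "dog"}])
v1_reordered = dataset_version([{"label": "dog", "id": 2}, {"label": "cat", "id": 1}])
v2 = dataset_version([{"id": 1, "label": "cat"}, {"id": 2, "label": "cat"}])  # one label fixed
print(v1 == v1_reordered, v1 == v2)  # True False
```

Ignoring row order is a design choice; for sequential data where order carries meaning, drop the `sorted` call so reordering counts as a change.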

2.5 Expansion of Interdisciplinary Collaboration

Collaboration among experts from various fields, such as data scientists, domain specialists, ethicists, and software engineers, is expected to grow, so teams can address the different aspects of data collection, annotation, and validation more effectively.

For instance, in the medical imaging sector, radiologists can provide insights into the nuances of diagnostic imaging, ensuring that the annotated data accurately reflects critical features like tumor boundaries.

Conclusion

High-quality training data is essential; without it, ML models can perform poorly, much like a ship without a compass. Ensuring high quality, diversity, and accuracy in training data is crucial for developing robust AI systems. As technology advances, the demand for quality training data will continue to drive innovations in machine learning.