Text Labeling Services for ML

Unidata offers professional Text Labeling Services, delivering precise and comprehensive annotations of text data to enhance natural language processing (NLP) models and text-based applications across various industries. Our expert annotators meticulously label text with relevant tags, categories, and annotations, ensuring the creation of high-quality training datasets that drive optimal model performance.

- 24/7*

- 6+ years of experience with various projects

- 79% extra growth for your company

What is Text Labeling?

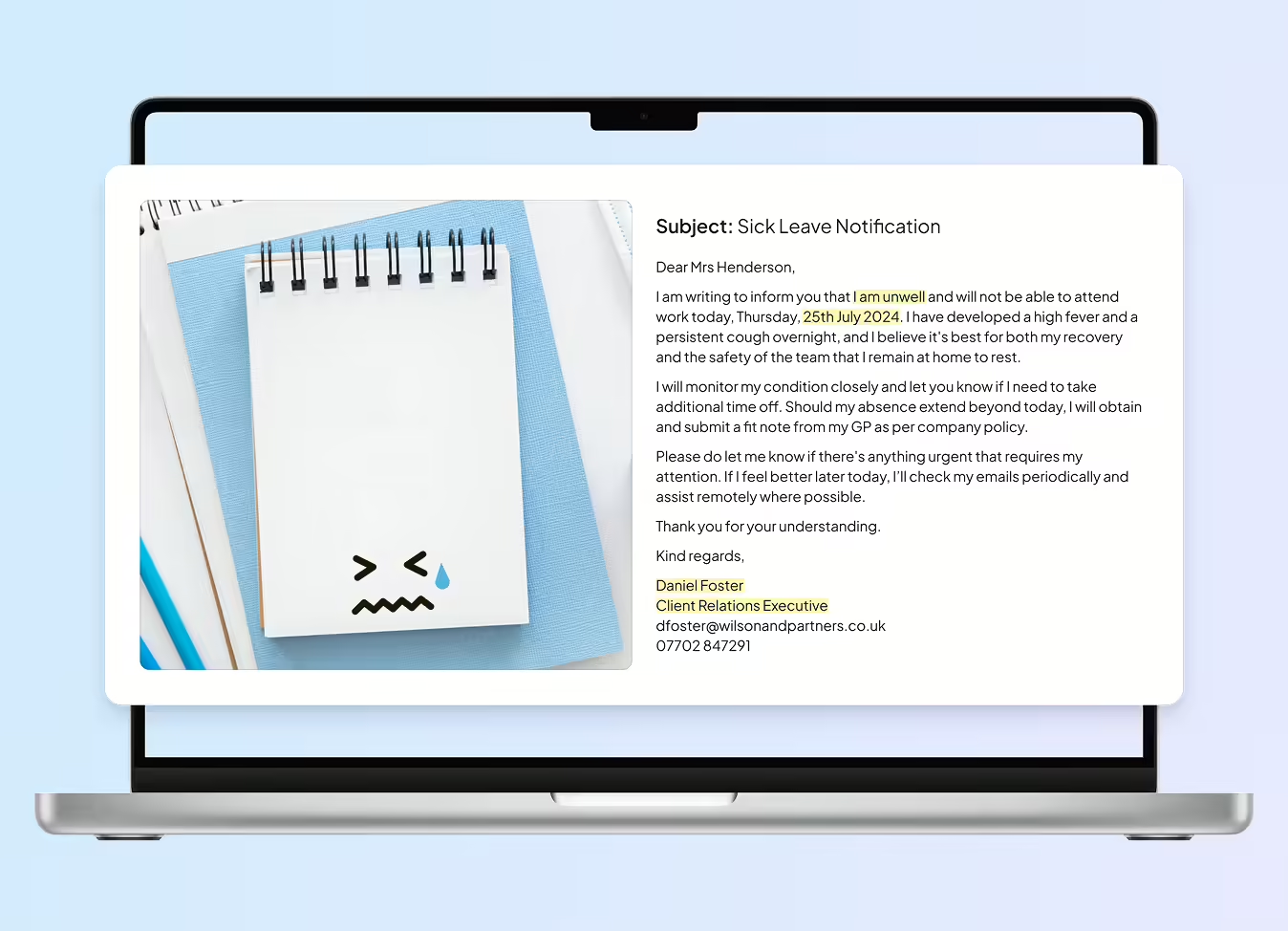

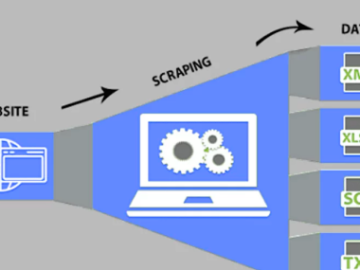

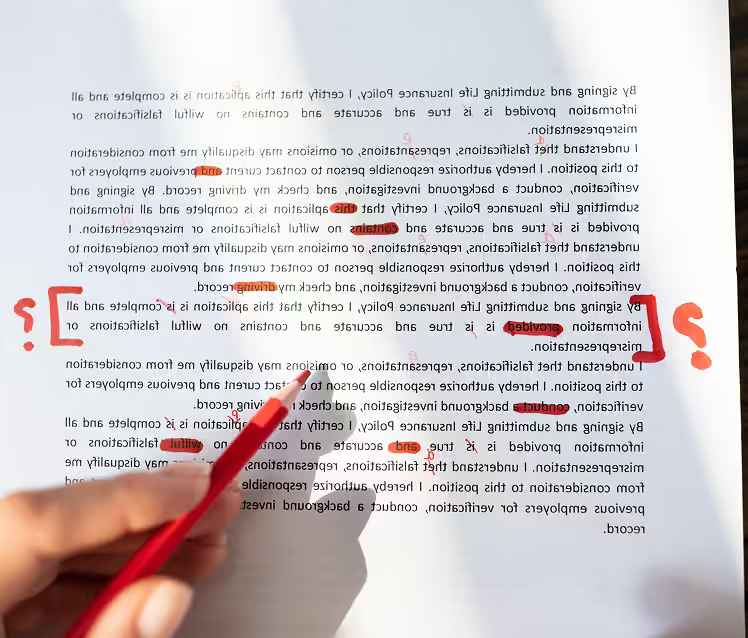

Text labeling is the process of annotating text data with relevant tags, categories, or labels to prepare it for machine learning and natural language processing (NLP) applications. This essential step involves identifying key elements within the text, such as entities, sentiments, and topics, enabling algorithms to learn from and understand the underlying information. High-quality text labeling is crucial for improving the performance of NLP models, enhancing tasks like sentiment analysis, text classification, and information extraction.

How We Deliver Text Labeling Services

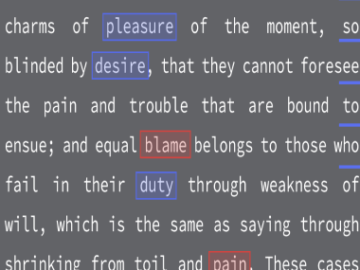

Best software for text labeling tasks

Types of Text Labeling Services

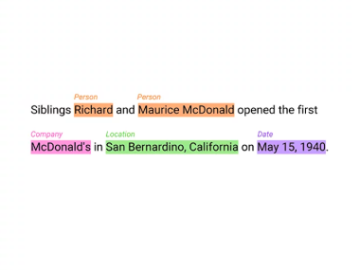

Named Entity Recognition (NER)

Named Entity Recognition involves identifying and labeling entities such as names of people, organizations, locations, dates, and other specific information within text. This form of labeling is widely used in tasks like document analysis, customer support systems, and legal document processing.
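To illustrate, entity labels are commonly stored as character-offset spans over the source text. The following is a minimal sketch under stated assumptions: the `EntitySpan` record, the `find_span` helper, the label names, and the sample sentence are all hypothetical and do not describe any specific annotation tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EntitySpan:
    start: int  # inclusive character offset
    end: int    # exclusive character offset
    label: str  # hypothetical label set: PERSON, ORG, DATE


def find_span(text: str, phrase: str, label: str) -> EntitySpan:
    """Build a span for the first occurrence of `phrase` in `text`."""
    start = text.index(phrase)
    return EntitySpan(start, start + len(phrase), label)


def span_text(text: str, span: EntitySpan) -> str:
    """Return the surface text covered by a span."""
    return text[span.start:span.end]


def has_overlap(spans: list) -> bool:
    """Check whether any two spans overlap (a common quality check)."""
    ordered = sorted(spans, key=lambda s: s.start)
    return any(a.end > b.start for a, b in zip(ordered, ordered[1:]))


text = "Maria Lopez joined Acme Corp on 3 May 2021."
spans = [
    find_span(text, "Maria Lopez", "PERSON"),
    find_span(text, "Acme Corp", "ORG"),
    find_span(text, "3 May 2021", "DATE"),
]

for span in spans:
    print(span.label, span_text(text, span))
```

Storing offsets rather than copied strings keeps each label tied to an exact position, which makes overlap checks like `has_overlap` straightforward.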

Text Classification

Text classification is the process of assigning a predefined category or label to entire texts or sections of text. This service is commonly used for spam detection, sentiment analysis, topic categorization, and document organization.
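As a rough sketch, a classification label can be stored as one JSON record per text and validated against the predefined category set. The label names and the `make_record` helper below are illustrative assumptions, not a fixed schema.

```python
import json

# Hypothetical label set for a spam-detection project.
LABELS = {"spam", "not_spam"}


def make_record(text: str, label: str) -> str:
    """Serialize one labeled example as a JSON line, rejecting unknown labels."""
    if label not in LABELS:
        raise ValueError(f"unknown label: {label!r}")
    return json.dumps({"text": text, "label": label})


print(make_record("Win a free prize now", "spam"))
```

Rejecting labels outside the agreed set at write time is one simple way to keep a training dataset consistent.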

Sentiment Analysis

Sentiment analysis involves labeling text to identify the emotional tone expressed, such as positive, negative, or neutral sentiment. It is often used in customer feedback analysis, product reviews, and social media monitoring.

Part-of-Speech (POS) Tagging

POS tagging is the process of labeling words in a text based on their grammatical role (e.g., noun, verb, adjective). It is used in natural language processing (NLP) tasks like syntactic parsing and machine translation.
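For illustration, POS labels can be kept as token and tag pairs and exported one token per line. This sketch assumes Universal POS-style tag names and a simple tab-separated layout loosely modeled on CoNLL-style files; the `to_tsv` helper and the sample sentence are hypothetical.

```python
# Each pair is (token, tag); the tags follow Universal POS-style names.
tagged = [
    ("The", "DET"),
    ("annotator", "NOUN"),
    ("labels", "VERB"),
    ("short", "ADJ"),
    ("sentences", "NOUN"),
    (".", "PUNCT"),
]


def to_tsv(pairs: list) -> str:
    """Render tagged tokens as 'index<TAB>token<TAB>tag' lines, 1-indexed."""
    return "\n".join(
        f"{i}\t{token}\t{tag}" for i, (token, tag) in enumerate(pairs, start=1)
    )


print(to_tsv(tagged))
```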

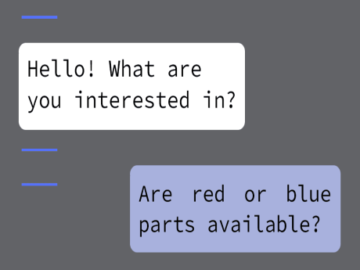

Intent Classification

Intent classification is used in conversational AI systems to label text inputs according to the user’s intent, such as booking a flight, asking for information, or placing an order. It’s critical for chatbot training and voice assistant development.

Keyword and Keyphrase Labeling

This type of labeling involves identifying important keywords or keyphrases in a text that summarize its main ideas or concepts. It is often used for search engine optimization (SEO), content indexing, and information retrieval systems.

Relation Extraction

Relation extraction identifies and labels relationships between entities in a text, such as connections between people, organizations, or products. This is used in tasks like knowledge graph creation and database population.
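One possible way to represent relation labels is as typed links between entity identifiers, which can then be flattened into triples for a knowledge graph. The `Entity` and `Relation` records, the `WORKS_FOR` label, and the sample names below are assumptions made for this sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entity:
    id: str
    text: str
    label: str


@dataclass(frozen=True)
class Relation:
    head: str   # id of the source entity
    tail: str   # id of the target entity
    label: str  # hypothetical relation type


entities = [
    Entity("e1", "Maria Lopez", "PERSON"),
    Entity("e2", "Acme Corp", "ORG"),
]
relations = [Relation("e1", "e2", "WORKS_FOR")]


def to_triples(entities: list, relations: list) -> list:
    """Convert labeled relations into (head text, relation, tail text) triples."""
    by_id = {entity.id: entity for entity in entities}
    return [
        (by_id[r.head].text, r.label, by_id[r.tail].text) for r in relations
    ]


print(to_triples(entities, relations))
```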

Coreference Resolution

Coreference resolution involves labeling words or phrases in a text that refer to the same entity. For example, identifying that “he” and “John” in a sentence refer to the same person. It is useful in improving the understanding of text meaning in NLP applications.
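The "he" and "John" example can be sketched as a cluster of character spans that all point to the same entity. The sentence and the cluster layout below are illustrative assumptions rather than a standard format.

```python
text = "John said he would call."

# Each cluster lists (start, end) character spans that refer to one entity.
clusters = [
    [(0, 4), (10, 12)],  # "John" and "he"
]


def cluster_mentions(text: str, cluster: list) -> list:
    """Return the surface strings of every mention in a cluster."""
    return [text[start:end] for start, end in cluster]


print(cluster_mentions(text, clusters[0]))
```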

Tokenization

Tokenization involves breaking down a text into smaller units such as words, sentences, or subwords. This is an essential preprocessing step in many NLP tasks such as machine translation, text summarization, and speech recognition.
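A minimal word-level tokenizer can be sketched with a regular expression that keeps character offsets, so later labels can point back to the original text. This is a simplified assumption for illustration; production tokenizers, and subword tokenizers in particular, behave differently.

```python
import re

# Match runs of word characters, or any single non-space punctuation mark.
TOKEN_RE = re.compile(r"\w+|[^\w\s]")


def tokenize(text: str) -> list:
    """Split text into (token, start, end) tuples with character offsets."""
    return [(m.group(), m.start(), m.end()) for m in TOKEN_RE.finditer(text)]


for token, start, end in tokenize("Hello, world!"):
    print(token, start, end)
```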

Document Categorization

Document categorization labels entire documents or large sections of text with specific categories based on content. This service is useful for organizing large datasets, creating content management systems, and sorting legal or academic documents.

Entity Linking

Entity linking is the process of linking identified entities in a text to a specific entry in a database or knowledge base. For example, recognizing that “Apple” refers to the tech company and linking it to its correct entry in a knowledge graph.
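The "Apple" example can be sketched as a mention span that carries the identifier of a knowledge base entry. The knowledge base, its `kb:` identifiers, and the `resolve` helper are hypothetical and stand in for whatever database a project actually uses.

```python
# Hypothetical knowledge base with two candidate entries for "Apple".
kb = {
    "kb:apple_inc": {"name": "Apple Inc.", "type": "company"},
    "kb:apple_fruit": {"name": "apple", "type": "fruit"},
}

text = "Apple unveiled a new laptop."

# The annotator marks the mention span and picks the matching entry.
links = [{"start": 0, "end": 5, "kb_id": "kb:apple_inc"}]


def resolve(text: str, link: dict, kb: dict) -> tuple:
    """Return (mention text, canonical name) for one entity link."""
    mention = text[link["start"]:link["end"]]
    return mention, kb[link["kb_id"]]["name"]


print(resolve(text, links[0], kb))
```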

Aspect-Based Sentiment Analysis

This service labels specific aspects of a product or service within a text with sentiment. For instance, in a product review, different sentiments may be expressed about the price, quality, or durability. Aspect-based sentiment analysis provides more granular insight into customer opinions.
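As a sketch of how aspect-level labels might be stored and aggregated, each review below carries a list of aspect and sentiment pairs that are then counted per aspect. The review texts, field names, and `summarize` helper are invented for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical labeled reviews: one sentiment label per mentioned aspect.
labeled_reviews = [
    {
        "text": "Great quality, but the price is too high.",
        "aspects": [
            {"aspect": "quality", "sentiment": "positive"},
            {"aspect": "price", "sentiment": "negative"},
        ],
    },
    {
        "text": "Far too expensive for what it does.",
        "aspects": [{"aspect": "price", "sentiment": "negative"}],
    },
]


def summarize(reviews: list) -> dict:
    """Count sentiment labels per aspect across all reviews."""
    summary = defaultdict(Counter)
    for review in reviews:
        for item in review["aspects"]:
            summary[item["aspect"]][item["sentiment"]] += 1
    return {aspect: dict(counts) for aspect, counts in summary.items()}


print(summarize(labeled_reviews))
```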

Topic Modeling

Topic modeling is a more complex form of text labeling that identifies and labels underlying topics or themes within large collections of text. It’s useful for content analysis, document clustering, and summarization in large datasets.

Text Summarization Labeling

Text summarization labeling involves identifying key portions of text that capture the main ideas of a document. This is commonly used in news articles, legal documents, and research papers where a concise summary is needed.

Text Labeling Use Cases

01 Finance

AI in finance depends on well-structured text data to detect fraud, process transactions, and analyze financial reports. Labeling bank statements, loan applications, and investment reports helps AI predict creditworthiness and flag unusual activities. Additionally, classifying financial news and market sentiment enables smarter investment decisions.

02 Legal & Compliance

Legal professionals use this service to organize case files, contracts, and court rulings. AI can quickly scan and categorize legal texts, making it easier to retrieve relevant precedents and clauses. Compliance monitoring also benefits from labeled documents, ensuring businesses meet regulatory standards without manual review.

03 Education

Text labeling enhances personalized learning by categorizing textbooks, essays, and study materials based on subject and difficulty level. AI-powered tutoring systems use labeled data to tailor lesson plans to each student’s needs. Additionally, automated grading systems benefit from text annotation, improving feedback accuracy.

04 Healthcare

Text labeling helps train AI to process medical records, research papers, and doctor’s notes more effectively. Categorizing symptoms, diagnoses, and treatments allows AI to identify patterns in patient histories, improving disease prediction and personalized treatment recommendations. Medical chatbot responses also benefit, as AI learns to interpret patient inquiries with greater accuracy.

05 Automotive (Autonomous Vehicles)

AI-powered navigation systems rely on text categorization to understand road signs, vehicle manuals, and regulatory documents. Extracting key details from maintenance logs enables predictive maintenance, reducing breakdowns. Text labeling also plays a role in self-driving car interactions by helping AI interpret traffic reports and driver feedback, supporting safer navigation.

06 Retail & E-commerce

Labeling is essential for optimizing product listings, customer reviews, and chat interactions. Assigning sentiment labels to customer feedback allows AI to gauge satisfaction levels and suggest improvements. Labeled data also enhances product recommendations by analyzing descriptions and user preferences, making online shopping more personalized.

07 Entertainment & Media

Text labeling improves content organization by tagging articles, scripts, and social media posts with relevant categories. AI-driven platforms use this labeled data to recommend movies, news articles, and music based on user interests. Moderating online discussions also becomes more efficient, as AI detects harmful or inappropriate content in real time.

08 Manufacturing

Text labeling supports AI-driven quality control by processing inspection reports, equipment manuals, and safety protocols. Identifying patterns in maintenance logs allows AI to predict machinery failures before they happen. Classifying supplier contracts and compliance documents ensures smoother operations and regulatory adherence.

How It Works: Our Process

A Clear, Controlled Workflow From Brief to Delivery

Text Labeling Cases

Why Companies Trust Unidata’s Services for ML/AI

Share your project requirements, and we handle the rest. Every service is tailored, executed, and compliance-ready, so you can focus on strategy and growth, not operations.


Ready to get started?

Tell us what you need, and we’ll reply within 24 hours with a free estimate.

