Introduction to Named Entity Recognition (NER)

Named Entity Recognition (NER) is a fundamental task in Natural Language Processing (NLP) that involves identifying and categorizing entities within text into predefined classes. These classes typically include names of people, organizations, locations, dates, and other pertinent information. NER plays a critical role in extracting valuable insights from unstructured data, making it easier to analyze and derive meaning from vast amounts of text.

The Importance of NER in NLP

The relevance of NER cannot be overstated. As organizations increasingly rely on data-driven decision-making, the ability to extract structured information from unstructured text becomes crucial.

Whether it's analyzing customer feedback, monitoring social media trends, or streamlining legal documentation, NER empowers businesses to automate processes, uncover insights, and enhance overall efficiency.

What is NER?

Definition of Named Entities Recognition

Named entities can be categorized into various classes, including:

- People: Names of individuals, such as "John Doe" or "Marie Curie."

- Organizations: Names of companies, institutions, or agencies, such as "Google" or "United Nations."

- Dates: Specific dates or time periods, such as "January 1, 2020," or "the 1990s."

- Geographical Locations: Names of places, such as "New York City" or "Mount Everest."

Why Recognize Named Entities?

The practical significance of NER lies in its ability to:

- Automate Processes: By extracting entities, businesses can automate data entry, content categorization, and other manual tasks, freeing up valuable human resources.

- Enable Analytics: NER facilitates the extraction of insights from large volumes of text, allowing organizations to analyze trends, sentiments, and key themes.

- Enhance Information Retrieval: Recognizing entities improves search capabilities, enabling more precise and relevant results in information retrieval systems.

Areas of Application

NER finds applications across various sectors, including:

- Law: Analyzing legal documents to identify parties involved, dates, and relevant statutes.

- Healthcare: Extracting patient information, medical terms, and drug names from clinical notes.

- Finance: Identifying companies, stock symbols, and monetary amounts in financial reports.

- E-commerce: Enhancing product search functionality by recognizing brand names and product categories.

- Search Engines: Improving search algorithms by understanding the context and relationships between entities.

Additional applications include:

- Social Media Monitoring: Analyzing social media content for brand mentions, sentiment analysis, and trend identification.

- Chatbots and Virtual Assistants: Enabling chatbots to understand user queries and provide relevant information based on recognized entities.

- Cybersecurity: Identifying potential threats or malicious entities in security logs and reports.

How Does Named Entity Recognition Work?

Entity Identification

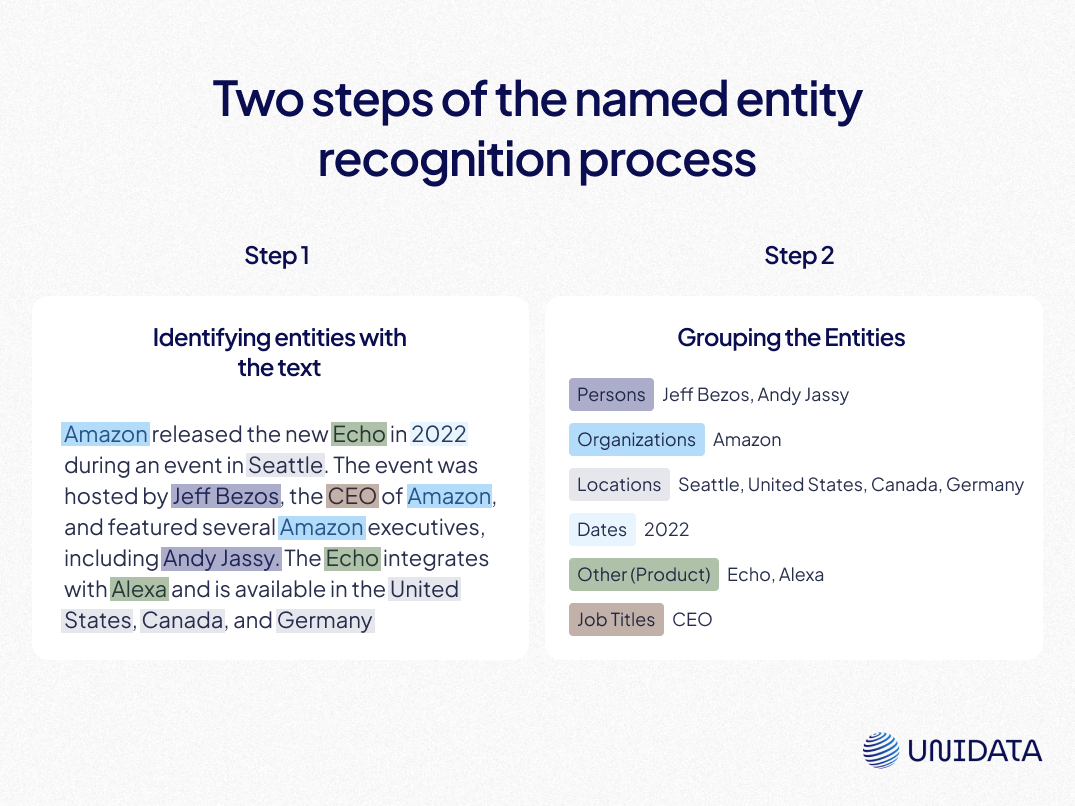

The NER process typically involves two primary steps: identification and categorization.

- Tokenization: The first step in NER is breaking the text into individual words or tokens. This process enables the system to analyze each component of the text independently.

- Part-of-Speech Tagging: After tokenization, the system assigns grammatical categories to each token, identifying whether a word is a noun, verb, adjective, etc. This information aids in determining the function of each token within the sentence.

- Chunking: This step involves grouping tokens into larger phrases or chunks, making it easier to identify entities that consist of multiple words (e.g., "New York City" instead of "New" and "York" separately).

Entity Categorization

Once entities are identified, they are categorized into predefined classes:

- Named Entity Recognition: The system applies machine learning or rule-based methods to classify tokens or chunks as specific entities.

- Entity Disambiguation: This process resolves ambiguity in cases where multiple entities share the same name (e.g., "Washington" can refer to a state, a city, or a person). Advanced NER systems leverage context and additional information to make accurate distinctions.

Example: Entity Breakdown

Consider the following sentence:

"Apple Inc. was founded by Steve Jobs in Cupertino on April 1, 1976."

- Tokenization: ["Apple", "Inc.", "was", "founded", "by", "Steve", "Jobs", "in", "Cupertino", "on", "April", "1", "1976"]

- Part-of-Speech Tagging: [("Apple", NNP), ("Inc.", NNP), ("was", VBD), ("founded", VBN), ("by", IN), ("Steve", NNP), ("Jobs", NNP), ("in", IN), ("Cupertino", NNP), ("on", IN), ("April", NNP), ("1", CD), ("1976", CD)]

- Chunking: [("Apple Inc.", NNP), ("Steve Jobs", NNP), ("Cupertino", NNP), ("April 1, 1976", CD)]

- Named Entity Recognition: The system recognizes and categorizes:

- Apple Inc. → Organization (ORG): This is classified as an organization because "Apple Inc." refers to a corporate entity.

- Steve Jobs → Person (PERSON): This is classified as a person because "Steve Jobs" is the name of an individual.

- Cupertino → Location (GPE): "Cupertino" is recognized as a geographical location (city).

- April 1, 1976 → Date (DATE): This is classified as a date, referring to a specific time.

NER vs. Other NLP Tasks

Comparing NER with Text Classification

While both NER and text classification involve analyzing text, they serve different purposes. Text classification categorizes entire documents into predefined classes (e.g., spam vs. non-spam), whereas NER focuses on identifying and classifying specific entities within the text.

Comparing NER with Entity Linking

Entity linking extends NER by not only identifying entities but also connecting them to their corresponding entries in a knowledge base or database. For example, recognizing "Steve Jobs" as a person is NER, while linking it to an entry with his biography and achievements represents entity linking.

Methods of NER

Dictionary-Based NER

Dictionary-based Named Entity Recognition (NER) relies on predefined lists of entities, such as names of people, organizations, or locations, to identify entities within a text. This is one of the simplest NER approaches, often used in domains where the vocabulary is stable, and the lists of entities remain relatively consistent.

How it works: In dictionary-based NER, the system scans the text and matches tokens (words or phrases) against entries in the predefined dictionary or lookup tables. If a word or phrase matches an entry, it is labeled as an entity. For instance, if “New York” is in the dictionary under locations, any mention of “New York” in the text will be tagged as a location. While easy to implement, this method struggles with variations in naming, such as abbreviations, and cannot recognize new entities not already in the dictionary.

| Pros | Cons |

|---|---|

| Simple and easy to implement | Struggles with variations and misspellings |

| Efficient for specific, static vocabularies | Misses new or previously unseen entities |

| Minimal computational resources needed | Limited scalability and adaptability |

Rule-Based NER

Rule-based NER systems use a set of hand-crafted linguistic rules to identify and label entities. These rules are typically created by linguists or domain experts and are tailored to specific language structures, patterns, or vocabulary, allowing the system to identify entities based on syntax or grammatical cues.

How it works: In rule-based NER, rules are designed to identify patterns in the text that indicate entities, such as capitalized words following titles (“Dr.” or “President”), or certain prepositions that often precede locations. For instance, a rule might tag any capitalized word that follows “President” as a person’s name. Although rule-based systems can be highly accurate within narrow contexts, they require extensive manual tuning and may need frequent updates to adapt to new contexts or evolving language use.

| Pros | Cons |

|---|---|

| High accuracy in controlled contexts | Requires expert knowledge and time to create rules |

| Effective in niche or well-defined domains | Struggles to adapt to new or broad contexts |

| Transparent and explainable | Labor-intensive and difficult to scale |

Machine Learning-Based NER

Machine learning-based NER models use statistical methods and algorithms to learn to recognize entities from labeled datasets. These approaches require supervised learning, meaning they rely on a pre-annotated dataset to “train” the model, teaching it to recognize patterns and features associated with entities.

How it works: Machine learning-based NER typically uses algorithms such as Conditional Random Fields (CRFs) or Support Vector Machines (SVMs) to analyze features of words, such as part of speech, position in the sentence, or surrounding words. The model learns which features are associated with different types of entities and can apply this learned knowledge to identify entities in new text.

Machine learning-based NER provides greater flexibility than dictionary or rule-based approaches, as it can learn complex relationships, but it still depends on high-quality labeled data and may struggle with out-of-vocabulary words or phrases.

| Pros | Cons |

|---|---|

| Can generalize better than rule-based systems | Dependent on high-quality labeled data |

| More adaptable to broader language patterns | May require a significant amount of training data |

| Can handle variation in entity forms | Performance varies with dataset quality |

Deep Learning-Based NER

Deep learning-based NER uses advanced neural network architectures, such as Long Short-Term Memory (LSTM) networks, Convolutional Neural Networks (CNNs), and Transformers, to identify entities in text. These models can automatically learn complex linguistic patterns and dependencies, making them highly effective for NER tasks in varied and complex datasets.

How it works: Deep learning NER models take large amounts of text data and extract entity information by learning patterns in how entities appear within sentences and contexts. For example, LSTMs are adept at capturing sequential relationships, while CNNs can capture local word patterns. Transformer models, like BERT, can process entire sentences in parallel and identify contextual relationships between entities. These models learn to recognize nuanced patterns across vast amounts of data, leading to more accurate and robust NER.

| Pros | Cons |

|---|---|

| High accuracy, especially with large datasets | Requires extensive computational resources |

| Automatically learns complex patter | Needs large amounts of labeled training data |

| Effective in varied and dynamic contexts | Challenging to interpret and explain |

Comparative Table of Models

| Model | NER Application | Key Strength | Example Use Case |

|---|---|---|---|

| LSTM Networks | Maintains word sequence context | Effective for understanding word order | Differentiates "Amazon" (company) from "Amazon" (river) |

| Convolutional Neural Networks (CNNs) | Identifies local text patterns | Good at recognizing context from nearby words | Detects entities like "New York" from close word associations |

| Transformer Models (e.g., BERT) | Captures complex word dependencies | Analyzes entire sentence context at once | Recognizes "Elon Musk" as a person, "Tesla" as a company, and "CEO" as a role within a sentence structure |

Other Deep Learning-Based NER Methods include:

- Unsupervised ML Systems: These systems use clustering to identify entities without labeled data, making them useful for discovering new entities without prior information.

- Bootstrapping Systems: Starting with a small set of labeled data, these systems expand entity recognition iteratively by labeling new entities based on similar contexts.

- Semantic Role Labeling: Beyond identifying entities, this approach captures their roles within sentences (e.g., "who did what"), providing richer contextual understanding.

- Hybrid Systems: By combining multiple NER methods, hybrid systems achieve balanced performance, using rule-based techniques for straightforward cases and machine learning for more complex ones.

What is Text Annotation?

Learn more

Key Aspects of Developing a NER Model

The success of a Named Entity Recognition (NER) model depends heavily on preparing a high-quality, well-annotated dataset. This preparation stage includes critical components:

- Data Collection: Building a robust dataset for NER involves creating a corpora of text that accurately represents the intended use case and linguistic nuances. Here’s a breakdown of effective data collection:

- Creating or Curating Text Corpora: Depending on the domain (e.g., legal, medical, or financial), curate or create a corpus of text sources. For example, legal NER models may require large datasets from case law databases, while social media-focused NER models benefit from Twitter or forum data.

- Diverse and Representative Sources: Gather data from varied sources like news articles, research papers, emails, or social media posts to ensure that the corpus includes a range of language styles, sentence structures, and entity types.

- Domain-Specific Text: To improve the model’s relevance, it’s important to tailor the corpus to include domain-specific terminology and context. For instance, a medical NER model should have texts from medical journals or healthcare records to ensure familiarity with specialized vocabulary.

- Annotation: Once the corpus is collected, annotation converts it into labeled data, marking each entity within the text. High-quality annotation is essential, as mislabeling can negatively impact the model. Annotation involves:

| Annotation Method | Description | Advantages | Disadvantages |

|---|---|---|---|

| Manual Annotation | Human annotators read and mark entities in text | High accuracy and consistency | Time-consuming and labor-intensive |

| Crowdsourced Annotation | Leverages platforms for multiple individuals to annotate | Accelerates the annotation process | Potential variability in quality |

| Automated Annotation | Utilizes pre-trained models to identify and categorize entities | Faster than manual annotation | May lack the accuracy of manual methods |

3. Data Separation: After collection and annotation, the dataset must be split into three distinct subsets:

| Data Separation | Description | Typical Percentage |

|---|---|---|

| Training Data | Used to train the NER model, allowing it to learn to identify and classify entities based on the provided labeled examples. | 70-80% |

| Validation Data | Employed during training to tune hyperparameters and monitor the model’s performance on unseen data, helping to prevent overfitting. | 10-15% |

| Validation Data | Used to evaluate the model’s final performance on completely unseen data, providing an unbiased assessment of its effectiveness in real-world scenarios. | 10-15% |

Overview of Tools and Libraries for NER Model Development

- spaCy: A popular open-source library for advanced NLP tasks, spaCy offers robust support for NER. It includes pre-trained models for various languages and provides an easy-to-use API for custom model training.

- NLTK (Natural Language Toolkit): NLTK is a foundational library for NLP in Python. While it includes basic NER capabilities, it is often used in conjunction with other libraries for more advanced tasks.

- Stanford NER: Developed by the Stanford NLP Group, this tool provides a machine learning-based approach for NER. It allows users to train their models on custom datasets and is widely recognized for its accuracy.

- Transformers: This library has gained immense popularity for its state-of-the-art pre-trained models based on transformers, including BERT, RoBERTa, and GPT. It simplifies the process of fine-tuning these models for specific NER tasks, making it accessible for developers and researchers.

For smaller organizations or those with limited resources, leveraging pre-trained NER models can be more cost-effective than developing models from scratch. By fine-tuning existing models on their data, companies can achieve high accuracy without the extensive labeling and training efforts typically required for custom models.

Intent Annotation for E-commerce

- E-commerce and Retail

- 150,000 user messages

- Ongoing project

Advantages of NER

- Automation of Information Extraction: NER streamlines the extraction of valuable data from unstructured sources, enabling organizations to make informed decisions quickly.

- Insight Extraction from Unstructured Text: By identifying key entities, organizations can analyze trends, customer sentiments, and emerging topics in real time.

- Facilitating Trend Analysis: NER allows organizations to track changes in named entities over time, helping to identify emerging trends and patterns.

- Eliminating Human Error in Analysis: Automated NER reduces the risk of human errors in the analysis process, leading to more reliable outcomes.

- Broad Industry Applicability: NER finds applications in a wide range of industries, from healthcare to finance, enhancing processes across the board.

- Freeing Up Employee Time: Automating the identification of entities allows employees to focus on higher-value tasks that require human intuition and creativity.

- Improving NLP Task Accuracy: By providing a structured understanding of the text, NER enhances the performance of various NLP tasks, including sentiment analysis and content summarization.

Challenges and Issues in Named Entity Recognition

Entity Ambiguity

One of the main challenges in NER is dealing with entities that share the same name but refer to different concepts. For example, "Jordan" can refer to a country, a person, or a brand. Distinguishing between these meanings requires advanced context analysis.

Data Scarcity for Niche Entities

NER systems may struggle with niche entities that are underrepresented in training datasets. This lack of data can lead to lower accuracy for specific applications, particularly in specialized fields.

Multilingual NER

Developing NER systems that work across multiple languages poses a significant challenge. Variations in grammar, syntax, and cultural references require tailored approaches for each language.

Domain Adaptation

Different domains (e.g., finance vs. healthcare) may have unique terminologies and entity types. Adapting NER models to function effectively across various domains is a complex task.

Bias in Data

NER systems can inadvertently inherent biases present in training data. This bias can lead to inaccurate entity recognition and categorization, perpetuating stereotypes or overlooking specific groups.

The Future of NER

Development Prospects

The field of NER is evolving rapidly, driven by advancements in machine learning, deep learning, and natural language understanding. Key trends shaping the future of NER include:

- Integration of Contextual Understanding: As models become better at understanding context and relationships, NER will increasingly be used to extract nuanced information, improving applications like sentiment analysis and relationship mapping.

- Cross-lingual NER: Future developments will likely focus on creating models that can effectively recognize entities in multiple languages, addressing the challenges of multilingual NER and facilitating global applications.

- Real-time NER: With the growing demand for real-time data analysis, NER systems will need to be optimized for speed and efficiency, enabling instant recognition of entities in streaming data sources like social media or news feeds.

- Ethics and Bias Mitigation: As the impact of bias in AI systems becomes more recognized, efforts to mitigate bias in NER models will be paramount. This involves ensuring diverse training data and developing frameworks for ethical AI use.

- Interdisciplinary Applications: The integration of NER with other AI technologies, such as computer vision and robotics, will lead to innovative applications. For example, combining NER with image recognition can enhance understanding in scenarios where visual and textual data intersect, such as social media posts containing both text and images.

Conclusion

Named Entity Recognition is a critical component of modern NLP, enabling organizations to extract valuable insights from unstructured text. As the field continues to advance with the development of more sophisticated models and techniques, NER will play an increasingly vital role in transforming data into actionable knowledge.

By embracing these advancements, businesses can enhance their operations, improve decision-making processes, and gain a competitive edge in an increasingly data-driven world.

References

- Manning, C. D., Surdeanu, M., Bauer, J., Finkel, J. R., Bethard, S., & McCallum, A. (2014). The Stanford CoreNLP Natural Language Processing Toolkit. Proceedings of 52nd Annual Meeting of the Association for Computational Linguistics: System Demonstrations, 55-60.

- Devlin, J., Chang, M. W., Lee, K., & Toutanova, K. (2018). BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv preprint arXiv:1810.04805.

- Ratinov, L., & Roth, D. (2009). Design Challenges and Misconceptions in Named Entity Recognition. Proceedings of the Thirteenth Conference on Computational Natural Language Learning, 147-155.

- Honnibal, M., & Montani, I. (2017). spaCy 2: Natural Language Understanding with Bloom Embeddings, Convolutional Neural Networks and Incremental Parsing. To appear.

- Brockett, C., & Dolgov, I. (2014). NER in a Crowdsourced Context: When to Use Crowdsourcing and When to Go In-House. Proceedings of the 2nd Workshop on Crowdsourcing for NLP, 1-9.