What Is Sensor Fusion?

Think of sensor fusion as the AI equivalent of having five senses instead of one. Each sensor gives a different view of reality—some see, some feel, some listen. On their own, each is fallible. Together, they form a complete picture that machines can actually trust.

Here’s how it breaks down:

- Cameras provide rich, high-resolution visuals—but they struggle in fog or darkness.

- LiDAR gives you precise 3D maps—but can be expensive and finicky in rain or snow.

- Radar works in harsh weather—but can’t see small details.

- IMUs detect motion, tilt, and acceleration—but can drift over time.

- GPS gives you global position—until you go underground or into a tunnel.

By fusing all these sources, the system compensates for weaknesses and reinforces strengths. The result? More robust, accurate, and context-aware decisions.

If a car sees a cyclist with its camera, confirms the distance via LiDAR, tracks speed using radar, and corrects for road slope using its IMU—it can make the kind of quick, nuanced judgment calls that save lives.

In short: sensor fusion makes machines situationally aware—something raw sensor data can’t do alone.

Why It Matters for AI

Let’s be blunt—real-world data is messy. Roads are wet. Rooms are dim. Humans move unpredictably. One bad reading from a single sensor can break your model’s decision pipeline. That’s why AI systems built for the real world—whether it’s an autonomous vehicle or a smart robot arm—need backup.

Sensor fusion is that backup.

Here’s what it brings to the table:

- Accuracy

Cross-checking data helps reduce false positives and catch outliers. If LiDAR says “wall” but the camera says “glass door,” the system doesn’t panic: it analyzes both and gets it right.

- Redundancy

If a sensor fails or gets occluded, others can step in. This kind of fault tolerance is crucial for safety-critical systems.

- Environmental adaptability

Different sensors thrive in different conditions. Cameras fail in the dark; radar excels there. GPS can drop out in tunnels; IMUs fill the gap. Fusion lets the system adjust on the fly.

- Better decision-making

You’re not just sensing the world; you’re understanding it. That means smarter planning, smoother movement, and fewer mistakes in complex environments.

Whether you're flying a drone through a forest, guiding a robot through a warehouse, or training a model on driving behavior, sensor fusion enables better inputs—and better inputs lead to better AI.

How It Works in Practice

Sensor fusion might sound like magic, but under the hood, it’s math and logic. At its core, fusion is about alignment, synchronization, and integration.

Let’s break it down.

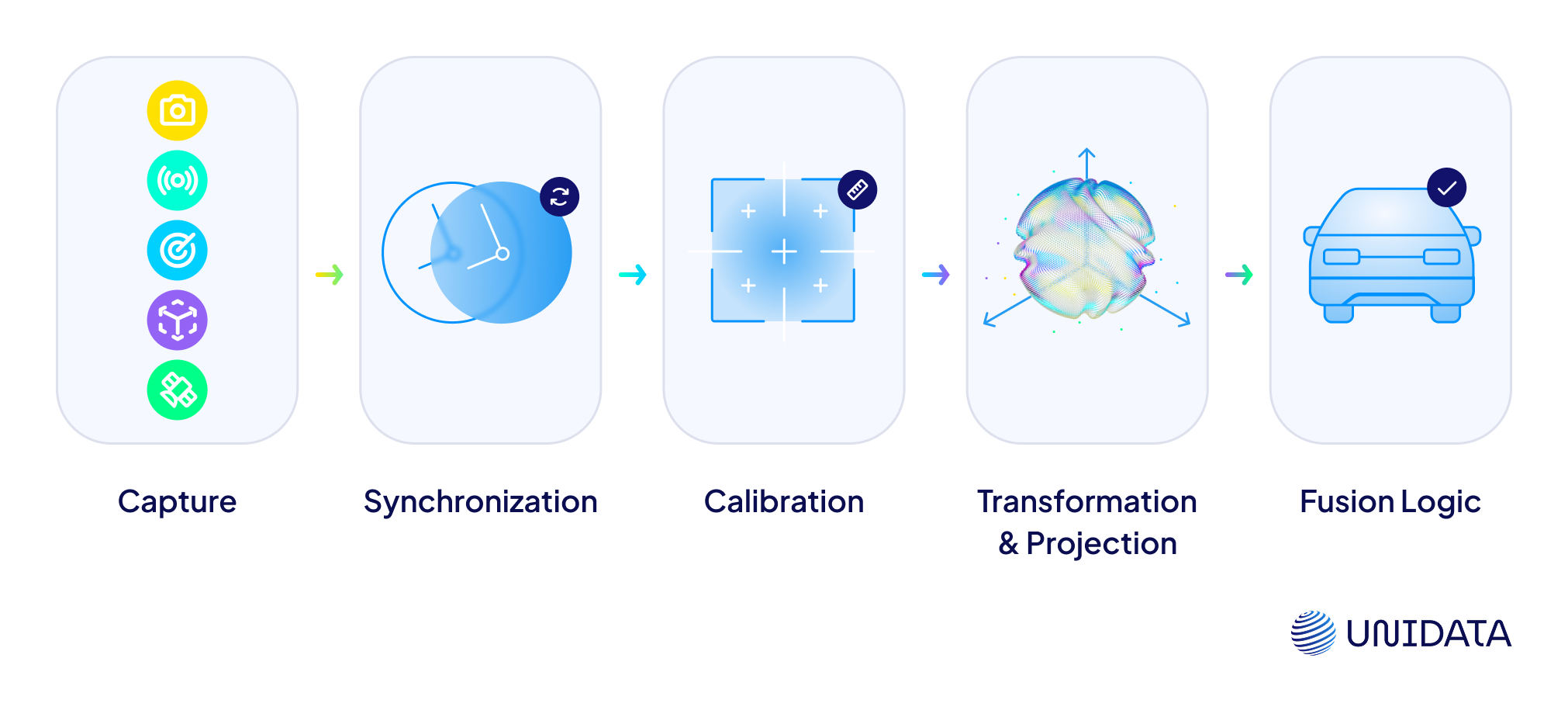

1. Capture

First, sensors gather their respective data:

- Camera → color images

- LiDAR → 3D point clouds

- Radar → object velocities

- IMU → orientation and acceleration

- GPS → geographic coordinates

Each speaks its own “language.” The challenge is getting them all to speak together.

2. Synchronization

Different sensors sample data at different rates. A camera might record 60 frames per second; LiDAR might scan at 10 Hz. Without tight timestamping and time alignment, the system fuses readings taken at different moments, which leads to errors. Time sync is everything.
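
Time alignment can be as simple as pairing each LiDAR scan with the camera frame closest to it in time. Here is a minimal sketch of that idea, assuming sorted timestamps in seconds. The function name `nearest_frame` and the 10 ms `max_skew` tolerance are illustrative choices, not part of any particular library.

```python
from bisect import bisect_left

def nearest_frame(camera_stamps, lidar_stamp, max_skew=0.01):
    """Return the index of the camera frame closest in time to a LiDAR scan.

    camera_stamps must be sorted (seconds). Returns None when the closest
    frame is more than max_skew seconds away from the scan.
    """
    i = bisect_left(camera_stamps, lidar_stamp)
    # Only the neighbours on either side of the insertion point can be closest.
    candidates = [j for j in (i - 1, i) if 0 <= j < len(camera_stamps)]
    if not candidates:
        return None
    best = min(candidates, key=lambda j: abs(camera_stamps[j] - lidar_stamp))
    if abs(camera_stamps[best] - lidar_stamp) > max_skew:
        return None
    return best

# Hypothetical timestamps: one second of a 60 fps camera and a 10 Hz LiDAR.
camera_stamps = [k / 60 for k in range(60)]
lidar_stamps = [k / 10 for k in range(10)]
pairs = [(t, nearest_frame(camera_stamps, t)) for t in lidar_stamps]
```

Production systems often go further and interpolate or motion-compensate between samples, but nearest-timestamp matching with a skew limit is a reasonable starting point.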

3. Calibration

Each sensor sees the world from a different place. Sensor fusion starts with calibrating their positions (extrinsic parameters) and internal quirks (intrinsic parameters). A tiny misalignment—say, 5° off in your LiDAR mount—can lead to huge errors down the line.

4. Transformation and Projection

Once aligned, the system transforms all data into a shared coordinate frame—usually tied to the ego vehicle or robot base. This lets a fused model interpret “object at (X, Y, Z)” the same way across all inputs.
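
To make the shared-frame idea concrete, here is a small sketch that moves a point from a sensor frame into the ego frame with a rotation plus a translation. It assumes the sensor is rotated only about the vertical axis (yaw); real extrinsics use a full 3D rotation. The mount values and the name `sensor_to_ego` are hypothetical.

```python
import math

def sensor_to_ego(point, yaw_deg, translation):
    """Map a 3D point from a sensor frame into the ego frame.

    Assumes the sensor is rotated about the z (vertical) axis by yaw_deg
    and mounted at translation = (tx, ty, tz) in the ego frame.
    """
    x, y, z = point
    tx, ty, tz = translation
    yaw = math.radians(yaw_deg)
    c, s = math.cos(yaw), math.sin(yaw)
    # Rotate first, then shift by the mounting position.
    return (c * x - s * y + tx, s * x + c * y + ty, z + tz)

# Hypothetical mount: 1.2 m forward, 1.6 m up, rotated 90 degrees to the left.
lidar_point = (10.0, 0.0, 0.0)  # 10 m straight ahead of the LiDAR
ego_point = sensor_to_ego(lidar_point, 90.0, (1.2, 0.0, 1.6))
```

With this mount, a point 10 m in front of the LiDAR ends up about 10 m to the side of the ego origin, which is exactly the kind of difference the shared frame is meant to absorb.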

5. Fusion Logic

Here’s where fusion strategy kicks in:

- Low-level fusion merges raw data (e.g. overlaying LiDAR points on a camera image).

- Mid-level fusion combines features (e.g. object edges, depth contours) extracted from each modality.

- High-level fusion merges final predictions from each sensor (e.g. “camera says car,” “LiDAR says obstacle”) into one decision.

The fusion logic depends on the use case. In fast-changing scenes, early (low-level) fusion gives faster reaction time. In safety-critical systems, late (high-level) fusion offers more interpretability and fallback options.

Types of Sensor Fusion

Sensor fusion isn’t one-size-fits-all. Just like there are different ways to combine opinions in a meeting (group brainstorm vs. voting vs. manager decides), there are different levels of fusion depending on how early you merge your data.

Low-Level Fusion (Raw Data Fusion)

This is the most direct—and most chaotic—approach. You take raw outputs from all sensors and throw them into the model together. Imagine overlaying LiDAR depth maps on camera images, stacking radar vectors on top, and feeding that composite to a neural net.

- Pros: Richest signal. Ideal for deep learning models that can handle noise.

- Cons: Requires perfect synchronization and tons of compute.

Best for: Real-time perception in autonomous vehicles, where every millisecond counts.
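
Overlaying LiDAR on a camera image comes down to projecting each 3D point through a camera model. Below is a rough sketch using the standard pinhole model. It assumes the points are already expressed in the camera frame (z pointing forward) and ignores lens distortion; the intrinsics are made-up values for a 1280x720 camera.

```python
def project_to_image(point_cam, fx, fy, cx, cy, width, height):
    """Project a 3D point in the camera frame to pixel coordinates.

    Returns (u, v, depth), or None if the point is behind the camera
    or lands outside the image bounds.
    """
    x, y, z = point_cam
    if z <= 0:
        return None
    u = fx * x / z + cx
    v = fy * y / z + cy
    if not (0 <= u < width and 0 <= v < height):
        return None
    return (u, v, z)

# Hypothetical intrinsics (focal lengths and principal point in pixels).
intrinsics = dict(fx=1000.0, fy=1000.0, cx=640.0, cy=360.0, width=1280, height=720)

points = [
    (0.0, 0.0, 20.0),    # straight ahead
    (2.0, -1.0, 10.0),   # ahead, offset from the optical axis
    (0.0, 0.0, -5.0),    # behind the camera, dropped
    (50.0, 0.0, 10.0),   # far off to the side, outside the image
]
projected = (project_to_image(p, **intrinsics) for p in points)
pixels_with_depth = [p for p in projected if p is not None]
```

Each surviving entry pairs a pixel location with a depth, which is the raw material for a depth channel stacked alongside the RGB image.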

Mid-Level Fusion (Feature Fusion)

In this method, each sensor extracts useful features—edges from images, bounding boxes from radar, clusters from LiDAR. Then you combine these features into a unified set before passing them to your decision system.

- Pros: Less noisy than raw-data fusion, more informative than decision-level fusion.

- Cons: Complex to design. Needs robust feature engineering.

Best for: Robots and drones operating in semi-structured environments.
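
One simple way to combine features is to normalize each sensor's feature vector and concatenate the results. The sketch below assumes the features have already been extracted upstream; the vectors and the name `fuse_features` are placeholders, and learned fusion layers are a common alternative to plain concatenation.

```python
import math

def l2_normalize(vec):
    """Scale a vector to unit length so no modality dominates by magnitude."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else list(vec)

def fuse_features(*per_sensor_features):
    """Concatenate normalized per-sensor feature vectors into one vector."""
    fused = []
    for feats in per_sensor_features:
        fused.extend(l2_normalize(feats))
    return fused

# Placeholder feature vectors, one per modality.
camera_feats = [3.0, 4.0]
lidar_feats = [0.0, 5.0, 0.0]
radar_feats = [12.5]
fused = fuse_features(camera_feats, lidar_feats, radar_feats)
```

The fused vector has one slot per original feature, so the downstream model can still tell which modality each value came from.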

High-Level Fusion (Decision Fusion)

Here, each sensor makes its own call. Camera says “car ahead.” Radar says “moving object.” LiDAR says “3D blob at 25m.” The system takes these opinions and fuses them into a final decision.

- Pros: Easier to debug. Safer fallbacks—if one sensor fails, the others can still contribute.

- Cons: Loses raw detail. Harder to extract new features later.

Best for: Mission-critical applications like aerospace, medical robotics, or defense.
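
Decision fusion can be sketched as a weighted vote over per-sensor verdicts. In the example below, each sensor reports `True`, `False`, or `None` (unavailable), and the weights are illustrative trust levels, not calibrated values. Treating a tie as a detection is a deliberately cautious assumption.

```python
def fuse_decisions(reports, weights, threshold=0.5):
    """Weighted vote over per-sensor detections.

    reports: {sensor_name: True / False / None}, None meaning the sensor
             is unavailable and should be left out of the vote.
    Returns (decision, score); decision is None if no sensor reported.
    """
    active = {name: seen for name, seen in reports.items() if seen is not None}
    total = sum(weights[name] for name in active)
    if total == 0:
        return None, 0.0
    score = sum(weights[name] for name, seen in active.items() if seen) / total
    # Ties count as a detection (the cautious choice).
    return score >= threshold, score

# Illustrative trust weights per sensor.
weights = {"camera": 0.5, "radar": 0.25, "lidar": 0.25}

all_up = fuse_decisions({"camera": True, "radar": True, "lidar": False}, weights)
camera_down = fuse_decisions({"camera": None, "radar": True, "lidar": False}, weights)
nothing = fuse_decisions({"camera": None, "radar": None, "lidar": None}, weights)
```

Because missing sensors are simply dropped from the vote, the system keeps producing a decision when one input fails, which is the fallback behavior described above.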

No method is strictly better than the others—it’s all about your use case, latency tolerance, and how much noise your model can handle.

Sensor Fusion in Real-World Systems

This isn’t just theoretical. Sensor fusion is already running behind the scenes in some of the most ambitious AI systems on the planet.

Autonomous Vehicles

Self-driving cars are the poster child for sensor fusion. They use:

- Cameras for road markings and signs

- LiDAR for lane edges and objects

- Radar for speed tracking in all weather

- IMU & GPS for navigation and motion stability

These sensors work together to build a full 3D map of the surroundings, detect pedestrians, predict other vehicles’ movements, and plan routes—all in real time.

Waymo fuses high-res LiDAR with multiple cameras and radars to achieve centimeter-level accuracy. Tesla, on the other hand, made waves by ditching LiDAR in favor of a camera-first approach, initially paired with radar and later shifting toward vision alone.

Drones & Aerial Systems

In drones, fusion keeps you flying straight and avoiding trees.

- GPS tracks location

- IMUs detect wind drift

- Cameras map terrain

- LiDAR avoids obstacles

Fusion helps drones stabilize during wind gusts, fly pre-mapped routes, and land precisely—even when GPS goes weak.

Robotics & Warehousing

Warehouse robots fuse floor maps, real-time LiDAR scans, and camera feeds to:

- Navigate narrow aisles

- Avoid collisions with humans

- Detect shelves and pick targets

Fusion allows smooth, autonomous movement in dynamic, cluttered indoor spaces—where one wrong move could mean a broken arm (robotic or human).

Smart Cities

Traffic lights, road sensors, CCTV, vehicle counters—all feeding data into a central system. Fusion enables:

- Predictive traffic control

- Pedestrian safety alerts

- Real-time emergency response

In places like Singapore and Amsterdam, sensor fusion powers intelligent transport systems that adjust signals dynamically and detect traffic anomalies before they cause jams.

AR/VR and Spatial Computing

Headsets fuse IMUs, cameras, and depth sensors to understand your position, movements, and surroundings in real time.

- IMUs track head tilt and movement

- Cameras map the room

- Depth sensors keep virtual objects anchored to real ones

Without fusion, you get nausea. With it, you get seamless immersion.

Challenges and Limitations

Sensor fusion isn’t bulletproof. When it fails, it often fails quietly—and that’s dangerous. Here’s where things get tricky.

Calibration Drift

Sensors can become misaligned over time due to vibration, temperature changes, or mechanical stress. A 1° camera offset shifts an object’s estimated position by well over a meter at 100 m range, and the error keeps growing with distance.
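
The effect of a small angular offset is easy to estimate with basic trigonometry: the sideways error is roughly the range times the tangent of the offset angle. A quick sketch, assuming a flat scene and a pure rotation error (the function name is just for illustration):

```python
import math

def lateral_error(range_m, offset_deg):
    """Approximate sideways position error caused by an angular offset."""
    return range_m * math.tan(math.radians(offset_deg))

# Error from a 1 degree offset at a few example ranges (meters).
errors = {r: round(lateral_error(r, 1.0), 2) for r in (10, 50, 100)}
```

The error scales linearly with range, which is why drift that looks harmless up close becomes a problem for distant objects.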

Latency & Bandwidth

More sensors mean more data—and more lag. If your LiDAR scan arrives 0.2 seconds late, your “fused” scene is already outdated. Edge fusion can help, but it demands powerful on-board hardware.
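
One common mitigation is to extrapolate stale measurements forward to the current time. The sketch below assumes constant velocity over the delay, which is only reasonable for short latencies; the numbers are hypothetical.

```python
def compensate_latency(position_m, velocity_mps, latency_s):
    """Extrapolate a stale 1D position forward, assuming constant velocity."""
    return position_m + velocity_mps * latency_s

stale_gap_m = 40.0         # gap to the object, from a scan that is 0.2 s old
closing_speed_mps = -15.0  # negative: the gap is shrinking
current_gap_m = compensate_latency(stale_gap_m, closing_speed_mps, 0.2)
```

In this example the object is about 3 m closer than the late scan suggests, which shows how quickly a 0.2 second delay turns into real distance.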

Sensor Disagreement

What happens when one sensor says “object” and the other says “nothing”? Fusion logic has to resolve conflicts—without making catastrophic mistakes.
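
One way to handle disagreement is to combine per-sensor confidences instead of hard yes/no answers. The sketch below adds log-odds, which is a standard way to merge probabilities under two simplifying assumptions: the sensors err independently, and the prior is 50/50. The low `brake_above` threshold is an illustrative safety bias, not a recommended value.

```python
import math

def fuse_probabilities(probs, eps=1e-6):
    """Combine independent detection probabilities by summing log-odds."""
    log_odds = 0.0
    for p in probs:
        p = min(max(p, eps), 1 - eps)  # keep log() finite at 0 and 1
        log_odds += math.log(p / (1 - p))
    return 1 / (1 + math.exp(-log_odds))

def resolve(probs, brake_above=0.3):
    """Pick an action from the fused probability, biased toward caution."""
    fused = fuse_probabilities(probs)
    action = "brake" if fused >= brake_above else "proceed"
    return action, fused

# Camera is fairly sure there is an object; LiDAR mostly disagrees.
conflict = resolve([0.9, 0.2])
# Both sensors lean toward "nothing there".
agreement = resolve([0.1, 0.2])
```

In the conflicting case the confident camera outweighs the doubtful LiDAR and the fused probability stays high enough to brake, while two weak readings together push it well below the threshold.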

Cost & Power Consumption

Adding sensors boosts accuracy—but also cost, weight, and energy draw. In drones or wearables, that trade-off can be brutal.

The solution? Smarter algorithms, tighter calibration protocols, and fallback strategies when one signal goes rogue.

How Sensor Fusion Enhances AI Training Data

Most people think of sensor fusion as a runtime feature—something that helps robots or vehicles make better decisions on the fly. But there’s a quieter benefit that gets less attention: richer, cleaner, and more context-aware training data.

Why does that matter? Because AI systems are only as good as the data they learn from.

Cleaner Labels

Say your camera sees a blurry blob. Is it a pedestrian or just a shadow? Hard to say. But if LiDAR confirms a solid object at that location, and radar detects motion, you now have enough evidence to confidently label it as a person.

In annotation terms: sensor fusion reduces ambiguity. That means fewer mislabeled frames and more accurate model training downstream.

Multi-Modal Learning

By feeding models synchronized data from vision, depth, and motion sensors, you train them to learn cross-modal correlations—like how a moving object looks in both radar and RGB, or how shadows affect 3D perception.

The result? Models that generalize better, adapt faster, and perform more reliably in edge cases.

Fewer Blind Spots

If your training data comes from a single sensor, it's easy to miss edge cases—like low-light errors, depth confusion, or sensor dropouts. Fusion helps fill those gaps by letting one sensor “cover” for another.

This is especially valuable when collecting data for:

- Autonomous vehicle scenarios (e.g. rain, night, tunnel)

- Human-in-the-loop systems (e.g. hand gestures, eye tracking)

- Robotics in unstructured environments (e.g. rubble, warehouse clutter)

Toolkits and Frameworks for Sensor Fusion

Building a sensor fusion system from scratch isn’t easy—but you don’t have to start from zero. A number of mature tools and frameworks are available to help you prototype, test, and deploy fusion pipelines.

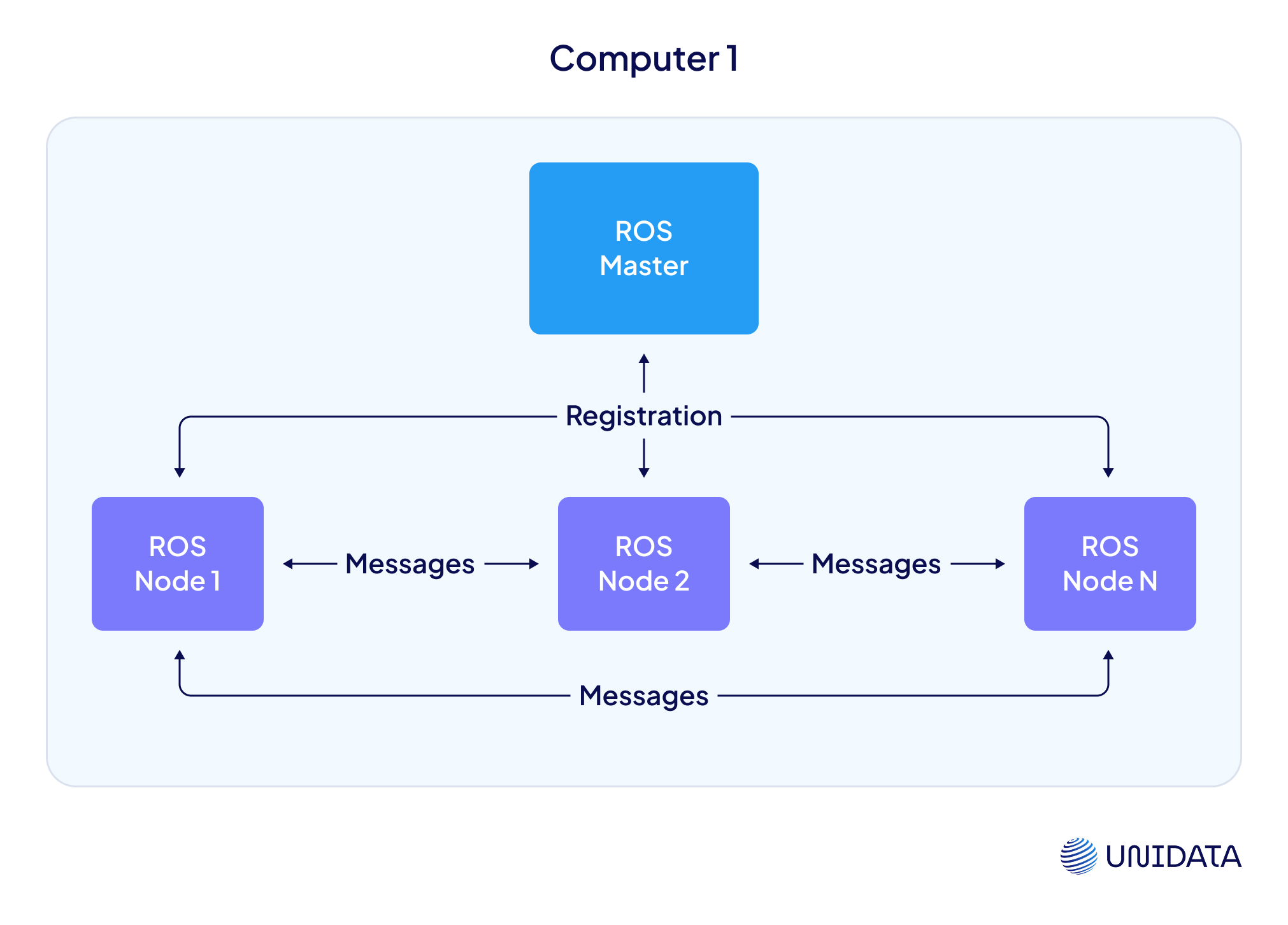

ROS (Robot Operating System)

The go-to open-source middleware for robotics. ROS supports:

- Real-time sensor data streams

- Message passing between nodes

- Integration with LiDAR, cameras, IMUs, and more

ROS 2, the newer generation, is built on DDS and adds security features and better support for real-time systems.

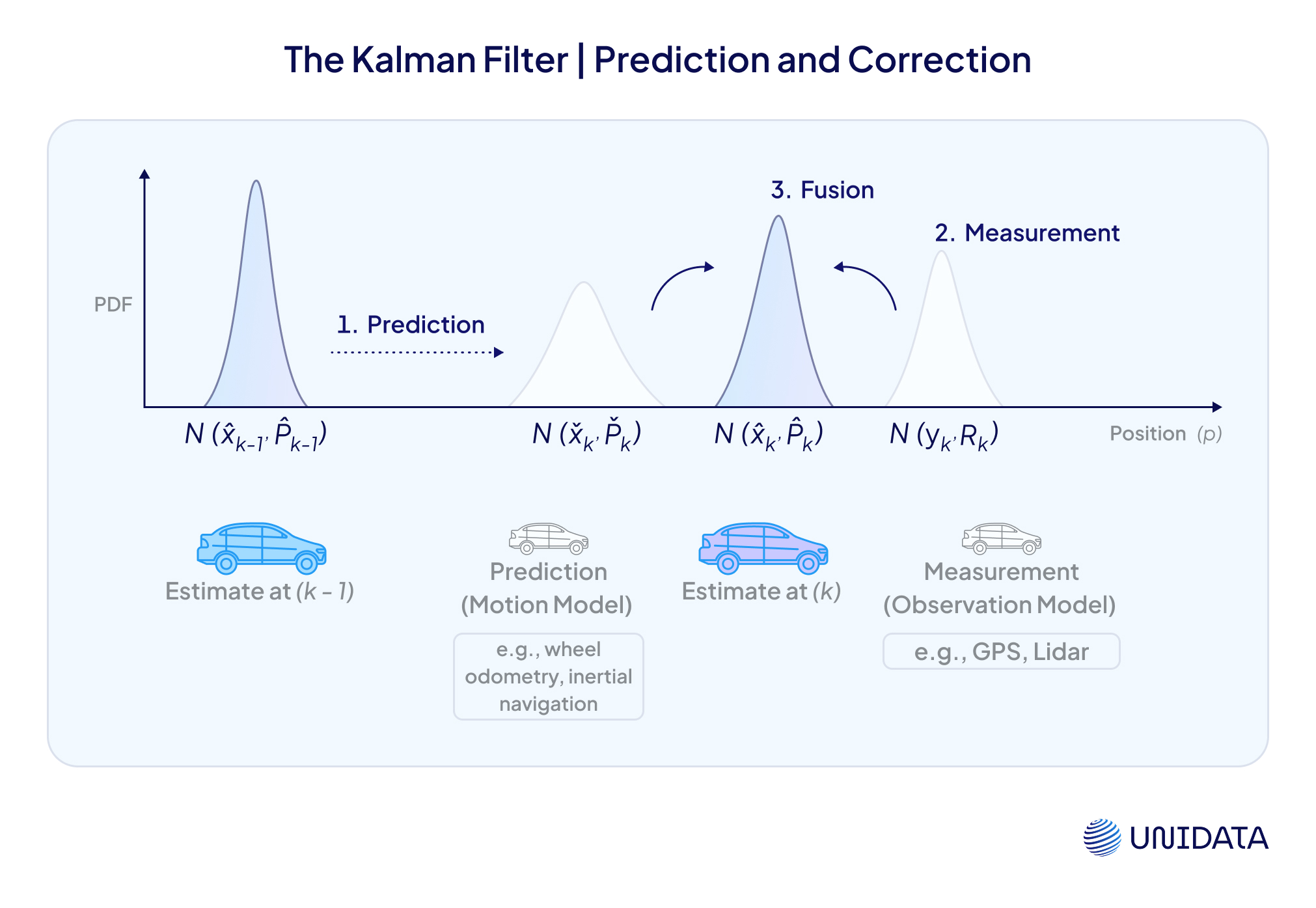

Kalman & Extended Kalman Filters

Classic tools for fusing motion and positional data—especially IMU + GPS. Lightweight and mathematically elegant, they’re still widely used in drones, autonomous systems, and embedded fusion tasks.
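
To show the predict/update rhythm, here is a deliberately tiny scalar Kalman filter that tracks position along one axis. It is a sketch under simplifying assumptions: `velocity` stands in for a motion estimate derived from the IMU, `gps` is a position fix (or `None` during a dropout), and the noise variances `q` and `r` are made-up numbers.

```python
def kalman_step(x, p, velocity, dt, gps, q=0.1, r=4.0):
    """One predict/update cycle of a scalar Kalman filter.

    x, p     : current position estimate and its variance
    velocity : motion estimate used for prediction (assumed IMU-derived)
    gps      : GPS position measurement, or None if no fix is available
    q, r     : process and measurement noise variances (illustrative)
    """
    # Predict: move the estimate forward and grow the uncertainty.
    x = x + velocity * dt
    p = p + q
    # Update: blend in the GPS fix, weighted by the Kalman gain.
    if gps is not None:
        k = p / (p + r)
        x = x + k * (gps - x)
        p = (1 - k) * p
    return x, p

# Three one-second steps at 1 m/s; the second step has no GPS fix.
x, p = 0.0, 1.0
history = []
for gps in (1.2, None, 2.9):
    x, p = kalman_step(x, p, velocity=1.0, dt=1.0, gps=gps)
    history.append((x, p))
```

The variance grows during the dropout and shrinks again when GPS returns, which is the filter's way of tracking how much to trust its own estimate.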

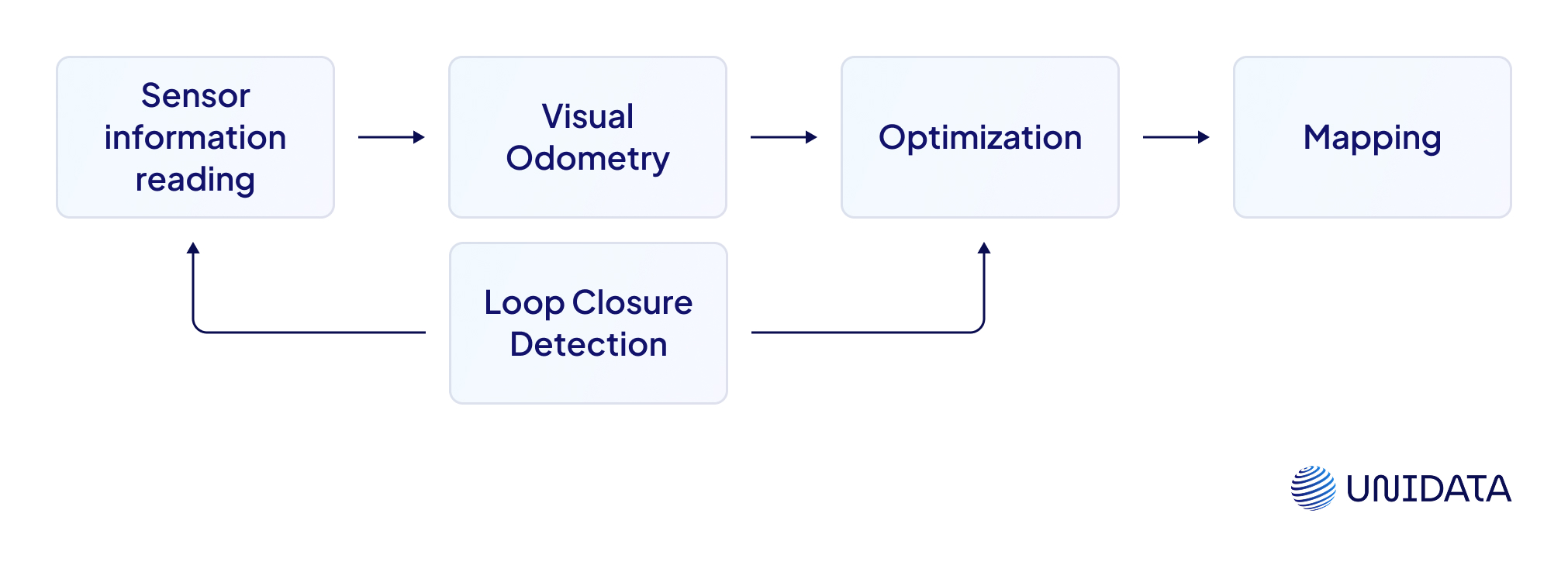

SLAM Frameworks

SLAM (Simultaneous Localization and Mapping) algorithms like RTAB-Map, Cartographer, and ORB-SLAM combine sensor data to build maps of unknown environments while tracking position.

Commercial SDKs

For production-grade pipelines, vendors like NVIDIA, Hexagon, and MathWorks offer fusion libraries that integrate directly with edge hardware, automotive stacks, or simulation environments.

Choosing a framework depends on your application, latency needs, and whether you’re optimizing for speed, scale, or explainability.

The Future of Sensor Fusion

Sensor fusion isn’t static—it’s evolving fast alongside AI. Here's what’s coming next.

Neuromorphic Fusion

Inspired by how the human brain processes multisensory input, neuromorphic approaches use event-based cameras and spiking neural nets to fuse data with ultra-low latency. Ideal for edge devices and real-time robotics.

AI-Driven Fusion Strategies

Instead of hand-tuning fusion logic, researchers are now training neural networks to learn how to fuse data optimally. Meta-fusion layers are emerging in end-to-end models that adapt fusion strategies to the task and environment.

Synthetic Fusion Data

Simulators (e.g. CARLA, AirSim) and diffusion models can generate synthetic multimodal datasets. This helps train models on rare edge cases without needing millions of real-world miles.

On-Device Fusion

As chips get smaller and more powerful, expect sensor fusion to move closer to the edge—running directly on phones, drones, and AR glasses. That means faster decisions, lower latency, and improved privacy.

Final Takeaway

Sensor fusion isn’t just a feature—it’s a survival skill for AI. When one sensor fails, another steps in. When the world turns unpredictable, fused data keeps systems grounded. If your AI needs to make real-world decisions, fusion isn’t optional. It’s the difference between guessing and knowing.