In our increasingly digital world, image classification stands out as a foundational technology that enables machines to understand and interpret visual data. Whether it's your smartphone recognizing your face to unlock the screen or software identifying objects in a photograph, image classification plays a crucial role. At its essence, image classification involves assigning a label to an entire image based on its content, harnessing the power of artificial intelligence and machine learning.

This guide explores the main types of image classification, how it works, why data preparation matters, which algorithms drive it, and where it is applied.

What Is Image Classification?

Image classification refers to the task of categorizing images into predefined labels or classes. This involves analyzing an image's content and assigning it to one or more categories based on prior training. The training process typically uses a labeled dataset, enabling the model to learn the distinguishing features of each class.

Key Statistics:

- Reported results show that models trained on large, diverse datasets can exceed 95% accuracy in applications such as facial recognition and object identification.

- In contrast, biased or imbalanced datasets can cause substantial performance drops, reported at up to 40% in some machine learning applications.

This level of accuracy underscores the potential of image classification to transform fields from healthcare to retail. However, achieving it depends heavily on high-quality data and well-designed models.

Types of Image Classification

Image classification methodologies can vary widely based on the problem being addressed. Understanding the different types is crucial for selecting the right approach for a given task. Here are the main types:

1. Binary Classification

Binary classification simplifies decision-making by categorizing images into two distinct classes, providing a straightforward yes/no outcome. This method is frequently used when a clear distinction between two categories is required, such as:

- Animal Detection: Determining if a specific animal, like a dog, appears in a photo or not. This is particularly useful for image-based search engines or wildlife monitoring systems.

- Spam Detection: Flagging emails or messages, including image-based spam, as either spam or not spam. This helps filter out irrelevant or harmful content for users.

- Quality Control: Detecting product defects by categorizing items as “acceptable” or “defective” in manufacturing, which improves production standards and reduces waste.
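In practice, a binary classifier usually produces a single raw score per image, and a threshold turns that score into the yes/no outcome. The minimal sketch below assumes a hypothetical model whose output is a logit, which is an unbounded score. It converts the logit to a probability with the sigmoid function and compares the result to a threshold of 0.5. That threshold is an assumption, and it is often tuned per application.

```python
import math

def sigmoid(logit: float) -> float:
    """Map a raw model score (logit) to a probability in (0, 1), avoiding overflow."""
    if logit >= 0:
        return 1.0 / (1.0 + math.exp(-logit))
    z = math.exp(logit)
    return z / (1.0 + z)

def classify_binary(logit: float, threshold: float = 0.5,
                    positive: str = "defective", negative: str = "acceptable") -> str:
    """Return the positive label when the predicted probability reaches the threshold."""
    return positive if sigmoid(logit) >= threshold else negative

# Hypothetical logits from a quality-control model for three product photos.
for score in (2.3, -1.7, 0.0):
    print(score, classify_binary(score))
```

Raising the threshold makes the classifier flag fewer items as defective. Lowering it catches more defects at the cost of more false alarms.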

2. Multiclass Classification

Multiclass classification assigns each item to exactly one of three or more distinct categories. Common examples include:

- Weather Prediction: Categorizing weather images into types like "sunny," "cloudy," "rainy," or "snowy."

- Customer Feedback Analysis: Sorting feedback comments into categories like "positive," "neutral," or "negative," helping companies understand general customer sentiment.
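One common approach to multiclass problems, assumed here rather than prescribed by this article, works in three steps. The model outputs one score per class. Softmax converts those scores into probabilities that sum to 1. The class with the highest probability is the prediction. The scores below are made-up values for a single weather image.

```python
import math

CLASSES = ["sunny", "cloudy", "rainy", "snowy"]

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1 (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def classify_multiclass(logits, classes=CLASSES):
    """Pick exactly one class: the one with the highest probability."""
    probs = softmax(logits)
    best = max(range(len(classes)), key=lambda i: probs[i])
    return classes[best], probs[best]

# Hypothetical per-class scores for one weather photo.
label, confidence = classify_multiclass([0.2, 1.5, 3.1, -0.4])
print(label, round(confidence, 3))
```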

3. Multilabel Classification

In multilabel classification, each image or item can belong to multiple categories simultaneously. This approach is useful in cases like:

- Photo Tagging: A single beach photo can be tagged as "ocean," "sunset," and "vacation" all at once, which is helpful for social media and photo organization.

- Movie Genres: A movie can be labeled with multiple genres, such as "comedy," "action," and "sci-fi," to capture the full range of its themes.
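Multilabel classification differs from multiclass mainly in the decision rule. A multiclass model applies one softmax across all classes. A multilabel model typically gives each label its own sigmoid and its own yes/no threshold, so any number of labels can apply to the same image. This sketch assumes hypothetical per-tag scores and a shared 0.5 threshold.

```python
import math

TAGS = ["ocean", "sunset", "vacation", "city"]

def sigmoid(x: float) -> float:
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def classify_multilabel(logits, tags=TAGS, threshold=0.5):
    """Each tag is an independent yes/no decision, so zero, one, or many tags can apply."""
    return [tag for tag, score in zip(tags, logits) if sigmoid(score) >= threshold]

# Hypothetical per-tag scores for a beach photo.
print(classify_multilabel([2.4, 1.1, 0.7, -3.0]))  # ['ocean', 'sunset', 'vacation']
```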

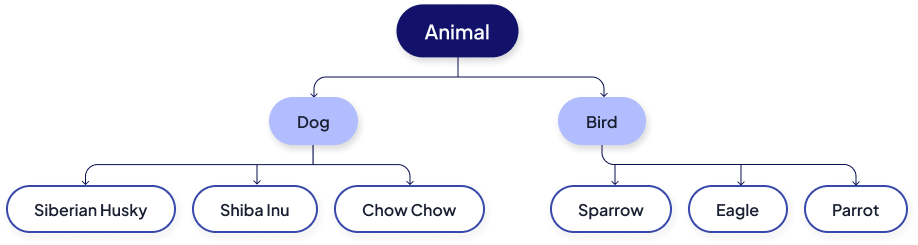

4. Hierarchical Classification

Hierarchical classification sorts items into a structured hierarchy with categories and subcategories. This approach works well for:

- Animal Species Identification: First, a model identifies an animal as a "bird." Then, a second model classifies it into specific species, like "sparrow" or "eagle."

- Product Cataloging: Initially, products are classified as "electronics" or "furniture." Electronics are then divided further into "laptops," "phones," and "tablets" for precise inventory management.

- Medical Diagnosis: A model first categorizes a condition as "infection" or "injury." If it’s an infection, another model might identify it as "bacterial" or "viral."
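The two-stage flow described above can be sketched as a simple router. A top-level model picks the parent category, and that prediction selects which specialized model runs next. The "models" below are hypothetical stand-ins that read a toy feature dictionary rather than pixels. In a real system, each one would be a trained classifier.

```python
from typing import Callable, Dict, Tuple

# A "model" is any function mapping an image to a label.
# These stand-ins read a toy feature dict instead of real pixels (hypothetical).
Model = Callable[[dict], str]

def coarse_model(image: dict) -> str:
    return "bird" if image.get("has_feathers") else "mammal"

def bird_model(image: dict) -> str:
    return "eagle" if image.get("wingspan_cm", 0) > 100 else "sparrow"

def mammal_model(image: dict) -> str:
    return "dog" if image.get("barks") else "cat"

# The first-stage prediction selects the second-stage model.
FINE_MODELS: Dict[str, Model] = {"bird": bird_model, "mammal": mammal_model}

def classify_hierarchical(image: dict) -> Tuple[str, str]:
    """Predict the top-level category, then refine it with the matching sub-model."""
    parent = coarse_model(image)
    child = FINE_MODELS[parent](image)
    return parent, child

print(classify_hierarchical({"has_feathers": True, "wingspan_cm": 200}))  # ('bird', 'eagle')
print(classify_hierarchical({"has_feathers": True, "wingspan_cm": 20}))   # ('bird', 'sparrow')
```

Because each sub-model only has to separate classes within one branch, it can be trained on a smaller, more focused label set.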

Overview of Classification Types

| Classification Type | Description | Example |
|---|---|---|
| Binary | Two categories. | Tumor classification: benign vs. malignant. |
| Multiclass | Three or more categories. | Medical diagnosis: various diseases. |
| Multilabel | Multiple labels per image. | Image tagging with multiple descriptors. |
| Hierarchical | Classes organized in a hierarchy. | Classifying fruits into types and subtypes. |

Image Classification vs. Object Detection

Image classification, object localization, and object detection: if these terms feel a bit overwhelming, you’re not alone! They are fundamental concepts in computer vision and image annotation, and each has its own purpose and applications. Let’s break them down.

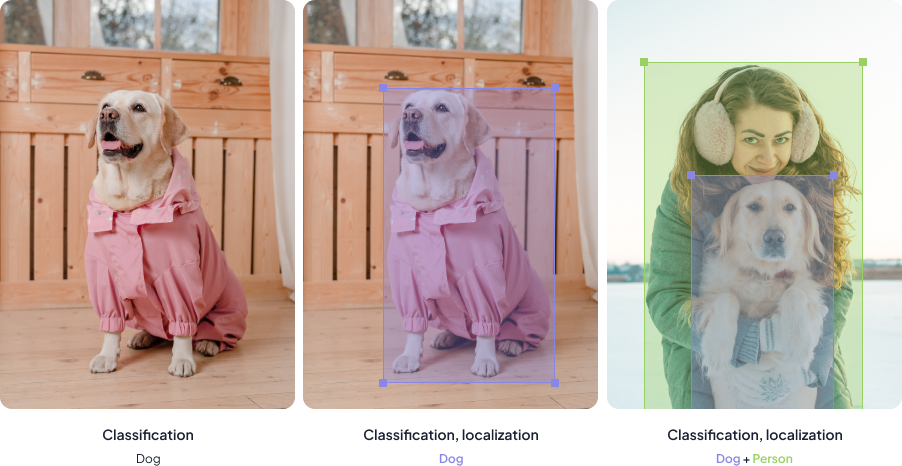

- Image Classification: The Basics

Image classification is the simplest of these tasks. It involves assigning a single label to an entire image, identifying the overall content without distinguishing between multiple objects or their positions. For instance, if the model sees an image of a dog in a park, it might simply label it as “dog,” regardless of where the dog is located within the image. This method works well when only a general overview is needed and is often used in simpler categorization tasks.

- Object Localization: Adding Precision

Moving beyond classification, object localization aims to pinpoint the location of the primary object or region of interest within an image. By drawing a bounding box around the detected object, localization provides spatial data on its position. For instance, in the same image of a dog in the park, object localization would place a bounding box around the dog itself, marking its precise spot within the image. This is useful when focusing on one prominent item within an image while ignoring any surrounding context.

- Object Detection: Combining Classification and Localization

Object detection goes a step further by combining classification and localization. Instead of identifying just one main object, it recognizes, labels, and localizes multiple objects within an image. It draws a separate bounding box around each identified object and assigns each box a label. For example, if the park photo also contains a person, object detection would return both the person and the dog, each with its own bounding box and label.
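One way to see the difference between the three tasks is to compare what each one returns. The sketch below uses hypothetical Python data classes and made-up coordinates for the park photo. Boxes follow the common (x_min, y_min, x_max, y_max) pixel convention. That convention is an assumption, since formats differ: YOLO, for example, stores normalized center coordinates plus width and height.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Bounding box as (x_min, y_min, x_max, y_max) in pixels (one common convention).
Box = Tuple[int, int, int, int]

@dataclass
class ClassificationResult:
    label: str              # one label for the whole image

@dataclass
class LocalizationResult:
    label: str
    box: Box                # where the primary object is

@dataclass
class Detection:
    label: str
    box: Box
    score: float            # model confidence for this object

# Hypothetical outputs for a park photo containing a dog and a person.
classification = ClassificationResult(label="dog")
localization = LocalizationResult(label="dog", box=(120, 200, 310, 380))
detections: List[Detection] = [
    Detection(label="dog", box=(120, 200, 310, 380), score=0.94),
    Detection(label="person", box=(350, 60, 470, 400), score=0.91),
]

for d in detections:
    x_min, y_min, x_max, y_max = d.box
    print(f"{d.label}: {x_max - x_min}x{y_max - y_min} px box, score {d.score}")
```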

How Image Classification Works

The process of image classification involves several steps, each critical to achieving accurate results. Let's break it down:

Image Pre-processing

Before training a model, pre-processing the images is essential to ensure that they are suitable for analysis. Common pre-processing techniques include:

- Resizing: Adjusting images to a consistent size.

- Image Cropping: Removing unwanted sections of an image to focus on the primary object or region of interest.

- Normalization: Scaling pixel values to a specific range (often 0 to 1).

- Noise Reduction: Reducing random variations in pixel intensity to produce cleaner images.

- Data Augmentation: Applying transformations (e.g., rotation, flipping) to increase dataset diversity.

Common Image Pre-processing Techniques

| Technique | Purpose |
|---|---|
| Resizing | Standardizes image dimensions for consistency. |
| Image Cropping | Focuses on the main object or area of interest and removes irrelevant background. |
| Normalization | Puts pixel values on a consistent scale, which typically makes training more stable. |
| Noise Reduction | Minimizes random pixel variations, producing cleaner images. |
| Data Augmentation | Expands the dataset by creating variations of existing images. |
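The pre-processing steps above can be chained into a small pipeline. This NumPy-only sketch favors readability, so it uses simple stand-in implementations: nearest-neighbor resizing and a 3x3 mean filter for noise reduction. Production pipelines usually rely on an image library instead. The 224x224 target size is an assumption, though it is a common CNN input size.

```python
import numpy as np

def center_crop(img: np.ndarray, size: int) -> np.ndarray:
    """Cut a size x size square from the middle of an H x W x C image."""
    h, w = img.shape[:2]
    top, left = (h - size) // 2, (w - size) // 2
    return img[top:top + size, left:left + size]

def resize_nearest(img: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Nearest-neighbor resize: pick the closest source row/column for each output pixel."""
    h, w = img.shape[:2]
    rows = np.arange(out_h) * h // out_h
    cols = np.arange(out_w) * w // out_w
    return img[rows][:, cols]

def normalize(img: np.ndarray) -> np.ndarray:
    """Scale 8-bit pixel values from [0, 255] to [0.0, 1.0]."""
    return img.astype(np.float32) / 255.0

def mean_filter(img: np.ndarray) -> np.ndarray:
    """3x3 box blur per channel, a basic noise-reduction step."""
    padded = np.pad(img, ((1, 1), (1, 1), (0, 0)), mode="edge")
    h, w = img.shape[:2]
    out = np.zeros_like(img, dtype=np.float32)
    for dy in range(3):
        for dx in range(3):
            out += padded[dy:dy + h, dx:dx + w]
    return out / 9.0

def augment_flip(img: np.ndarray) -> np.ndarray:
    """Horizontal flip, a label-preserving augmentation for most photos."""
    return img[:, ::-1]

rng = np.random.default_rng(0)
raw = rng.integers(0, 256, size=(480, 640, 3), dtype=np.uint8)  # stand-in photo

img = center_crop(raw, 400)          # crop
img = resize_nearest(img, 224, 224)  # resize
img = normalize(img)                 # normalize to [0, 1]
img = mean_filter(img)               # reduce noise
flipped = augment_flip(img)          # extra augmented sample
print(img.shape, img.dtype, float(img.min()), float(img.max()))
```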

Feature Extraction

Feature extraction is a vital step in image classification, focusing on identifying and isolating relevant characteristics from images to enhance model performance. In traditional image processing, methods like edge detection and texture analysis were foundational.

- Edge detection highlights image contours and boundaries by identifying abrupt changes in pixel intensity, allowing algorithms to focus on an object’s shape and outline.

- Texture analysis, on the other hand, captures finer details within an image, such as repetitive patterns or surface properties, aiding in distinguishing between materials or patterns (e.g., smooth vs. rough textures).

Together, these techniques help create a basic structural understanding of the image.
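Edge detection is typically done by sliding a small kernel over a grayscale image. The sketch below uses the classic Sobel kernels, which are one common choice among several edge detectors. It runs them on a toy image with a single vertical boundary. The gradient magnitude is high only where intensity changes abruptly.

```python
import numpy as np

# Sobel kernels respond to horizontal (x) and vertical (y) intensity changes.
SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=np.float32)
SOBEL_Y = SOBEL_X.T

def filter2d(gray: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide a 3x3 kernel over a grayscale image (cross-correlation, 'valid' region only)."""
    h, w = gray.shape
    out = np.zeros((h - 2, w - 2), dtype=np.float32)
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * gray[dy:dy + h - 2, dx:dx + w - 2]
    return out

def sobel_edges(gray: np.ndarray) -> np.ndarray:
    """Gradient magnitude: large where pixel intensity changes abruptly."""
    gx = filter2d(gray, SOBEL_X)
    gy = filter2d(gray, SOBEL_Y)
    return np.hypot(gx, gy)

# Toy image: dark left half, bright right half, so there is one vertical edge.
gray = np.zeros((6, 8), dtype=np.float32)
gray[:, 4:] = 1.0
edges = sobel_edges(gray)
print(edges.round(1))
```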

With the advent of deep learning, Convolutional Neural Networks (CNNs) have transformed feature extraction by automatically learning intricate, hierarchical features directly from raw images. CNNs use layers of filters to progressively detect edges, shapes, patterns, and complex structures. Early layers in a CNN typically capture low-level features, such as edges and colors, while deeper layers identify high-level patterns like specific object parts or textures. This multi-layered approach enables CNNs to "see" the image more comprehensively and handle complex datasets with minimal manual feature engineering.
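To make the layered idea concrete, here is a forward pass through two convolution + ReLU + max-pooling stages in plain NumPy. It is a sketch for illustration only, with two important caveats. First, the filter weights are random, whereas a real CNN learns them during training. Second, the layer sizes (8 and 16 filters, 3x3 kernels) are arbitrary assumptions. Notice that each stage combines all feature maps from the previous stage while shrinking the spatial size.

```python
import numpy as np

def conv_layer(x: np.ndarray, filters: np.ndarray) -> np.ndarray:
    """Valid 2D cross-correlation.
    x: (C_in, H, W); filters: (C_out, C_in, k, k) -> output (C_out, H-k+1, W-k+1)."""
    c_out, _, k, _ = filters.shape
    _, h, w = x.shape
    out = np.zeros((c_out, h - k + 1, w - k + 1), dtype=np.float32)
    for o in range(c_out):
        for dy in range(k):
            for dx in range(k):
                # Weighted sum over all input channels at this kernel offset.
                patch = x[:, dy:dy + h - k + 1, dx:dx + w - k + 1]
                out[o] += np.tensordot(filters[o, :, dy, dx], patch, axes=1)
    return out

def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)

def max_pool2x2(x: np.ndarray) -> np.ndarray:
    """Keep the maximum of each 2x2 block (odd edges are trimmed)."""
    c, h, w = x.shape
    x = x[:, :h - h % 2, :w - w % 2]
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))

rng = np.random.default_rng(0)
image = rng.random((3, 32, 32), dtype=np.float32)  # stand-in RGB image, channels first

# Random weights only show the shapes; training would learn these filters.
w1 = rng.standard_normal((8, 3, 3, 3)).astype(np.float32)   # 8 low-level filters
w2 = rng.standard_normal((16, 8, 3, 3)).astype(np.float32)  # 16 filters over layer-1 maps

x = max_pool2x2(relu(conv_layer(image, w1)))  # (8, 15, 15)
x = max_pool2x2(relu(conv_layer(x, w2)))      # (16, 6, 6)
print(x.shape)
```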

How to Prepare Data for Image Classification?

Preparing data effectively is paramount for successful image classification. Here are the critical steps involved:

Data Collection

Collecting a diverse set of images is crucial for accurately representing the categories you intend to classify. Potential sources include:

- Third-Party Data Providers: Partner with established companies that specialize in image datasets.

- Proprietary Archives: Utilize your company’s existing image libraries or user-generated content.

- Public Datasets: Access publicly available datasets, such as CIFAR-10 or ImageNet, which contain a wide range of labeled images.

- Crowdsourcing Platforms: Leverage platforms like Toloka to gather images from a broader audience.

Data Annotation

Accurate labeling is essential during the training phase. Annotate each image with the correct label using annotation tools. This process requires diligence and often involves a team of annotators to ensure consistency and precision.
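When several annotators label the same image, their answers have to be merged into one label. A simple and widely used rule is majority voting. The sketch below assumes that rule and falls back to a "disputed" label in two situations: when there is a tie, or when no label wins more than half of the votes. Both the function name and the agreement threshold are illustrative.

```python
from collections import Counter
from typing import List

def aggregate_labels(votes: List[str], min_agreement: float = 0.5) -> str:
    """Return the majority label, or 'disputed' when no label wins a clear majority."""
    if not votes:
        return "disputed"
    counts = Counter(votes).most_common()
    top_label, top_count = counts[0]
    # A tie for first place also counts as disagreement.
    tied = len(counts) > 1 and counts[1][1] == top_count
    if tied or top_count / len(votes) <= min_agreement:
        return "disputed"
    return top_label

print(aggregate_labels(["dog", "dog", "cat"]))   # dog
print(aggregate_labels(["dog", "cat"]))          # disputed
print(aggregate_labels(["dog", "cat", "bird"]))  # disputed
```

Images that end up "disputed" can then be routed to a senior annotator or discussed with the client rather than silently entering the training set.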

Popular Image Annotation Tools

| Tool | Description |
|---|---|
| CVAT | Open-source tool supporting a wide range of annotations, including bounding boxes, polygons, semantic segmentation, and classification labels. |
| LabelImg | Simple, open-source tool for annotating images with bounding boxes and basic classification. Supports formats like XML (Pascal VOC) and YOLO. |
| VGG Image Annotator | Web-based tool for annotating images and videos with bounding boxes, polygons, regions, and classification tags, with export options in JSON. |

Data Splitting

To evaluate model performance accurately, split the dataset into three subsets: training, validation, and testing.

Typical Data Splitting Ratios

| Subset | Typical Ratio |
|---|---|
| Training Set | 70% |
| Validation Set | 15% |
| Test Set | 15% |
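A minimal way to produce a 70/15/15 split is to shuffle the dataset once with a fixed seed and then cut the list. The file names below are hypothetical. This is a plain random split. For imbalanced datasets, a stratified split that applies the same ratios within each class is often the safer choice.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")

def split_dataset(items: Sequence[T], train: float = 0.70, val: float = 0.15,
                  seed: int = 42) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle once, then cut into train/validation/test (test gets the remainder)."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed gives a reproducible split
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * val)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])

paths = [f"img_{i:04d}.jpg" for i in range(1000)]  # hypothetical file names
train_set, val_set, test_set = split_dataset(paths)
print(len(train_set), len(val_set), len(test_set))  # 700 150 150
```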

Image Classification Cases

At UniData, we have worked on many image classification projects. Here are some of them:

Classification of Real Estate Construction Photos

For the residential construction stage classification model, the UniData team completed three annotation iterations, processing 2,500 photos in each round. Classification was structured into several stages to simplify the tagging process and reduce the number of tags. Each photo underwent a series of classifications to identify:

- Room Type: Specifies the type of room (e.g., bathroom, hallway).

- Finishing Type: Indicates the type of surface (e.g., walls, ceiling, floor) and classifies the quality and subcategory of the finish.

- Installations: Identifies installed items such as interior doors, entry doors, and other major installations.

- Object Presence: Labels items such as toilets, sinks (flagged if missing where expected), air conditioners, and kitchen furniture.

- Fire Safety Systems: Detects the presence of smoke detectors and alarm indicators.

- Piping: Distinguishes between sewer and water pipes, whether located in hallways or within apartments, and notes the presence of water meters.

Challenges

- One common issue was overlapping room boundaries in photos; for example, an image taken from the kitchen might also capture parts of another room. This was resolved by calculating which room occupied the majority of the photo area.

- Additionally, telling finishing types apart by wall color was subjective and difficult for annotators. To resolve these cases, the team worked closely with the client to agree on the appropriate annotations.
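The majority-area rule used for overlapping rooms reduces to picking the room with the largest share of the frame. The article does not describe how the areas were measured, so the input here is a hypothetical mapping from each room name to its estimated pixel area.

```python
from typing import Dict, Tuple

def dominant_room(area_by_room: Dict[str, float]) -> Tuple[str, float]:
    """Return the room covering the largest share of the photo, and that share."""
    total = sum(area_by_room.values())
    if total <= 0:
        raise ValueError("area_by_room must contain positive areas")
    room = max(area_by_room, key=area_by_room.get)
    return room, area_by_room[room] / total

# Hypothetical estimates (pixels) for a kitchen photo that also shows part of a hallway.
room, share = dominant_room({"kitchen": 1_450_000, "hallway": 520_000})
print(room, round(share, 2))
```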

Vehicle Model Recognition

In this case, the goal was to classify car brands in images. The annotation project covered 300 car brands across a dataset of 300,000 images, each showing a single car.

Key Issues

- Several challenges stemmed from photo quality and occlusion. For example, other objects often blocked identifying features, and black-and-white footage from roadside cameras complicated classification.

- When a car was only partially visible, experts used the visible body structure to identify the brand. Annotators with specific knowledge about car models were essential for this project.

Weapon Detection in Surveillance Footage

This project involved classifying weapons in low-quality night surveillance images, identifying:

- Presence of a Weapon: Determines whether the subject is carrying a weapon.

- Weapon Type: Classifies the weapon as either a firearm or a cold weapon (such as a knife).

- Threat Posture: Identifies the subject's posture as either "dangerous" (e.g., weapon raised) or "non-dangerous" (e.g., weapon holstered).

Approximately 9,000 images were processed in this classification pipeline.

Challenges

Poor image quality, common in night surveillance footage, made it difficult to recognize weapon details. Additionally, people often obscured one another in crowded scenes.

To address ambiguity, the team and client agreed on a “disputed” label for unclear cases.

Day/Night Photo Classification

For this project, the team conducted detailed classification annotation to label various attributes in each image, such as whether it was taken during the day or night, the presence and intensity of glare, and whether faces were partially or fully obscured.

The dataset included approximately 5,000-10,000 images, organized into 5 main classes. These classes were further broken down based on glare intensity (e.g., low, medium, high) to capture subtle differences.

Challenges:

Aligning on the criteria for glare intensity proved challenging, as interpretations of "strong" versus "weak" glare varied.

Solution:

To achieve consistency, the team requested client-approved reference images as labeling guides. This approach helped streamline the process, although periodic adjustments were necessary to ensure alignment.

The Importance of Correctly Annotated Data for Image Classification

The quality of the annotated data is a crucial factor that can significantly affect the performance of image classification models. Correctly labeled data ensures that the model learns the right associations between image features and their corresponding labels. Discrepancies in data labeling can lead to:

- Misclassification: Incorrect predictions due to ambiguous or inaccurate labels.

- Overfitting: The model may memorize the training data, including its labeling errors, instead of learning patterns that generalize to unseen data, which results in poor performance.

Algorithms and Models

Several algorithms are utilized for image classification, each with its strengths and weaknesses. Here’s a brief overview:

1. Traditional Algorithms

- k-Nearest Neighbors (k-NN): A simple algorithm that classifies an image by the majority label of its k closest neighbors in feature space.

- Support Vector Machines (SVM): A powerful algorithm that finds the hyperplane separating the classes with the widest possible margin.
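k-NN is simple enough to write in a few lines. This sketch assumes that images have already been turned into feature vectors; the 2-D vectors below are made up for illustration. It uses Euclidean distance with k = 3. An odd k avoids ties in two-class problems.

```python
import numpy as np

def knn_predict(train_x: np.ndarray, train_y: np.ndarray, query: np.ndarray, k: int = 3):
    """Classify `query` by majority vote among its k nearest training vectors
    (Euclidean distance in feature space)."""
    dists = np.linalg.norm(train_x - query, axis=1)
    nearest = np.argsort(dists)[:k]
    labels, counts = np.unique(train_y[nearest], return_counts=True)
    return labels[np.argmax(counts)]

# Hypothetical 2-D feature vectors (e.g., outputs of a feature extractor).
train_x = np.array([[0.1, 0.2], [0.2, 0.1], [0.15, 0.25],
                    [0.9, 0.8], [0.8, 0.9], [0.85, 0.95]])
train_y = np.array(["cat", "cat", "cat", "dog", "dog", "dog"])

print(knn_predict(train_x, train_y, np.array([0.2, 0.2])))    # cat
print(knn_predict(train_x, train_y, np.array([0.75, 0.85])))  # dog
```

Because k-NN stores the whole training set and compares every query against it, prediction cost grows with dataset size. This is one reason it is usually paired with compact feature vectors rather than raw pixels.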

2. Deep Learning Algorithms

- Convolutional Neural Networks (CNNs): The backbone of modern image classification, CNNs automatically learn features from images through multiple layers.

- Transfer Learning: Involves taking a pre-trained model (e.g., VGG16, ResNet) and fine-tuning it for a specific classification task, dramatically reducing training time and resource requirements.

Applications of Image Classification

The impact of image classification spans multiple industries, transforming how we interact with technology. Here are some notable applications:

Healthcare

In medical imaging, image classification assists in diagnosing diseases by analyzing X-rays, MRIs, and CT scans. For example, a deep learning model trained on a dataset of 58,000 chest X-rays was able to achieve an accuracy of 94% in detecting pneumonia, outperforming traditional methods. This advancement enables healthcare professionals to diagnose conditions earlier, leading to timely and effective interventions.

Retail

Image classification is pivotal in enabling visual search capabilities in the retail industry. A study by eBay found that image classification models improved the accuracy of visual search by 30%, allowing customers to find similar products based on uploaded images. This capability has led to a significant increase in customer engagement and conversion rates, proving the effectiveness of classification tasks in enhancing the shopping experience.

Social Media and Content Moderation

Social media platforms employ image classification to moderate content and enforce community guidelines. For example, Facebook uses automated classifiers, including image classification, to identify and remove content that violates its policies. The company has reported that roughly 95% of the hate speech it removes is detected proactively, before users report it. This technology helps maintain a safe online environment, enforcing platform policies while reducing the burden on human moderators.

Wildlife Conservation

Image classification helps in monitoring wildlife populations through camera trap images. A project using image classification algorithms to analyze over 1 million camera trap images was able to identify and classify more than 600,000 individual animal occurrences. This data is crucial for conservation efforts, allowing researchers to track species populations and biodiversity changes effectively.

Conclusion

Image classification stands as a cornerstone of machine learning and artificial intelligence, enabling machines to interpret and categorize visual data. From its foundational concepts and methodologies to its far-reaching applications, image classification continues to evolve, driving innovation across various industries.

As we delve deeper into this field, understanding the nuances of data preparation, algorithm selection, and the importance of quality annotations will become increasingly essential for harnessing the full potential of image classification. By doing so, we can unlock new opportunities for advancement and transformation in technology, ultimately reshaping our interaction with the visual world.