Human-in-the-Loop (HITL) is an approach to developing artificial intelligence systems in which human participation is an integral part of the process. Rather than relying entirely on automation, HITL systems use human judgment to handle tasks that require contextual understanding or involve complex ethical considerations.

How It Works

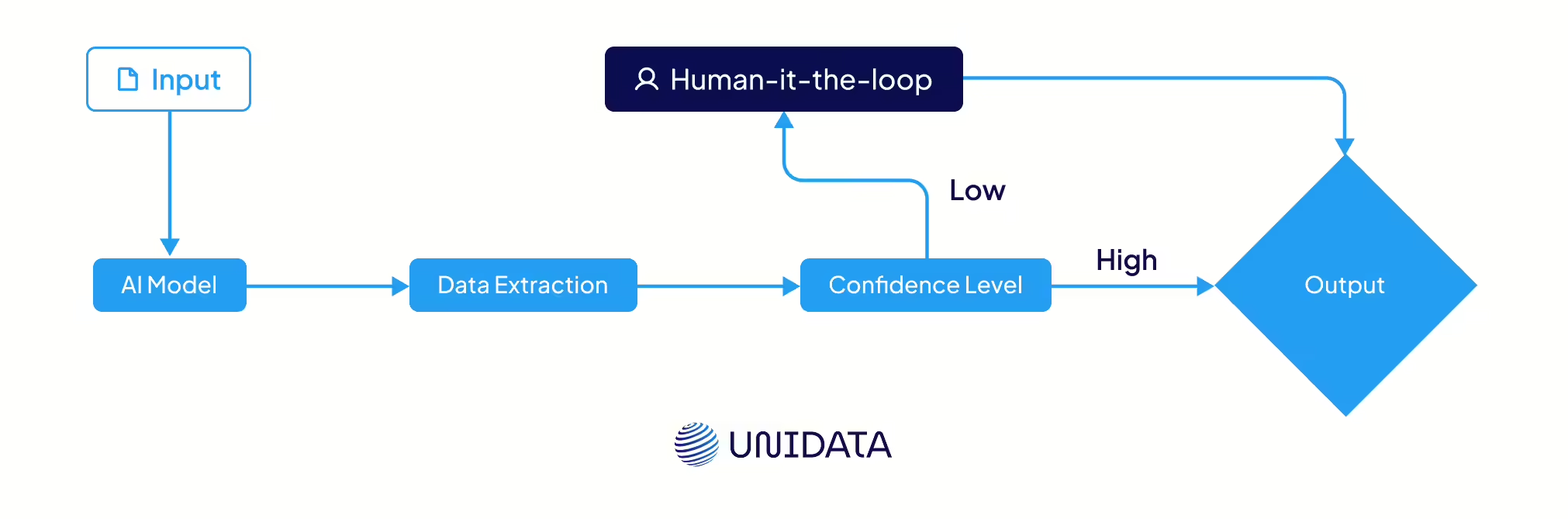

The essence of HITL is a continuous feedback loop in which humans and machines work in tandem. For example:

- The algorithm receives data and generates a prediction — perhaps classifying a tech-support request or recognizing an object in an image.

- If the model’s confidence is below a predefined threshold, the system automatically routes the result to a human expert for review.

- The human checks the output, corrects it when necessary, and returns it. These validated examples then become ground-truth data for further model training.
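The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the `Prediction` and `ReviewLoop` names, the 0.80 threshold, and the ticket labels are all assumptions made for the example.

```python
from dataclasses import dataclass, field

CONFIDENCE_THRESHOLD = 0.80  # assumed value; tune it for your own use case


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model's probability for `label`, in [0, 1]


@dataclass
class ReviewLoop:
    threshold: float = CONFIDENCE_THRESHOLD
    review_queue: list = field(default_factory=list)  # predictions awaiting a human
    ground_truth: list = field(default_factory=list)  # validated (item_id, label) pairs

    def route(self, pred: Prediction) -> str:
        """Accept confident predictions; queue the rest for human review."""
        if pred.confidence < self.threshold:
            self.review_queue.append(pred)
            return "human_review"
        return "auto_accept"

    def record_review(self, pred: Prediction, human_label: str) -> None:
        """Store the expert's answer as a training example for the next round."""
        self.review_queue.remove(pred)
        self.ground_truth.append((pred.item_id, human_label))


loop = ReviewLoop()
confident = Prediction("ticket-1", "billing", 0.97)
uncertain = Prediction("ticket-2", "billing", 0.55)

print(loop.route(confident))  # auto_accept
print(loop.route(uncertain))  # human_review

# The expert corrects the uncertain prediction.
loop.record_review(uncertain, human_label="technical_issue")
print(loop.ground_truth)  # [('ticket-2', 'technical_issue')]
```

In a real system the `ground_truth` list would feed a retraining job, and the queue would live in a database or task tracker rather than in memory.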

This collaborative approach increases trust and strengthens system reliability. While HITL doesn’t make an AI model’s inner workings fully transparent, it does mean that final decisions can be monitored and corrected. This is especially important in high-stakes domains such as healthcare, finance, and law, where mistakes or biased automated decisions carry significant risk.

In short: HITL = an AI model + human involvement and evaluation + fine-tuning informed by expert corrections.

Why Do We Need HITL?

Artificial intelligence systems are powerful, but they’re not infallible. They can make mistakes due to biased data, ambiguous scenarios, or entirely new situations the model wasn’t trained on. The Human-in-the-Loop approach helps offset these risks, acting as a safety net for AI-driven decisions. HITL ensures that high-stakes outcomes are grounded in common sense, ethics, and context — qualities algorithms often lack.

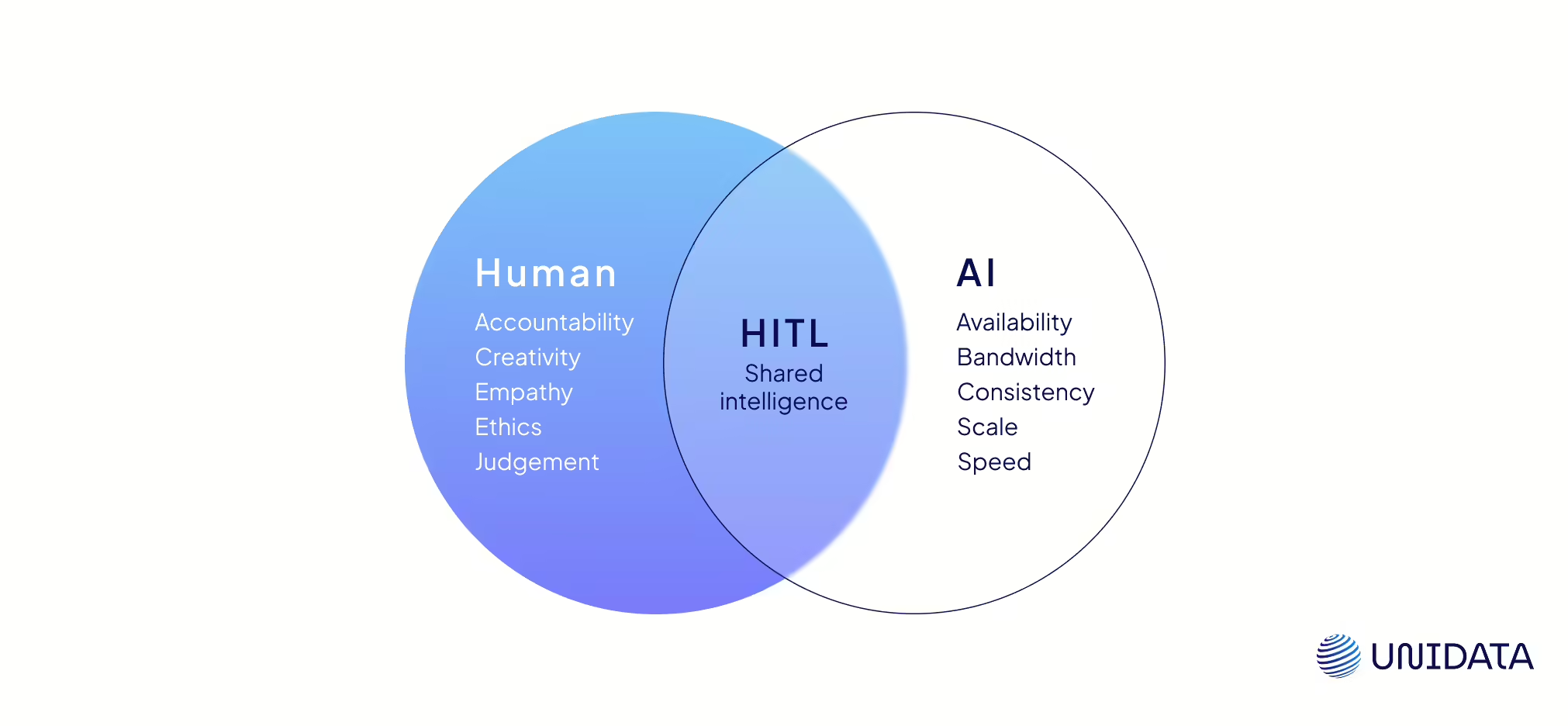

Combining the Strengths of Humans and Machines

HITL brings together the speed, scalability, and computational power of AI with the flexibility, judgment, ethical reasoning, and contextual understanding of humans. When algorithms reach the limits of what they can reliably decide, a human steps in to evaluate the situation and make the call. As a result, even the most complex cases rest not only on data but also on human expertise.

Strengthening Trust and Adoption

Implementing HITL has a direct impact on how much people trust AI. When users know that a competent human reviews or validates final decisions, they are far more willing to rely on the technology. HITL helps position AI not as a thoughtless replacement for human labor, but as a powerful amplifier of human expertise and productivity.

The Role of HITL in Regulated Industries

In high-risk domains such as healthcare, finance, or autonomous transport, errors made by AI can have critical consequences. That’s why regulators increasingly require human oversight of AI-driven decision-making. The EU AI Act, adopted in 2024, is a prime example: it requires that “high-risk AI systems” be designed so that humans can effectively oversee them and intervene when needed.

How Human-in-the-Loop Works in Machine Learning

In machine learning, the human-in-the-loop concept is implemented as an interactive, iterative cycle of collaboration between humans and the model. Instead of a “train once and deploy blindly” approach, HITL embeds human oversight into multiple critical stages of AI development and operation. A typical workflow with human involvement might look like this:

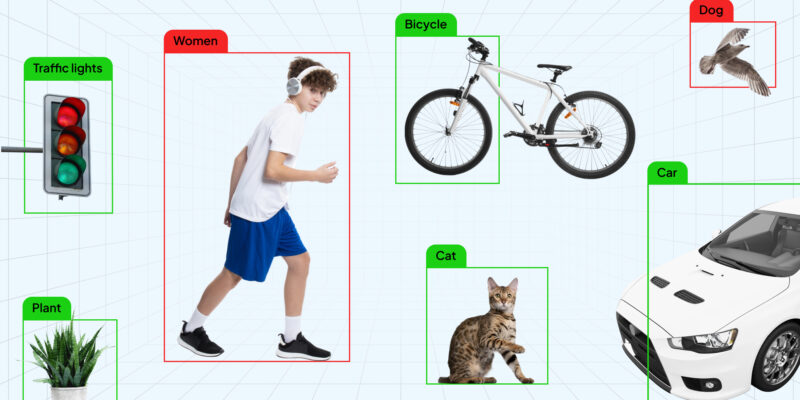

1. Data Labeling

Human annotators start by manually labeling raw data to create the training set. Experts assign correct labels to images (what’s shown in them), mark object boundaries, transcribe audio, and more. These labeled inputs — paired with their expected outputs — are what the algorithm learns from. At this stage, human expertise ensures the quality and accuracy of the underlying data: the model is trained from the very beginning on precise, relevant examples.

2. Model Training

Developers then train the machine learning model on the human-labeled dataset. The algorithm analyzes the manually applied labels and identifies patterns and relationships across the dataset. Human participation continues here as well — data scientists and ML engineers monitor training progress, tune hyperparameters, select features, track performance metrics, and adjust the process when needed. In other words, people guide the training to ensure the model learns correctly and avoids obvious mistakes. The outcome of this step is an initial model version trained on human-provided labels.

3. Testing and Feedback

Once trained, the model begins making predictions on new data, and humans step back in to evaluate and correct them. Specialists review the model’s outputs, especially where the algorithm reports low confidence or where domain experience suggests it is likely to fail. Critical or borderline decisions are examined and validated by humans. When inaccuracies are found, the expert provides the correct label or adjusts the model’s conclusion.

This stage implements active learning: the system identifies the examples it is least confident about and requests the human’s authoritative answer. With these corrections (essentially new labels), the model is fine-tuned or retrained to incorporate the updated information. The cycle of prediction → human evaluation → model improvement repeats until performance reaches the desired threshold.
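The selection step of active learning can be illustrated with least-confidence sampling, one common uncertainty-sampling strategy. The sketch below is an assumption about how such a selector might look, not a prescribed method; the function name `least_confident` and the sample probabilities are made up for the example.

```python
def least_confident(probabilities: dict[str, list[float]], k: int) -> list[str]:
    """Return the ids of the k items whose top class probability is lowest."""
    # Sorting by the highest class probability puts the items the model
    # is least sure about first (least-confidence sampling).
    ranked = sorted(probabilities, key=lambda item_id: max(probabilities[item_id]))
    return ranked[:k]


# Hypothetical model outputs: class probabilities for four unlabeled images.
model_outputs = {
    "img-1": [0.98, 0.01, 0.01],
    "img-2": [0.40, 0.35, 0.25],
    "img-3": [0.70, 0.20, 0.10],
    "img-4": [0.51, 0.48, 0.01],
}

to_label = least_confident(model_outputs, k=2)
print(to_label)  # ['img-2', 'img-4']
```

The two selected items are sent to a human annotator, and their answers are added to the training set before the next round of fine-tuning.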

This iterative loop keeps humans involved throughout the training process. Unlike fully automated pipelines, human-in-the-loop allows prompt intervention whenever the model encounters ambiguous or unusual cases: a human steps in, provides the right answer, and prevents the system from reinforcing incorrect behavior. Step by step, this partnership between people and algorithms produces an increasingly accurate and capable model.

Comparison of HITL, HOTL, and Full Automation

It is standard to distinguish three primary modes of interaction between humans and automated systems: Human-in-the-Loop, Human-on-the-Loop, and Human-out-of-the-Loop.

Interaction Modes

| Approach | Human Role |
|---|---|
| Human-in-the-Loop (HITL) | A human is directly involved in every cycle of the system’s decision-making or action process. Automation cannot proceed without explicit human input or approval. In practice, the system does not operate autonomously: any critical outcome must be reviewed by a human. Example: A recommendation engine suggests options, but a specialist makes or confirms the final choice. This mode ensures complete human control, making it suitable for scenarios where the cost of error is too high to rely on machine decisions alone. |
| Human-on-the-Loop (HOTL) | A human oversees an autonomous system and intervenes only when necessary. The AI can act independently, but a human has real-time monitoring capabilities and can override or adjust the system’s behavior at any moment. Here, the human is “on standby,” ensuring overall direction and safety without micromanaging every step. Example: An unmanned aerial vehicle conducts autonomous patrols, while an operator supervises and can take full control if required. This mode preserves significant autonomy while still providing a layer of human oversight. |
| Human-out-of-the-Loop | The human is fully removed from the process: the system operates autonomously from start to finish, with no direct human involvement. All decisions are generated automatically based on the algorithm’s logic and available data. This mode is used when rapid response is essential or when trust in the algorithm is high enough that human supervision is unnecessary. Example: Automatic emergency braking in a car, which must activate faster than any human could react. |

Choosing between HITL, HOTL, and full autonomy always depends on the level of risk, the consequences of error, and the required degree of human control.
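The difference between the three modes comes down to who has the last word before an action is executed. The following sketch makes that explicit; the `OversightMode` enum, the `decide` function, and the callback names are hypothetical and exist only to illustrate the control flow.

```python
from enum import Enum
from typing import Callable


class OversightMode(Enum):
    HUMAN_IN_THE_LOOP = "hitl"
    HUMAN_ON_THE_LOOP = "hotl"
    HUMAN_OUT_OF_THE_LOOP = "out"


def decide(
    proposed_action: str,
    mode: OversightMode,
    human_approves: Callable[[str], bool] = lambda action: False,
    human_vetoes: Callable[[str], bool] = lambda action: False,
) -> bool:
    """Return True if the proposed action is executed under the given mode."""
    if mode is OversightMode.HUMAN_IN_THE_LOOP:
        # Nothing happens without explicit human approval.
        return human_approves(proposed_action)
    if mode is OversightMode.HUMAN_ON_THE_LOOP:
        # The system proceeds unless the supervising human steps in.
        return not human_vetoes(proposed_action)
    # Human-out-of-the-loop: the system acts on its own.
    return True


# With no human response, HITL blocks the action while HOTL lets it through.
print(decide("approve_loan", OversightMode.HUMAN_IN_THE_LOOP))      # False
print(decide("adjust_route", OversightMode.HUMAN_ON_THE_LOOP))      # True
print(decide("emergency_brake", OversightMode.HUMAN_OUT_OF_THE_LOOP))  # True
```

Note the defaults: under HITL, silence from the human means "no"; under HOTL, silence means "go ahead".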

Advantages and Limitations of HITL

Integrating human feedback into AI systems provides a number of tangible benefits:

Higher Accuracy and Reliability

Human oversight helps identify AI errors. By reviewing and correcting outputs, humans fix misclassifications or anomalous results. Over time, this leads to more stable and dependable AI performance.

Reduced Bias

Humans can spot and correct biased decisions produced by AI systems. A model only sees the data it was trained on, and that data often reflects historical imbalances. A human can detect unfair patterns — for example, a hiring algorithm disproportionately rejecting applications from certain groups — and adjust the system accordingly. While humans are not free from bias either, this additional layer of review helps improve the fairness of AI-driven decisions.

Improved Transparency and Explainability

HITL makes AI decisions easier to review and explain. If a credit application is denied by an automated system, a specialist can review the case, check the factors behind the decision, and clearly communicate the rationale to the applicant. This approach helps mitigate the “black-box” problem and makes the overall decision process more transparent, even when the model itself remains opaque.

Ethical Judgment and Safety

Some decisions carry moral or life-critical implications that AI cannot fully grasp. For example, an AI system might detect signs of cancer on a medical scan, but a doctor, the human in the loop, will incorporate patient history and additional clinical context before recommending treatment. Human judgment helps keep high-impact decisions ethical and safe.

Greater User Trust and Adoption

When people know that AI outcomes are supervised by humans, their trust increases. HITL signals that humans stay in control. For instance, a customer-service chatbot that escalates complex inquiries to a human operator demonstrates the company’s commitment to accuracy and care — leading to higher satisfaction than an entirely automated system that might produce incorrect or unhelpful responses.

These advantages explain why HITL approaches are widely regarded as best practice in today’s AI deployments. However, HITL also introduces challenges that must be managed effectively:

Scalability and Cost

Human involvement naturally limits scalability. Unlike fully automated systems, HITL workflows can become bottlenecks if the human component cannot keep pace with data volume or processing speed. Organizations must carefully determine where human input is essential and optimize workflows to use expert time as efficiently as possible.
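One practical way to keep reviewers from becoming a bottleneck is to derive the escalation threshold from the review capacity you actually have. The sketch below assumes a simple setup in which items with confidence strictly below the threshold go to a human; the function name and the sample numbers are illustrative.

```python
def threshold_for_budget(confidences: list[float], review_capacity: int) -> float:
    """Pick a threshold so that at most `review_capacity` items fall strictly below it."""
    if review_capacity <= 0:
        return 0.0  # nothing can be reviewed, so nothing is escalated
    ranked = sorted(confidences)
    if review_capacity >= len(ranked):
        return float("inf")  # enough capacity to review every item
    # Items strictly below the (capacity + 1)-th lowest confidence are escalated.
    return ranked[review_capacity]


# Hypothetical confidences for one batch; the team can review two items.
batch = [0.95, 0.40, 0.88, 0.62, 0.99, 0.71]
threshold = threshold_for_budget(batch, review_capacity=2)
escalated = [c for c in batch if c < threshold]

print(threshold)  # 0.71
print(escalated)  # [0.4, 0.62]
```

A budget-driven threshold trades coverage for throughput, so it should be combined with hard rules for cases that must always be reviewed regardless of capacity.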

Human Error and Inconsistency

Humans make mistakes too: they may mislabel data or overlook details. They also disagree with one another, and different annotators may interpret the same image differently. Such inconsistencies introduce noise that can confuse the model. This is why quality assurance for human work is a critical part of HITL design, including training, clear guidelines, and cross-review processes.
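Annotator inconsistency can be measured rather than guessed at. A common metric is Cohen's kappa, which compares the observed agreement between two annotators with the agreement expected by chance. The implementation below is a small self-contained sketch with made-up labels; libraries such as scikit-learn offer an equivalent function.

```python
from collections import Counter


def cohen_kappa(labels_a: list[str], labels_b: list[str]) -> float:
    """Cohen's kappa for two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both annotators must label the same non-empty set of items.")
    n = len(labels_a)
    # Share of items on which the two annotators agree.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance, from each annotator's label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical labels from two annotators for the same six images.
annotator_1 = ["cat", "cat", "dog", "dog", "cat", "dog"]
annotator_2 = ["cat", "dog", "dog", "dog", "cat", "cat"]

kappa = cohen_kappa(annotator_1, annotator_2)
print(round(kappa, 2))  # 0.33
```

A value of 1 means perfect agreement and 0 means agreement no better than chance. Low values usually point to unclear guidelines rather than careless annotators.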

Best Practices for Implementing HITL

For a Human-in-the-Loop system to be effective, it’s not enough to simply insert a person into the workflow — their involvement must be purposeful and productive. Here are several key recommendations:

Define Clear Triggers for Human Intervention

Set explicit confidence thresholds below which the model must defer to a human reviewer. You can also add rule-based criteria, for example requiring verification for every case that involves sensitive data and for any decision with significant legal or financial implications.
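Such triggers are easiest to audit when they are written as explicit rules that also record why a case was escalated. The sketch below shows one possible shape; the `Case` fields, the 0.85 confidence floor, and the 10,000 amount limit are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Case:
    confidence: float                      # model confidence in [0, 1]
    contains_sensitive_data: bool = False
    amount: float = 0.0                    # monetary value at stake


def review_reasons(
    case: Case,
    min_confidence: float = 0.85,          # assumed threshold
    max_auto_amount: float = 10_000.0,     # assumed financial limit
) -> list[str]:
    """Return every reason a case needs human review; an empty list means none."""
    reasons = []
    if case.confidence < min_confidence:
        reasons.append("low_confidence")
    if case.contains_sensitive_data:
        reasons.append("sensitive_data")
    if case.amount > max_auto_amount:
        reasons.append("high_financial_impact")
    return reasons


print(review_reasons(Case(confidence=0.97)))
# []
print(review_reasons(Case(confidence=0.97, amount=50_000.0)))
# ['high_financial_impact']
print(review_reasons(Case(confidence=0.60, contains_sensitive_data=True)))
# ['low_confidence', 'sensitive_data']
```

Returning the reasons rather than a single boolean makes it possible to report later which trigger fires most often.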

Provide a Simple, User-Friendly Interface

The interface used by human experts should be intuitive, enabling them to review data quickly, apply corrections, and leave comments. The easier and faster the workflow, the higher the productivity — and the fewer errors introduced due to human factors.

Regularly Analyze Outcomes and Performance

Monitor what types of errors the model makes, which cases are most frequently escalated for human review, and how much time experts spend correcting them. This analysis helps identify which components of the system require further optimization.
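These metrics can be computed from a simple review log. The example below is a rough sketch: the log format, field names, and sample records are invented for illustration, and a production system would read them from its own storage.

```python
from collections import Counter
from statistics import mean

# Hypothetical review log: one record per processed case.
review_log = [
    {"category": "billing", "escalated": True, "corrected": True, "review_seconds": 40},
    {"category": "billing", "escalated": True, "corrected": False, "review_seconds": 60},
    {"category": "login", "escalated": False, "corrected": False, "review_seconds": 0},
    {"category": "refund", "escalated": True, "corrected": True, "review_seconds": 20},
    {"category": "login", "escalated": False, "corrected": False, "review_seconds": 0},
]


def summarize(log: list[dict]) -> dict:
    """Compute basic HITL health metrics from a review log."""
    if not log:
        raise ValueError("The review log is empty.")
    escalated = [record for record in log if record["escalated"]]
    return {
        # Share of all cases that were sent to a human.
        "escalation_rate": len(escalated) / len(log),
        # Share of escalated cases where the human changed the model's output.
        "correction_rate": (
            sum(r["corrected"] for r in escalated) / len(escalated) if escalated else 0.0
        ),
        # Average expert time spent per escalated case.
        "mean_review_seconds": (
            mean([r["review_seconds"] for r in escalated]) if escalated else 0.0
        ),
        # Categories ordered by how often they are escalated.
        "escalations_by_category": Counter(r["category"] for r in escalated).most_common(),
    }


summary = summarize(review_log)
print(summary["escalation_rate"])          # 0.6
print(summary["mean_review_seconds"])      # 40
print(summary["escalations_by_category"])  # [('billing', 2), ('refund', 1)]
```

A high escalation rate combined with a low correction rate suggests the threshold is too strict and experts are reviewing cases the model already handles well.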

Conclusion

Human-in-the-Loop is a reminder that the human mind remains central even in an era of pervasive automation. Rather than placing humans and AI on opposite sides of the ring, HITL brings their strengths together. Machines excel at computation, pattern detection, and scaling, but only humans can contribute empathy, moral reasoning, intuition, and accountability. By combining these strengths, we create systems that are both powerful and trustworthy.

So, what is Human-in-the-Loop? It’s more than a technical term — it’s a philosophy of technology design. It’s the idea that every intelligent system should keep a human at its core: either guiding and training it, or supervising and taking responsibility for its actions. This approach helps AI systems become more accurate, fair, and safe. And as these systems grow more complex, the importance of HITL will only increase — the smarter the machine, the more thoughtful our guidance must be.

Human-in-the-Loop ensures that AI progress remains aligned with human values and human judgment, keeping technology working for people rather than against them. That is why HITL stands today as a foundational principle of responsible AI, and likely a cornerstone of successful AI integration in society for many years to come.