Imagine you’re tasked with organizing a vast library, where each book represents a unique piece of data. Without a clear system in place, finding the right book when needed becomes a challenge. Data augmentation works similarly - transforming and organizing data to make it more diverse and useful for machine learning models, ensuring they can generalize better.

In this guide, we’ll walk you through the essentials of data augmentation, covering the techniques, methods, tools, and real-world use cases. We’ll also explore how it helps enhance model performance. According to this research, leveraging data augmentation significantly improved ML model performance, making it a powerful tool for boosting accuracy and robustness across various machine learning tasks.

What is Data Augmentation?

Data augmentation is the process of increasing the variety of a training dataset without actually collecting new data. This is typically done by applying a series of transformations that change the data slightly, ensuring the core information remains unchanged but appears new to the model being trained.

Data augmentation is like giving your training data a makeover to make it more diverse, without actually collecting new samples. Imagine you’re teaching a child to recognize apples. If you only show them red apples from one angle, they might struggle to recognize a green apple or one viewed from the side. But if you show them apples of different colors, sizes, and angles, they’ll learn to recognize apples in any situation.

- For images, these transformations might include rotation, flipping, scaling, or color variation in the realm of images.

- For textual data, techniques might involve synonym replacement, sentence shuffling, or translation cycles.

The main goal is to create a richer, more varied dataset, so the model learns patterns instead of memorizing specific examples. This helps prevent overfitting and improves its ability to work well with new, unseen data.

When to Use Data Augmentation?

It’s important to understand when data augmentation can truly enhance a machine learning project and when it might fall short—so here’s a summary of situations where its use is highly effective, as well as cases where it may not offer much benefit.

| When to Use Data Augmentation | When Not to Use |

|---|---|

| Dataset is small, and more varied data is needed. (e.g., augmenting a limited set of images with rotations and flips for a computer vision task) | Dataset is already large and diverse, representing a wide variety of scenarios. (e.g., a comprehensive dataset for image recognition that already includes a wide range of variations) |

| Model is at risk of overfitting due to limited samples. (e.g., using synonym replacement in text data to create additional training examples) | There's a risk of introducing misleading variations that can degrade model performance. (e.g., distorting text data to the point where it no longer maintains its original meaning) |

| Improving model robustness and generalization is a goal. (e.g., adding background noise to audio files for a speech recognition system) | Data characteristics or problem domain does not align with standard augmentation techniques. (e.g., augmenting financial time-series data where temporal relationships are crucial and easily disrupted) |

| Increasing model accuracy by exposing it to a wider range of scenarios. (e.g., altering lighting conditions in images to prepare a model for various environments) | The model effectively generalizes from training to unseen data without additional data variations. (e.g., a well-performing NLP model trained on a large, varied corpus of text) |

Augmented Data vs. Synthetic Data

While data augmentation and synthetic data generation both aim to improve machine learning model performance, they differ in approach and application. Augmented data refers to variations of existing data, created through transformations like rotation, cropping, or text paraphrasing. These modifications help models generalize better without introducing entirely new samples. In contrast, synthetic data is artificially generated using algorithms such as Generative Adversarial Networks (GANs) or Variational Autoencoders (VAEs), creating entirely new data points that mimic real-world distributions.

Augmented data is particularly useful when real data is available but limited in variety. For example, in computer vision, flipping and rotating an image can help a model recognize objects from different angles. However, augmentation has its limits—it can only produce variations of what already exists and cannot generate entirely new scenarios. Synthetic data, on the other hand, is valuable in cases where collecting real data is impractical or expensive. For instance, self-driving car simulations use synthetic environments to train models for rare but critical events, such as accidents or extreme weather conditions.

Both techniques have advantages and drawbacks. Augmented data is often more reliable because it maintains real-world characteristics, while synthetic data may introduce biases if the generation process does not accurately reflect real distributions. Choosing between the two depends on the specific machine learning application, the availability of real data, and the need for diversity in the dataset.

Where to Find Free Datasets: A Beginner’s Guide

Learn more

Challenges and Limitations

Implementing data augmentation effectively comes with its own set of challenges and limitations, including:

- Balancing augmentation levels: Finding the optimal amount of augmentation is crucial. Too little may not make a noticeable difference, while too much can lead to learning artificial noise rather than useful patterns.

- Selection of techniques: Not all augmentation techniques are suitable for every type of data. Selecting the wrong methods can lead to ineffective training or model confusion.

- Increased computational resources: Augmenting data can significantly increase the need for computational resources and extend training times, impacting project timelines and costs.

- Risk of distorting data: There's a fine line between adding useful variability and distorting the data to the point where it no longer represents real-world scenarios.

Ethical Considerations

The use of data augmentation, particularly in generating synthetic data, also introduces several ethical considerations:

- Bias introduction: You need to ensure that augmentation techniques do not inadvertently introduce or perpetuate bias within the dataset.

- Privacy concerns: When augmenting sensitive information, it's essential to ensure that the privacy of individuals is not compromised by the generation of synthetic data that could be traced back to real individuals.

- Transparency and fairness: Ethical data augmentation practices require transparency in how data is augmented and fairness in the representation of diverse groups within the augmented data.

Data Augmentation Techniques

Data augmentation techniques vary widely across different types of data, such as images, text, audio, and tabular data. Each type requires specific methods to effectively increase the dataset's diversity without losing the essence of the data. Here's a rundown of some common techniques used for different data types:

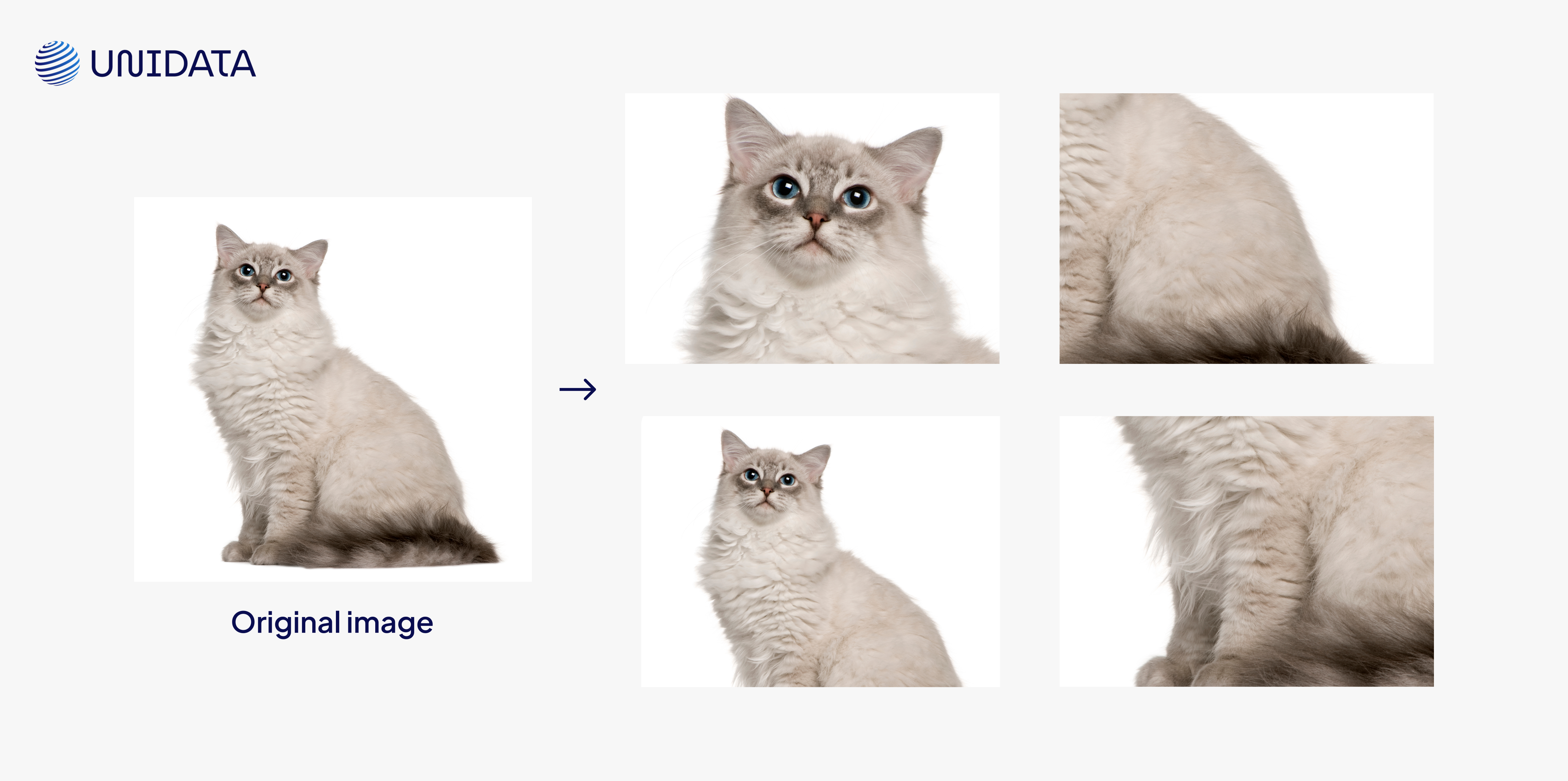

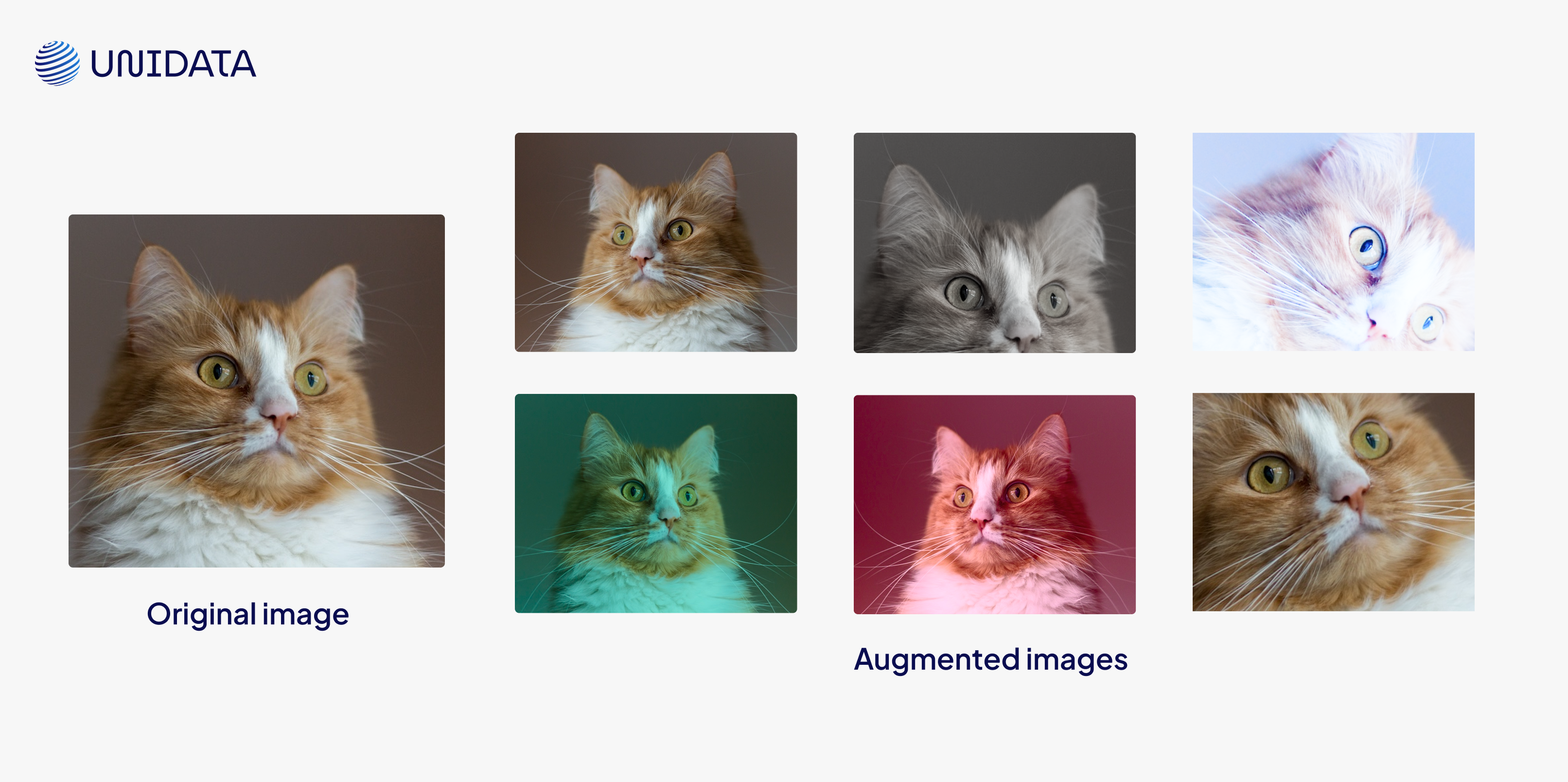

Image Data Augmentation

- Rotation: Rotating the image by a certain angle to simulate the effect of viewing the object from different perspectives.

- Cropping: Cutting out parts of the image to focus on certain regions, helping the model to focus on specific features.

- Flipping: Mirroring the image either horizontally or vertically to increase dataset variability.

- Zooming: Adjusting the image size by zooming in or out, simulating objects being closer or farther away.

- Noise injection: Adding random noise to images to simulate imperfect real-world conditions and improve model robustness.

- Perspective changes: Modifying the viewpoint or angle from which an object is seen, simulating a 3D perspective shift.

- Color transformation: Altering the color properties of images (such as brightness, contrast, saturation) to make the model more robust to color variations.

Text Data Augmentation

- Synonym replacement: Replacing words in sentences with their synonyms to slightly change the sentences while retaining the original meaning.

- Original: “The quick brown fox jumps over the lazy dog.”

- Augmented: “The speedy brown fox leaps over the lazy dog.”

- Sentence shuffling: Rearranging the sentences in a paragraph to introduce variability without altering the overall content.

- Original paragraph: "Machine learning models require diverse datasets. Data augmentation provides variety to help models generalize better. Effective augmentation techniques boost performance significantly."

- Augmented paragraph (sentence shuffled): "Effective augmentation techniques boost performance significantly. Machine learning models require diverse datasets. Data augmentation provides variety to help models generalize better."

- Back translation: Translating text to another language and then back to the original language to introduce linguistic variations.

- Original sentence: “Natural language processing is transforming digital experiences.”

- Translated to French and back to English: “Natural language processing revolutionizes digital interactions.”

- Text generation: Using advanced models to generate new text samples based on the existing corpus.

- Prompt (original corpus sentence): “Data annotation is essential for training AI.”

- Generated augmentation example: “Accurate data labeling significantly improves AI training outcomes.”

- NLP tools: Utilizing specialized tools and libraries designed for augmenting textual data.

Various specialized tools and libraries are designed explicitly for augmenting text data efficiently, simplifying the augmentation process for developers.

Recommended Tools:

- NLPAug – an open-source Python library offering comprehensive augmentation methods, including synonym replacement, random insertion, and back translation.

- TextAttack – facilitates adversarial text generation and augmentation tailored for NLP robustness.

Example:

# Synonym replacement with NLPAug

import nlpaug.augmenter.word as naw

aug = naw.SynonymAug(aug_src='wordnet')

original_text = "Artificial intelligence is reshaping industries globally."

augmented_text = aug.augment(original_text)

print("Original:", original_text)

print("Augmented:", augmented_text)

Possible output:

Original: Artificial intelligence is reshaping industries globally. Augmented: Artificial intelligence is transforming industries globally.

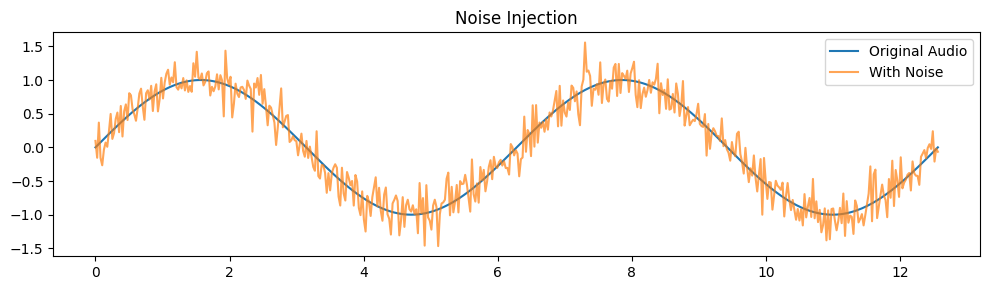

Audio Data Augmentation

- Noise injection: Adding background noise (e.g., traffic, crowd noise) to clean audio samples to improve the model's noise-handling capabilities.

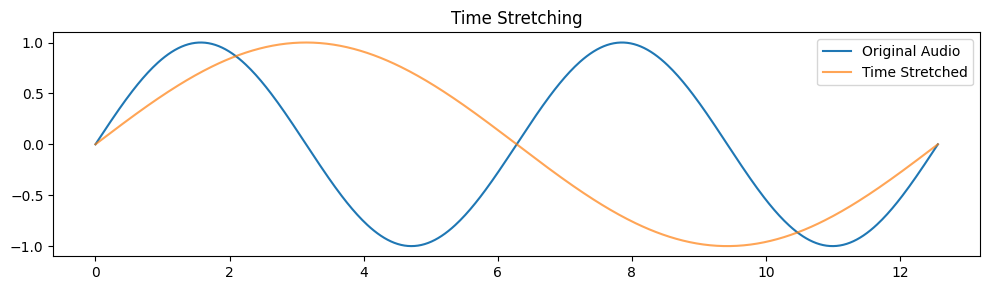

- Time stretching: Altering the speed of the audio clip without changing its pitch, simulating faster or slower speech.

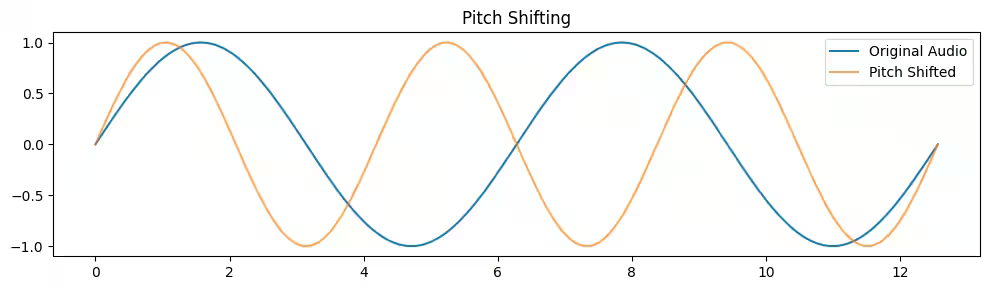

- Pitch shifting: Changing the pitch of the audio, simulating different vocal characteristics.

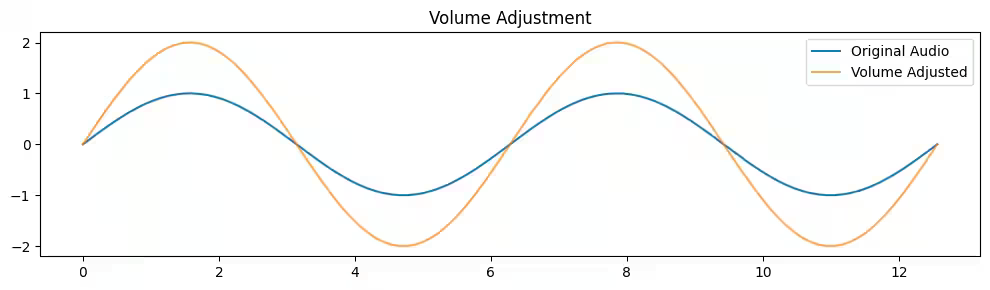

- Volume adjustment: Varying the audio's volume to prepare the model for different recording levels.

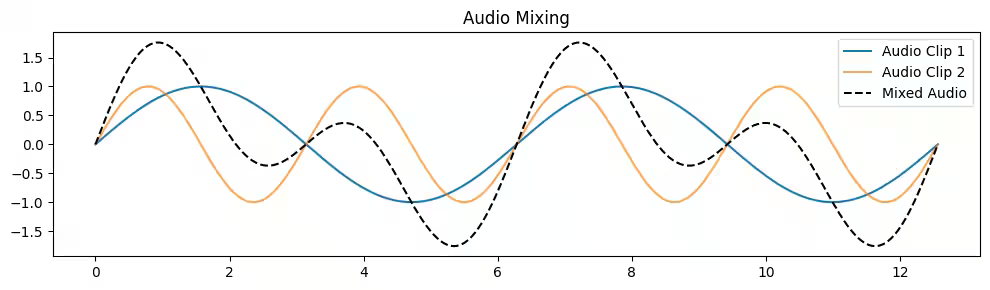

- Audio mixing: Combining different audio clips to create complex soundscapes or overlay speech with background noises.

Advanced Techniques

- Feature engineering: Creating new features from existing ones to add more information and variability to the dataset.

- SMOTE (Synthetic Minority Over-sampling Technique): Generating synthetic samples in feature space to balance class distribution in datasets.

- Random perturbation: Adding small random changes to numerical features to introduce variability.

- GANs (Generative Adversarial Networks): Using GANs to generate new, synthetic examples of data that are indistinguishable from real data, and applicable to images, text, and more.

Data Augmentation: Step-by-Step

1. Data Preparation and Analysis

Goal: Understand the dataset’s structure, biases, and limitations.

Steps:

- Exploratory Data Analysis (EDA): Visualize data distributions (e.g., class imbalance, image resolution).

- Handle Missing Data: Clean corrupted or incomplete samples.

- Normalize/Standardize: Preprocess data (e.g., scale pixel values to [0, 1] for images).

- Baseline Performance: Train a model on raw data to establish a performance benchmark.

2. Select Augmentation Techniques

Goal: Choose transformations that align with the problem domain and data type.

Common Techniques by Data Type:

| Data Type | Techniques |

|---|---|

| Images | Rotation, flipping, cropping, color jittering, noise injection. |

| Text | Synonym replacement, back-translation, random deletion. |

| Audio | Time stretching, pitch shifting, adding background noise. |

| Tabular | SMOTE (Synthetic Minority Oversampling), Gaussian noise. |

Key Considerations:

- Preserve Labels: Ensure transformations don’t alter the ground truth (e.g., rotating a "cat" image still labels it as "cat").

- Domain Relevance: Use medical image rotation for X-rays but avoid extreme distortions that misrepresent anatomy.

3. Apply Transformations

Goal: Generate augmented data programmatically or manually.

Tools and Workflows:

- Image Augmentation: Use libraries like Albumentations or TensorFlow’s ImageDataGenerator.

- Text Augmentation: Tools like NLPAug for synonym replacement.

- Automated Pipelines: Frameworks like AutoAugment (Google) learn optimal augmentation policies.

Example Code (Image Rotation with Python):

from tensorflow.keras.preprocessing.image import ImageDataGenerator datagen = ImageDataGenerator(rotation_range=30) augmented_images = datagen.flow(original_images, batch_size=32)

Parameter Tuning: Adjust transformation intensity (e.g., rotation angle, noise level) to avoid unrealistic outputs.

4. Validate Augmented Data Quality

Goal: Ensure augmented data is realistic and retains meaningful features.

Methods:

- Visual Inspection: Manually check samples (critical for images/audio).

- Automated Checks: Use scripts to verify label consistency.

- Statistical Tests: Compare feature distributions of original vs. augmented data (e.g., KL divergence).

5. Integrate with Training Pipeline

Goal: Seamlessly combine augmented data with model training.

Approaches:

- On-the-Fly Augmentation: Generate augmented batches in real-time during training (memory-efficient).

- Offline Augmentation: Pre-generate and save augmented datasets (useful for small datasets).

6. Monitor Performance and Iterate

Goal: Evaluate if augmentation improves model robustness.

Metrics to Track:

- Training Accuracy: Detect overfitting (e.g., if accuracy drops on unaugmented validation data).

- Generalization: Test on diverse real-world scenarios (e.g., low-light images for autonomous vehicles).

7. Address Ethical and Bias Concerns

Goal: Prevent augmentation from amplifying biases.

Strategies:

- Balance Classes: Oversample underrepresented groups (e.g., rare diseases in medical data).

- Audit Outputs: Check for skewed distributions (e.g., gender bias in face datasets).

Data Augmentation Tools and Libraries

Data augmentation is a critical step in the preparation of datasets for training machine learning models, especially when the available data is scarce, imbalanced, or not diverse enough. Fortunately, a wide range of tools and libraries are available to facilitate data augmentation across various types of data, including images, text, audio, and tabular data. Here's an overview of some popular data augmentation tools and libraries for these different data types:

| Library | Data Type | Strengths | Ideal Use Case | Framework Integration |

|---|---|---|---|---|

| Albumentations | Images | Fast, flexible, optimized for performance | Complex image augmentation pipelines | PyTorch, TensorFlow |

| Imgaug | Images | Rich geometric & color transforms, keypoints support | Object detection, segmentation, heatmap tasks | NumPy, TF, PyTorch |

| Augmentor | Images | Probabilistic pipelines, simple API | Quick and easy image classification tasks | Framework agnostic |

| Torchvision | Images | Real-time, native PyTorch support | Live augmentation in PyTorch training | PyTorch |

| NLPAug | Text, Speech | Synonyms, back-translation, contextual augmentation | Text classification, NLP tasks, basic audio | PyTorch, TensorFlow |

| TextAttack | Text | Augmentation + adversarial robustness testing | NLP pipelines, model hardening, fine-tuning | HF, PyTorch, TensorFlow |

| spaCy | Text | Linguistic NLP features, rule-based augmentation | Language-aware preprocessing & enrichment | spaCy-native |

| Audiomentations | Audio | Background noise, pitch/tempo, room acoustics | Speech data augmentation for ASR models | Python, NumPy |

| torchaudio | Audio | Native PyTorch audio tools, transforms, loaders | ASR model pipelines, streaming audio | PyTorch |

| librosa | Audio | Audio analysis, pitch/time transforms | Research & music/audio classification | NumPy, SciPy |

Data Augmentation with Python

Implementing data augmentation in Python can significantly improve the performance of machine learning models, especially when dealing with limited or imbalanced datasets. Here areare examples of how to perform data augmentation for various types of data—images, text, and audio—using popular Python libraries.

- Image data augmentation with TensorFlow : The ‘tf.keras.preprocessing.image.ImageDataGenerator’ class is a convenient tool for image augmentation, offering a variety of transformations.

- Text data augmentation with NLPAug: This one a library designed to augment text data using various NLP techniques, including synonym replacement and back translation.

- Audio data augmentation with librosa: It can be used for simple audio data augmentation tasks like time stretching and pitch shifting.

Data Augmentation Use Cases

| Industry | Use cases |

|---|---|

| Healthcare and medical imaging | Diagnosis and treatment enhancement with medical image augmentation; drug discovery through molecular data augmentation. |

| Autonomous vehicles | Object detection and simulation training for safer autonomous driving systems. |

| Retail and e-commerce | Improved product recommendations and automated inventory management through customer and product image data augmentation. |

| Financial services | Enhanced fraud detection and more accurate credit scoring using augmented transaction and credit history data. |

| Agriculture | Crop disease detection and yield prediction improvement through image and historical yield data augmentation. |

| Manufacturing | Automated quality control and supply chain optimization in manufacturing processes. |

| Entertainment and media | Realistic content generation in gaming and film; personalized content recommendations in media. |

| Security and surveillance | Robust face recognition for security; enhanced anomaly detection in surveillance applications. |

Best Practices

Implementing data augmentation effectively requires adherence to a set of best practices. These practices ensure that the augmentation not only contributes to model performance but also respects the integrity and distribution of the original data. Here are some key best practices in data augmentation:

Understand your data

- Data specificity: Tailor augmentation strategies to the specific characteristics and requirements of your data. What works for images may not work for text or tabular data.

- Realistic augmentations: Ensure augmented data remains realistic and representative of scenarios the model will encounter in the real world.

Balance augmentation

- Avoid overfitting: Use augmentation to combat overfitting by increasing dataset size and variability, but be cautious not to introduce noise that could lead to underfitting.

- Variety and diversity: Apply a diverse set of augmentations to cover a broad range of variations, enhancing the model's ability to generalize.

Use the right tools and techniques

- Leverage existing libraries: Utilize established data augmentation libraries and tools that are well-documented and widely used within the community.

- Experiment with advanced techniques: Consider exploring advanced techniques like GANs for generating synthetic data or domain-specific augmentations for unique challenges.

Ensure consistency and quality

- Quality control: Regularly review augmented data to ensure it maintains high quality and does not introduce unintended biases or artifacts.

- Consistent preprocessing: Apply the same preprocessing steps to both original and augmented data to maintain consistency in model training.

Ethical considerations and bias

- Monitor for bias: Be vigilant about augmentations introducing or amplifying biases in the dataset, particularly in sensitive applications.

- Privacy and ethical use: When generating synthetic data, especially involving personal information, ensure compliance with privacy regulations and ethical standards.

Continuous evaluation

- Monitor performance: Continuously evaluate the impact of data augmentation on model performance, adjusting strategies as necessary.

- Iterative process: Treat data augmentation as an iterative process, where strategies are refined based on ongoing results and insights.

Automation Prospects for Data Augmentation

Automating data augmentation holds promising prospects for enhancing the efficiency and effectiveness of training machine learning models. By intelligently selecting and applying augmentation techniques, automation can tailor the augmentation process to the specific needs of different datasets and domains. Key advancements include adaptive augmentation strategies, the use of generative models for synthetic data generation, and the integration of augmentation directly into the model training process with dynamic, real-time adjustments.

The move towards automation also involves developing policies that optimize augmentation based on model performance and creating domain-specific augmentations that respect the unique characteristics of various types of data. However, challenges such as the need for significant computational resources, maintaining data quality, and avoiding bias in augmented datasets must be addressed.

As the field progresses, we can expect further integration of AI-driven approaches in data augmentation, promising more sophisticated and efficient model training methodologies. Automation in data augmentation is set to become a key driver in the evolution of machine learning, offering scalable, customizable, and effective solutions for data enhancement.

Conclusion

Data augmentation is a smart way to improve machine learning models by creating more training examples without actually collecting new data. It works by making small changes to existing data—like flipping images, replacing words in text, or adding background noise to audio—so that the model learns to handle different variations and performs better on real-world tasks.

This technique is especially useful when there isn’t enough data available or when a model struggles to recognize patterns correctly. It helps reduce mistakes and makes AI more reliable. Many industries use data augmentation, from healthcare (to improve medical diagnoses) to self-driving cars (to recognize objects in different lighting conditions).

However, it’s important to use data augmentation wisely. If done incorrectly, it can add too much randomness and confuse the model instead of improving it. It also requires careful handling to avoid biases or unrealistic changes that could lead to poor results.

As AI technology advances, more sophisticated and automated ways to augment data are being developed, making models smarter and more adaptable. This ensures that AI can handle real-world challenges more effectively and make better decisions in various applications.