Introduction to Bounding Box Annotation

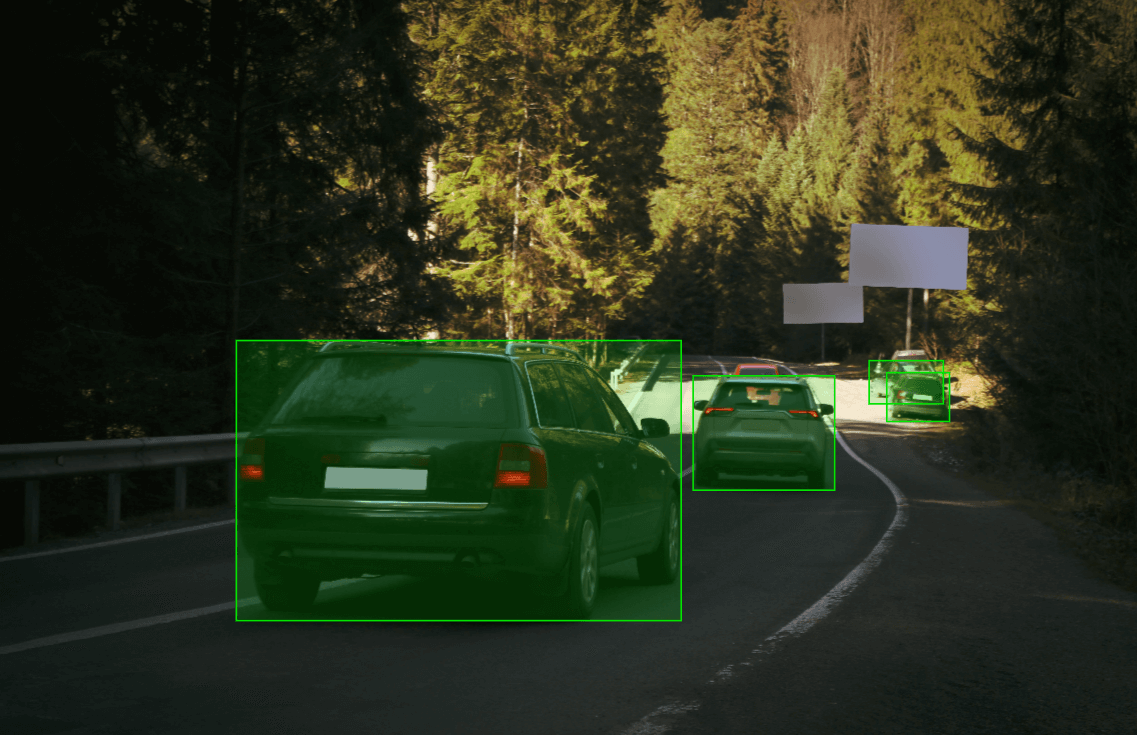

Bounding box annotation is foundational to computer vision projects that rely on accurately identifying and localizing objects within an image. This type of annotation uses rectangular boxes to enclose specific areas of interest, such as faces, objects, or other key elements. In machine learning and computer vision, these boxes allow algorithms to learn to recognize and differentiate objects, a capability that is crucial in applications ranging from autonomous driving to retail visual search.

Accuracy in bounding box annotation is essential for training robust models. As more industries invest in AI-driven solutions, annotation quality has a growing impact on model performance, directly influencing the reliability and safety of the resulting systems.

The Anatomy of a Bounding Box

Bounding boxes are rectangular annotations defined by four main components:

- X and Y Coordinates – These define the starting point (top-left corner) of the box.

- Width and Height – Measurements extend from the starting coordinates, outlining the box's size.

- Label – Assigns the object category, such as “car,” “pedestrian,” or “tree.”

- Confidence Score – Attached to model predictions rather than human-drawn annotations, indicating how likely the predicted label and box are to be correct.
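
Annotation files do not all store these components the same way. The top-left-plus-size layout described above is what COCO JSON uses; Pascal VOC XML stores the two opposite corners instead; and YOLO text files store the box center and size, each divided by the image dimensions. The sketch below converts between the three, assuming pixel coordinates measured from the image's top-left corner with y increasing downward. The `Box` class and function names are illustrative, not part of any library, and real YOLO files store a class index rather than a label string.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """A box stored as its top-left corner plus width and height, in pixels.

    Assumes the image origin is the top-left corner, with y increasing downward.
    """
    x: float
    y: float
    width: float
    height: float
    label: str


def to_corners(box: Box) -> tuple:
    """Return (x_min, y_min, x_max, y_max), the corner form used by Pascal VOC."""
    return (box.x, box.y, box.x + box.width, box.y + box.height)


def to_yolo(box: Box, img_w: int, img_h: int) -> tuple:
    """Return (x_center, y_center, width, height), each divided by the image size."""
    x_center = (box.x + box.width / 2) / img_w
    y_center = (box.y + box.height / 2) / img_h
    return (x_center, y_center, box.width / img_w, box.height / img_h)


def from_yolo(x_center, y_center, width, height, img_w, img_h, label) -> Box:
    """Invert to_yolo: scale back to pixels and move from the center to the top-left corner."""
    w_px, h_px = width * img_w, height * img_h
    return Box(x_center * img_w - w_px / 2, y_center * img_h - h_px / 2, w_px, h_px, label)


car = Box(x=100, y=50, width=200, height=100, label="car")
print(to_corners(car))          # (100, 50, 300, 150)
print(to_yolo(car, 800, 400))   # (0.25, 0.25, 0.25, 0.25)
```

Keeping one internal representation and converting only at import and export time avoids mixing conventions inside a dataset.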

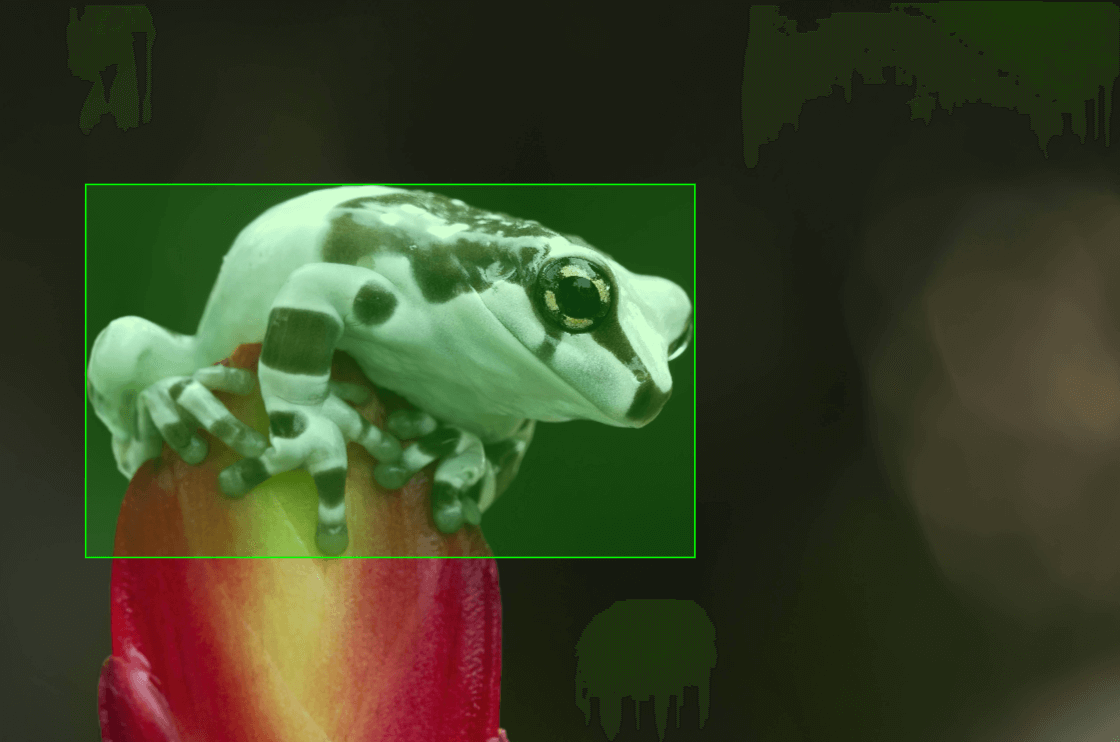

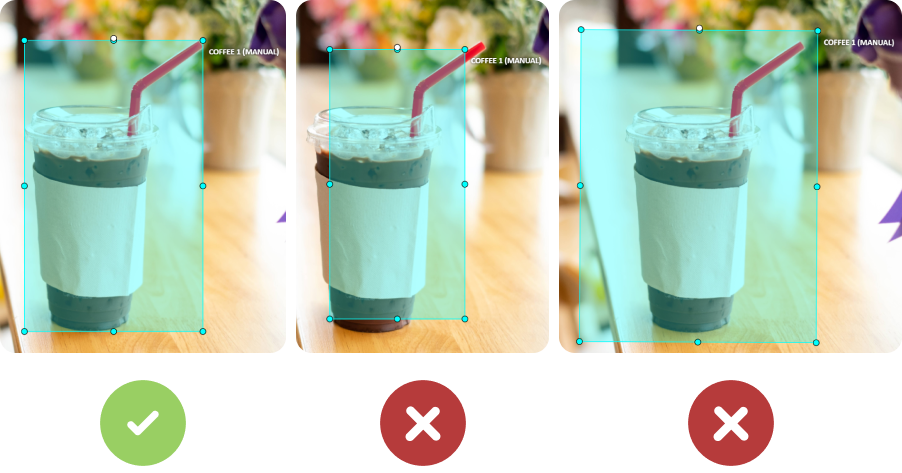

In annotating, the goal is for each box to fit tightly around the object while still covering its entire area. Showing annotators side-by-side examples of “tight” and “loose” boxes is an effective way to explain proper alignment.
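
One way to make “tight” concrete: if the object's pixels are known (for example, from a mask), the tightest box spans exactly the first and last rows and columns that contain the object. The sketch below assumes a hypothetical 0/1 mask stored as nested lists; it computes that box and reports what share of a given box is background, a rough measure of looseness.

```python
def tight_box(mask):
    """Smallest (x, y, width, height) box covering every object pixel.

    `mask` is a list of rows; 1 marks an object pixel and 0 marks background.
    Returns None when the mask contains no object pixels.
    """
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        return None
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    return (cols[0], rows[0], cols[-1] - cols[0] + 1, rows[-1] - rows[0] + 1)


def background_fraction(mask, box):
    """Share of the pixels inside `box` that are background rather than object."""
    x, y, w, h = box
    object_px = sum(mask[r][c] for r in range(y, y + h) for c in range(x, x + w))
    return 1 - object_px / (w * h)


mask = [
    [0, 0, 0, 0, 0, 0],
    [0, 0, 1, 1, 0, 0],
    [0, 1, 1, 1, 1, 0],
    [0, 0, 1, 1, 0, 0],
    [0, 0, 0, 0, 0, 0],
]
tight = tight_box(mask)   # (1, 1, 4, 3)
loose = (0, 0, 6, 5)      # a hypothetical loose box covering the whole image
print(tight, round(background_fraction(mask, tight), 2), round(background_fraction(mask, loose), 2))
```

For this irregularly shaped object, even the tight box is about one-third background, since a rectangle cannot follow the outline, while the loose box is nearly three-quarters background. Comparing a submitted box against the tight one is a simple way to flag loose annotations.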

Use Cases of Bounding Box Annotations Across Industries

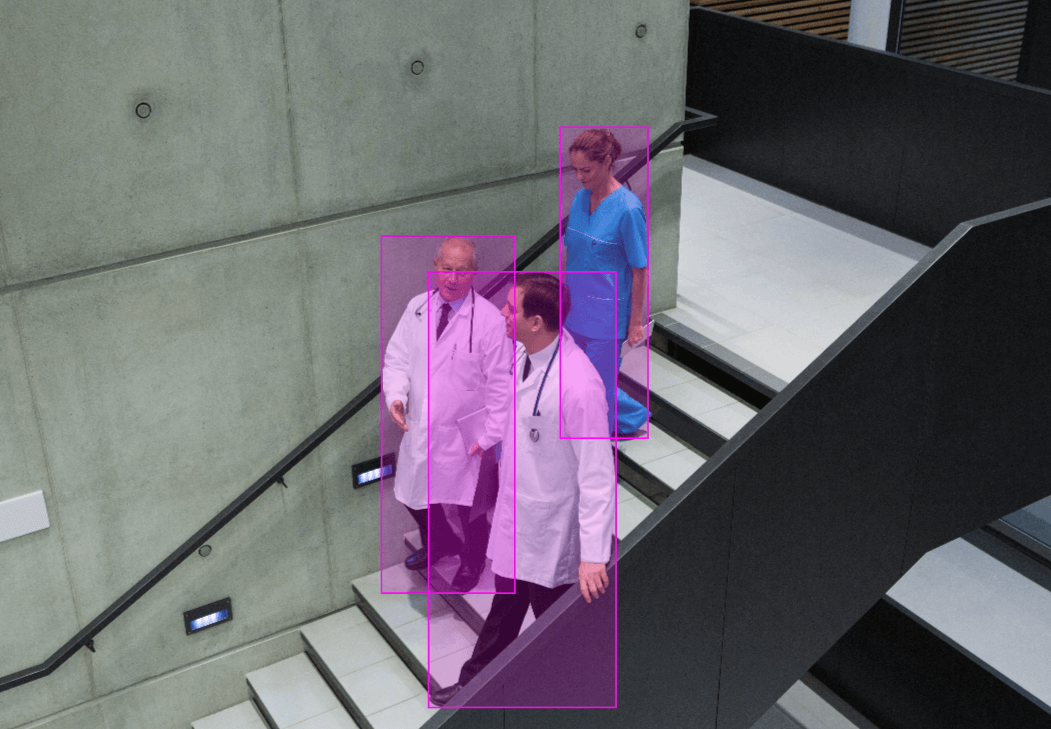

Bounding box annotations find applications across various fields, helping train models for specific, real-world tasks:

- Autonomous Driving – In self-driving cars, bounding boxes enable real-time object detection for road safety, identifying pedestrians, vehicles, and obstacles.

- Healthcare – Medical imaging leverages bounding boxes to detect anomalies, like tumors in X-rays, improving diagnostic accuracy.

- Retail and E-Commerce – Visual search tools use bounding boxes for product detection, helping customers find similar items and enhancing inventory management.

- Agriculture – Bounding boxes assist in crop monitoring and pest detection, boosting yields and protecting resources.

- Security and Surveillance – Bounding boxes aid surveillance systems in identifying individuals or suspicious objects, bolstering security through real-time monitoring.

Why Accuracy in Bounding Box Annotation is Crucial

Accurate bounding boxes enhance the performance of machine learning models by improving their ability to detect and classify objects correctly. In critical applications, such as autonomous vehicles or medical diagnostics, any inaccuracy can lead to errors, potentially causing harm or financial loss. For instance, if an autonomous car misinterprets a pedestrian due to poor annotation, it may compromise safety.

A study conducted by Tesla highlighted the necessity of precision in bounding box labeling for obstacle detection in autonomous driving. According to the study, a 2% improvement in annotation accuracy reduced collision risks by up to 10% (Tesla Autonomy Day, 2020).

Key Principles for Effective Bounding Box Annotation

Following a set of best practices is vital for ensuring the quality and consistency of annotations:

- Precision – Annotations should fit the object boundaries closely without excess space.

- Consistency – Applying the same annotation criteria across the entire dataset keeps inconsistent labels from introducing biases into the model.

- Avoiding Bias – Annotation teams should adhere to guidelines to prevent subjective decisions, like labeling “unclear” objects inconsistently.

- Context and Coverage – Avoid cutting off parts of objects or missing peripheral objects that add contextual value to the dataset.

Tools and Software for Bounding Box Annotation

Various annotation tools help streamline the annotation process, each with unique features tailored to different use cases:

| Tool | Key Features | Strengths | Limitations |
|---|---|---|---|
| CVAT | Open-source, supports video/image annotation | Free, highly customizable, supports APIs | Self-hosting requires setup, lacks robust automation |
| Labelbox | AI-assisted labeling, cloud integration | Efficient for large datasets, scalable | Costly for small teams |
| LabelImg | Free, open-source, simple UI | Great for beginners, easy setup | Limited advanced features |
| Supervisely | Collaborative workflows, flexible project management | Supports teams, many features | Steep learning curve |

Selecting a tool depends on project needs, including scale, budget, and required features. Integrating these tools into the machine learning workflow can enhance efficiency, enabling annotators to work faster and more consistently.
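
As an example of integration, LabelImg saves annotations as Pascal VOC XML files by default, which a training pipeline then has to read. The sketch below parses one such file with Python's standard `xml.etree.ElementTree` module and converts each box to the top-left-plus-size form described earlier. The sample file contents are hypothetical, and the code assumes each `<object>` has a single `<bndbox>`.

```python
import xml.etree.ElementTree as ET

# A hypothetical annotation file in Pascal VOC layout.
SAMPLE_XML = """<annotation>
  <filename>street.jpg</filename>
  <size><width>640</width><height>480</height><depth>3</depth></size>
  <object>
    <name>car</name>
    <bndbox><xmin>100</xmin><ymin>50</ymin><xmax>300</xmax><ymax>150</ymax></bndbox>
  </object>
  <object>
    <name>pedestrian</name>
    <bndbox><xmin>400</xmin><ymin>120</ymin><xmax>440</xmax><ymax>230</ymax></bndbox>
  </object>
</annotation>"""


def read_voc_boxes(xml_text):
    """Return each <object> as a dict with label, top-left x/y, width, and height."""
    root = ET.fromstring(xml_text)
    boxes = []
    for obj in root.findall("object"):
        bnd = obj.find("bndbox")
        xmin, ymin, xmax, ymax = (float(bnd.findtext(tag)) for tag in ("xmin", "ymin", "xmax", "ymax"))
        boxes.append({
            "label": obj.findtext("name"),
            "x": xmin,
            "y": ymin,
            "width": xmax - xmin,   # corner form -> width/height form
            "height": ymax - ymin,
        })
    return boxes


for box in read_voc_boxes(SAMPLE_XML):
    print(box)
```

Conventions differ on whether `xmax` and `ymax` are inclusive pixel indices (some pipelines add 1 when computing width), so it is worth confirming which convention a dataset follows before converting.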

Best Practices for Annotating with Bounding Boxes

For effective annotation, clear guidelines and a quality assurance process are essential. Consider these key practices:

- Guidelines for Teams – Establishing rules, such as box-tightness requirements and how to handle overlapping objects, ensures consistency.

- Verification Processes – Periodic checks and re-annotations help verify that the annotations align with project standards.

- Using Standard Templates – Template formats can guide annotators on how to handle commonly misinterpreted scenarios.

- Handling Edge Cases – Some objects are partially obstructed or appear distorted. It’s best to define edge case handling to keep annotations consistent.
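
Parts of these guidelines can be checked automatically before a human reviewer looks at a batch. The sketch below assumes boxes are dicts with `label`, `x`, `y`, `width`, and `height` keys; the allowed label set, minimum box size, and duplicate-overlap threshold are hypothetical project settings, not standard values.

```python
ALLOWED_LABELS = {"car", "pedestrian", "cyclist"}  # hypothetical project label set
MIN_SIDE_PX = 4        # hypothetical minimum box width/height, in pixels
DUPLICATE_IOU = 0.9    # hypothetical: same-label boxes overlapping this much are likely duplicates


def iou(a, b):
    """Intersection over Union of two boxes given as dicts with x, y, width, height."""
    inter_w = max(0.0, min(a["x"] + a["width"], b["x"] + b["width"]) - max(a["x"], b["x"]))
    inter_h = max(0.0, min(a["y"] + a["height"], b["y"] + b["height"]) - max(a["y"], b["y"]))
    inter = inter_w * inter_h
    union = a["width"] * a["height"] + b["width"] * b["height"] - inter
    return inter / union if union > 0 else 0.0


def check_image(boxes, img_w, img_h):
    """Return (box_index, problem) pairs for boxes that break the guideline checks."""
    issues = []
    for i, b in enumerate(boxes):
        if b["label"] not in ALLOWED_LABELS:
            issues.append((i, f"unknown label {b['label']!r}"))
        if b["width"] < MIN_SIDE_PX or b["height"] < MIN_SIDE_PX:
            issues.append((i, "smaller than minimum size"))
        if b["x"] < 0 or b["y"] < 0 or b["x"] + b["width"] > img_w or b["y"] + b["height"] > img_h:
            issues.append((i, "extends outside the image"))
    # Flag same-label pairs that overlap so much they look like one object annotated twice.
    for i in range(len(boxes)):
        for j in range(i + 1, len(boxes)):
            same_label = boxes[i]["label"] == boxes[j]["label"]
            if same_label and iou(boxes[i], boxes[j]) > DUPLICATE_IOU:
                issues.append((j, f"likely duplicate of box {i}"))
    return issues


image_boxes = [
    {"label": "car", "x": 10, "y": 10, "width": 100, "height": 50},
    {"label": "car", "x": 11, "y": 10, "width": 100, "height": 50},
    {"label": "truck", "x": 200, "y": 20, "width": 2, "height": 30},
    {"label": "pedestrian", "x": 600, "y": 100, "width": 60, "height": 120},
]
for issue in check_image(image_boxes, img_w=640, img_h=480):
    print(issue)
```

Automated checks like these catch mechanical errors cheaply; judgment calls such as how to box an occluded object still need human review.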

Common Challenges in Bounding Box Annotation

Annotation can become challenging when objects are occluded, vary widely in scale, or appear within complex backgrounds. Common problems include:

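- Occlusion: Partially visible objects need an explicit rule, such as boxing only the visible part or estimating the object's full extent, along with a threshold for when heavily occluded objects are omitted.

- Scale Variation: Tiny objects need careful handling, because a few pixels of slack make up a large share of a small box and quickly turn it into a “loose” annotation.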

- Crowded Scenes: In dense environments, distinguishing individual items becomes complex, requiring specialized guidelines for annotators.
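
The scale problem is easy to quantify with Intersection over Union (IoU), the area two boxes share divided by the total area they cover. The sketch below pads two hypothetical objects, 10x10 and 100x100 pixels, by the same 2-pixel margin on every side and compares each padded box to the exact one.

```python
def iou(a, b):
    """Intersection over Union of two (x, y, width, height) boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def padded(box, pad):
    """Grow a box by `pad` pixels on every side, mimicking a loose annotation."""
    x, y, w, h = box
    return (x - pad, y - pad, w + 2 * pad, h + 2 * pad)


small = (50, 50, 10, 10)     # a 10x10-pixel object
large = (50, 50, 100, 100)   # a 100x100-pixel object
for name, box in (("small", small), ("large", large)):
    print(name, round(iou(box, padded(box, 2)), 3))
```

The same 2-pixel slack drops IoU to about 0.51 for the small object, barely above the 0.5 threshold commonly used in PASCAL VOC-style evaluation, but only to about 0.92 for the large one.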

Advanced Techniques for Bounding Box Annotation

AI-assisted and semi-automated tools can make annotation more efficient, reducing human effort in the process. For instance, pre-trained models can generate bounding boxes, allowing annotators to focus on refining these initial suggestions. This workflow helps reduce time while maintaining high-quality annotations.
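
A pre-annotation step of this kind can be sketched as follows: keep only the model's confident predictions, remove duplicate boxes with per-label non-maximum suppression (NMS), and mark the rest as drafts for a human to adjust. The prediction format, the 0.5 score and overlap thresholds, and the `needs_review` status are assumptions for illustration; real detectors and annotation tools each have their own output formats.

```python
def iou(a, b):
    """Intersection over Union of two (x, y, width, height) boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(preds, iou_thresh):
    """Visit boxes from highest score down; drop any that overlap a kept box of the same label."""
    kept = []
    for p in sorted(preds, key=lambda pred: pred["score"], reverse=True):
        if all(q["label"] != p["label"] or iou(p["box"], q["box"]) <= iou_thresh for q in kept):
            kept.append(p)
    return kept


def draft_annotations(preds, min_score=0.5, iou_thresh=0.5):
    """Turn raw model predictions into draft boxes for a human to review."""
    confident = [p for p in preds if p["score"] >= min_score]
    return [
        {"box": p["box"], "label": p["label"], "status": "needs_review"}
        for p in non_max_suppression(confident, iou_thresh)
    ]


# Hypothetical output from a pre-trained detector on one image.
predictions = [
    {"box": (10, 10, 100, 50), "label": "car", "score": 0.92},
    {"box": (12, 11, 100, 50), "label": "car", "score": 0.80},         # near-duplicate
    {"box": (300, 40, 30, 80), "label": "pedestrian", "score": 0.65},
    {"box": (500, 60, 20, 20), "label": "car", "score": 0.30},         # low confidence
]
for draft in draft_annotations(predictions):
    print(draft)
```

With these hypothetical predictions, the near-duplicate car box is suppressed and the 0.30-score box is filtered out, leaving two drafts for review. Raising `min_score` trades fewer false drafts for more objects the annotator must draw from scratch.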

Other Annotation Methods in Computer Vision

Bounding boxes aren’t the only way to label images in computer vision. Different methods offer various levels of detail, depending on the task requirements.

• Image Classification is the simplest approach. It assigns a single label to an entire image but does not specify the location of objects. This method is widely used in applications like spam detection, document categorization, and scene recognition.

• Image Segmentation provides more detailed annotations by defining the exact shape and boundaries of objects. It captures object shape more precisely than bounding boxes, which is especially valuable when objects overlap.

• Semantic Segmentation labels every pixel in an image according to its category, making it useful for scenarios that require precise differentiation between objects and their backgrounds, such as medical imaging, satellite image analysis, and self-driving cars.

• Instance Segmentation goes a step further by distinguishing between multiple objects of the same category. Unlike semantic segmentation, which treats all objects of the same class as one, instance segmentation assigns unique labels to each individual object. This is crucial in applications like autonomous driving, where multiple cars and pedestrians need to be separately identified.

• Polygon Annotation offers greater precision than bounding boxes and is particularly useful for objects with irregular shapes. Instead of enclosing objects in rectangles, this technique outlines their exact contours using multiple points. It is commonly used in geospatial analysis, medical imaging (e.g., tumor segmentation), and retail shelf monitoring.

• Keypoint Annotation is used to mark specific locations on objects. This technique is essential for applications like facial recognition (e.g., detecting eye and mouth positions), human pose estimation (e.g., tracking joints for motion analysis), and gesture recognition. Keypoints allow models to analyze structural details, making them valuable for industries like sports analytics, biometrics, and robotics.
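
Because a polygon records an object's outline, a bounding box can always be derived from it (the reverse is not possible). The sketch below takes the minimum and maximum of a polygon's vertices to get its box, then uses the shoelace formula to compute the polygon's area, showing how much of the box an irregular object actually fills. The triangle is a hypothetical example.

```python
def polygon_to_box(points):
    """Tightest (x, y, width, height) box around a polygon's vertices."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (min(xs), min(ys), max(xs) - min(xs), max(ys) - min(ys))


def polygon_area(points):
    """Area of a simple (non-self-intersecting) polygon via the shoelace formula."""
    total = 0.0
    for i, (x0, y0) in enumerate(points):
        x1, y1 = points[(i + 1) % len(points)]  # wrap around to the first vertex
        total += x0 * y1 - x1 * y0
    return abs(total) / 2


# A hypothetical right-triangle-shaped object outlined with three points.
triangle = [(0, 0), (40, 0), (0, 30)]
x, y, w, h = polygon_to_box(triangle)
fill_ratio = polygon_area(triangle) / (w * h)
print((x, y, w, h), fill_ratio)   # (0, 0, 40, 30) 0.5
```

Here the triangle fills only half of its box, so a bounding box around this object is 50% background even when drawn perfectly tight.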

Evaluating Bounding Box Annotation Quality

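Bounding box quality can be measured with metrics such as Intersection over Union (IoU), which divides the area where a box overlaps the ground-truth box by the total area the two boxes cover (their union). Scores range from 0 (no overlap) to 1 (a perfect match): high IoU indicates accurate annotation, while low scores signal boxes that need adjustment.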

| Metric | Description | Use Case |
|---|---|---|
| IoU | Area of overlap divided by area of union of the predicted and ground-truth boxes | General accuracy check |
| Precision | True positives over all predicted positives, TP / (TP + FP) | Detects false positives (spurious boxes) |
| Recall | True positives over all actual positives, TP / (TP + FN) | Detects missed objects (incomplete labeling) |
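
The metrics in the table can be computed together. The sketch below assumes a single object class and boxes in (x, y, width, height) form; it matches each submitted box to at most one reference box and counts a match as a true positive when IoU reaches 0.5, a common but project-specific threshold. It is a simplified check for annotation QA; standard benchmark tooling such as the COCO evaluation API also sorts detections by confidence and averages over several IoU thresholds.

```python
def iou(a, b):
    """Intersection over Union of two (x, y, width, height) boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predicted, ground_truth, iou_thresh=0.5):
    """Greedily match each predicted box to at most one unmatched ground-truth box.

    Matched predictions are true positives; unmatched predictions are false
    positives, and unmatched ground-truth boxes are false negatives.
    """
    matched = set()
    true_pos = 0
    for p in predicted:
        best_j, best_iou = None, iou_thresh
        for j, t in enumerate(ground_truth):
            score = iou(p, t)
            if j not in matched and score >= best_iou:
                best_j, best_iou = j, score
        if best_j is not None:
            matched.add(best_j)
            true_pos += 1
    precision = true_pos / len(predicted) if predicted else 0.0
    recall = true_pos / len(ground_truth) if ground_truth else 0.0
    return precision, recall


reference = [(10, 10, 100, 50), (200, 200, 40, 40), (400, 50, 30, 60), (500, 400, 50, 50)]
submitted = [(12, 10, 100, 50), (205, 200, 40, 40), (600, 300, 20, 20)]
print(precision_recall(submitted, reference))
```

In this hypothetical example, two of the three submitted boxes match a reference box (precision 2/3), and two of the four reference objects were found (recall 0.5).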

Popular Case Studies in Bounding Box Annotation

- Tesla Autopilot – Tesla uses bounding boxes to train models on road environments, continuously updating its model with newly annotated data for improved safety.

- Medical Imaging for Tumor Detection – Bounding boxes help identify tumor regions, supporting radiologists in early diagnosis and improved patient outcomes.

- Retail Product Detection – Companies like Amazon utilize bounding boxes to enhance product search and visual recommendation engines, streamlining the customer experience.

Future Trends in Bounding Box Annotation

Bounding box annotation is evolving toward 3D bounding boxes that capture depth, which are particularly useful in robotics and AR. The integration of synthetic data and fully automated annotation is also expected to grow, improving annotation speed and dataset quality while reducing costs.

Conclusion

Bounding box annotation remains a core technique in computer vision, with applications spanning industries and supporting machine learning models with reliable data. Following best practices, including precision, consistency, and verification, helps improve annotation quality, leading to better-performing models. As technology progresses, innovations in 3D annotation, synthetic data, and automation promise to make annotation even more efficient and precise.