What is AI Content Moderation?

AI content moderation refers to the use of artificial intelligence (AI) to automatically review, filter, and manage user-generated content on digital platforms. It helps detect and remove harmful, inappropriate, or non-compliant content, including:

- Hate speech

- Violence and graphic content

- Misinformation and fake news

- Nudity and adult content

- Spam and scam attempts

AI-based content moderation relies on machine learning (ML), natural language processing (NLP), and computer vision to analyze text, images, videos, and audio. By leveraging automation, AI can handle vast amounts of content in real time, reducing the reliance on human moderators.

Importance of AI in Content Moderation

1. Handling Large-Scale Content

Social media platforms, forums, and streaming services receive billions of posts, comments, and media uploads daily. Manual moderation is impractical at this scale. AI automates the process, ensuring rapid analysis and intervention.

2. Improving User Safety and Experience

AI moderation helps create a safer online environment by removing harmful content before users see it. This reduces exposure to hate speech, explicit material, and misinformation, fostering a more positive user experience.

3. Enhancing Efficiency and Reducing Costs

Human moderation requires significant time and resources. AI automates routine filtering of clear-cut cases, which reduces operational costs and lets human moderators focus on complex cases that require nuanced judgment.

4. Ensuring Compliance with Regulations

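Governments worldwide are introducing stricter rules for online content, such as the EU's Digital Services Act and the UK's Online Safety Act. (In the USA, Section 230 works differently: it largely shields platforms from liability for user-generated content while protecting their good-faith moderation decisions.) AI helps platforms comply with these laws by enforcing content guidelines systematically and consistently.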

5. Mitigating Psychological Harm for Moderators

Manual content moderation often exposes human reviewers to disturbing and traumatic content. AI minimizes this burden by handling most of the filtering process, reducing the psychological impact on human moderators.

How AI Content Moderation Works

AI content moderation is transforming the way platforms monitor and filter content through:

1. Real-Time Detection and Removal

One of the standout features of automated content moderation is its ability to operate in real time. AI systems are designed to analyze content as soon as it is posted, whether it’s a comment, image, or video. This instantaneous review process is critical for platforms that rely on live interactions, such as streaming services or social media networks. By detecting and removing harmful material before it becomes visible to a broader audience, AI helps prevent the rapid spread of toxic content and minimizes potential harm to users.

Real-time moderation depends on infrastructure that can ingest and score large streams of content with low latency. Messaging and stream-processing platforms such as Apache Kafka and Google Cloud Pub/Sub handle ingestion at scale, while TensorFlow Serving and ONNX Runtime serve trained models efficiently. For moderation-specific tasks, hosted services such as Google Cloud's Natural Language API (text moderation) and Vision API (SafeSearch detection), the OpenAI Moderation API, and Amazon Rekognition provide ready-made classifiers that help platforms maintain a safe and engaging digital space.
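
The sketch below shows the basic shape of a real-time moderation loop. It is a minimal, single-process illustration under stated assumptions: the `posts` iterable stands in for a stream consumer such as a Kafka topic subscription, and `toy_score` is a hypothetical placeholder for a served model or a moderation API call. A production system would run many such consumers in parallel.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator


@dataclass
class Post:
    post_id: str
    text: str


@dataclass
class Decision:
    post_id: str
    score: float
    action: str  # "remove" or "publish"


def moderate_stream(
    posts: Iterable[Post],
    score_fn: Callable[[str], float],
    threshold: float = 0.8,
) -> Iterator[Decision]:
    """Score each post as it arrives and decide before it is shown.

    `posts` stands in for a stream consumer (e.g. a Kafka topic subscription);
    `score_fn` stands in for a call to a served model or moderation API.
    """
    for post in posts:
        score = score_fn(post.text)
        action = "remove" if score >= threshold else "publish"
        yield Decision(post.post_id, score, action)


def toy_score(text: str) -> float:
    """Hypothetical scorer: high score if a placeholder banned term appears."""
    return 0.95 if "badword" in text.lower() else 0.05


incoming = [Post("p1", "Great stream today!"), Post("p2", "You are a BADWORD")]
for decision in moderate_stream(incoming, toy_score):
    print(decision.post_id, decision.action)
# p1 publish
# p2 remove
```

Because `moderate_stream` is a generator, each post is decided as soon as it is pulled from the stream rather than after a whole batch is collected.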

2. Advanced Machine Learning Models

AI-based content moderation leverages sophisticated machine learning models that are continuously trained on large datasets. These models learn to identify not only overtly harmful content but also subtle patterns that may indicate emerging trends in inappropriate behavior. For instance, new forms of hate speech or coded language can be detected as the AI adapts to evolving online vernaculars.

Common machine learning models used in content moderation include:

- Transformer-based models like GPT-4, BERT, and RoBERTa for text classification and sentiment analysis.

- Ensemble learning methods such as XGBoost and LightGBM for spam detection.

- Self-supervised and contrastive models such as SimCLR (images) and CLIP (paired images and text), whose learned representations help AI recognize subtle patterns in visual and cross-modal content.

Over time, the accuracy and reliability of these models improve, leading to fewer false positives and negatives. This iterative learning process is essential for maintaining the delicate balance between effective moderation and preserving free speech.
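
False positives and false negatives are usually tracked by comparing model scores with human labels on a review sample. The sketch below uses made-up scores and labels (hypothetical data) to show how moving the flagging threshold trades one kind of error for the other.

```python
def confusion_counts(scores, labels, threshold):
    """Count outcomes when content with score >= threshold is flagged.

    labels: 1 = actually harmful, 0 = acceptable.
    Returns (true positives, false positives, false negatives, true negatives).
    """
    tp = fp = fn = tn = 0
    for s, y in zip(scores, labels):
        flagged = s >= threshold
        if flagged and y == 1:
            tp += 1
        elif flagged and y == 0:
            fp += 1  # false positive: acceptable content flagged
        elif not flagged and y == 1:
            fn += 1  # false negative: harmful content missed
        else:
            tn += 1
    return tp, fp, fn, tn


# Hypothetical model scores and ground-truth labels from a review sample.
scores = [0.95, 0.80, 0.65, 0.40, 0.30, 0.10]
labels = [1, 1, 0, 1, 0, 0]

for t in (0.5, 0.7, 0.9):
    tp, fp, fn, tn = confusion_counts(scores, labels, t)
    print(f"threshold={t}: FP={fp} FN={fn}")
# threshold=0.5: FP=1 FN=1
# threshold=0.7: FP=0 FN=1
# threshold=0.9: FP=0 FN=2
```

A stricter (higher) threshold removes false positives here but lets more harmful posts through, which is the moderation-versus-free-speech balance in numeric form.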

3. Natural Language Processing (NLP) for Context Understanding

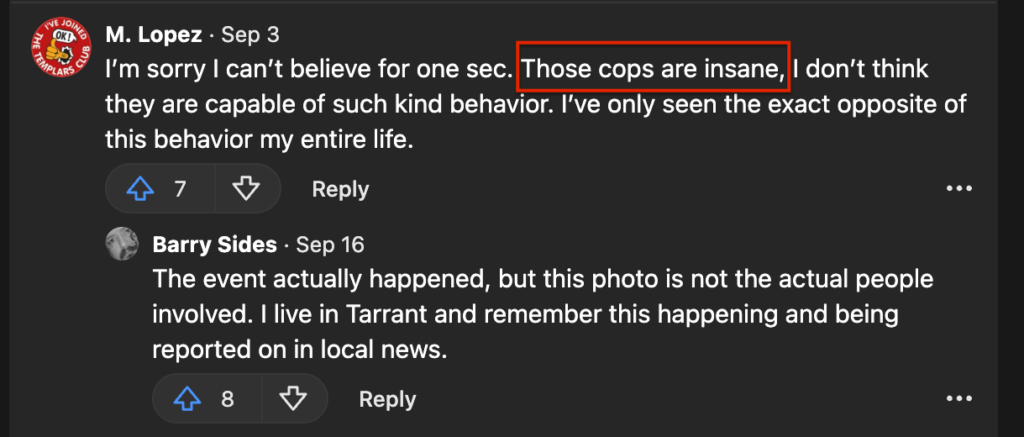

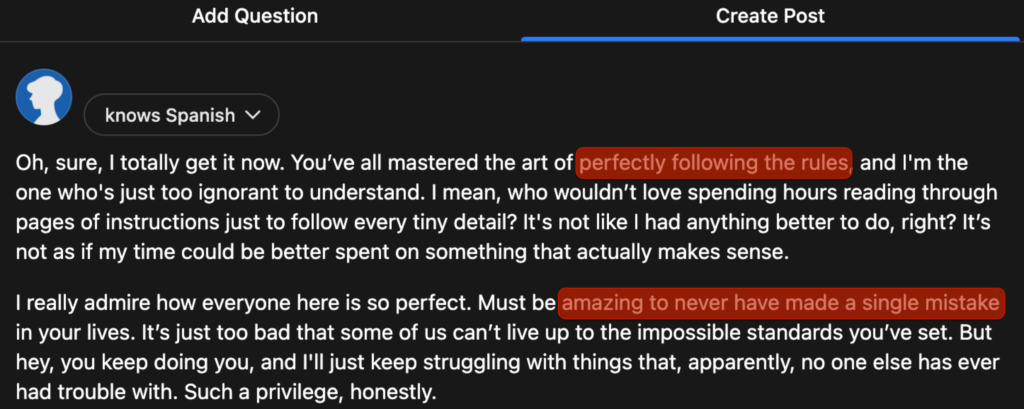

Traditional keyword filtering systems have given way to more nuanced approaches thanks to natural language processing (NLP). NLP empowers AI to understand context, sentiment, and subtext in user-generated text. This means that the AI can distinguish between a heated debate and hate speech or recognize sarcasm and contextual humor that might otherwise trigger automated flags.

Key NLP models and tools include:

- Perspective API (Google Jigsaw) – for toxicity detection in online discussions.

- OpenAI Moderation API – for detecting harmful content based on predefined categories.

- BERT and T5 – for sentiment analysis and contextual understanding.

By analyzing syntax, semantics, and even cultural references, NLP helps ensure that only genuinely harmful content is removed, while legitimate discourse remains largely unaffected.
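
As an example of calling one of these services, here is a sketch of a request to Perspective API's `comments:analyze` endpoint using only the Python standard library. The endpoint, request fields, and response path follow Perspective's public documentation as we understand it; `YOUR_API_KEY` is a placeholder, and the code builds the request without sending it so it can be inspected offline. Check the current documentation before relying on the exact field names.

```python
import json
import urllib.request

PERSPECTIVE_URL = "https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze"


def build_perspective_request(text: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a request asking Perspective for a TOXICITY score."""
    body = {
        "comment": {"text": text},
        "languages": ["en"],
        "requestedAttributes": {"TOXICITY": {}},
    }
    return urllib.request.Request(
        f"{PERSPECTIVE_URL}?key={api_key}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def toxicity_score(response: dict) -> float:
    """Extract the summary TOXICITY probability from a parsed JSON response."""
    return response["attributeScores"]["TOXICITY"]["summaryScore"]["value"]


# To call the live API (requires a real key and network access):
# req = build_perspective_request("You make great points!", "YOUR_API_KEY")
# with urllib.request.urlopen(req) as resp:
#     print(toxicity_score(json.load(resp)))
```

The returned score is a probability-like value; what counts as "toxic" (for example, above 0.8) remains a policy decision for each platform.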

This is how NLP can help detect potentially inappropriate sarcasm.

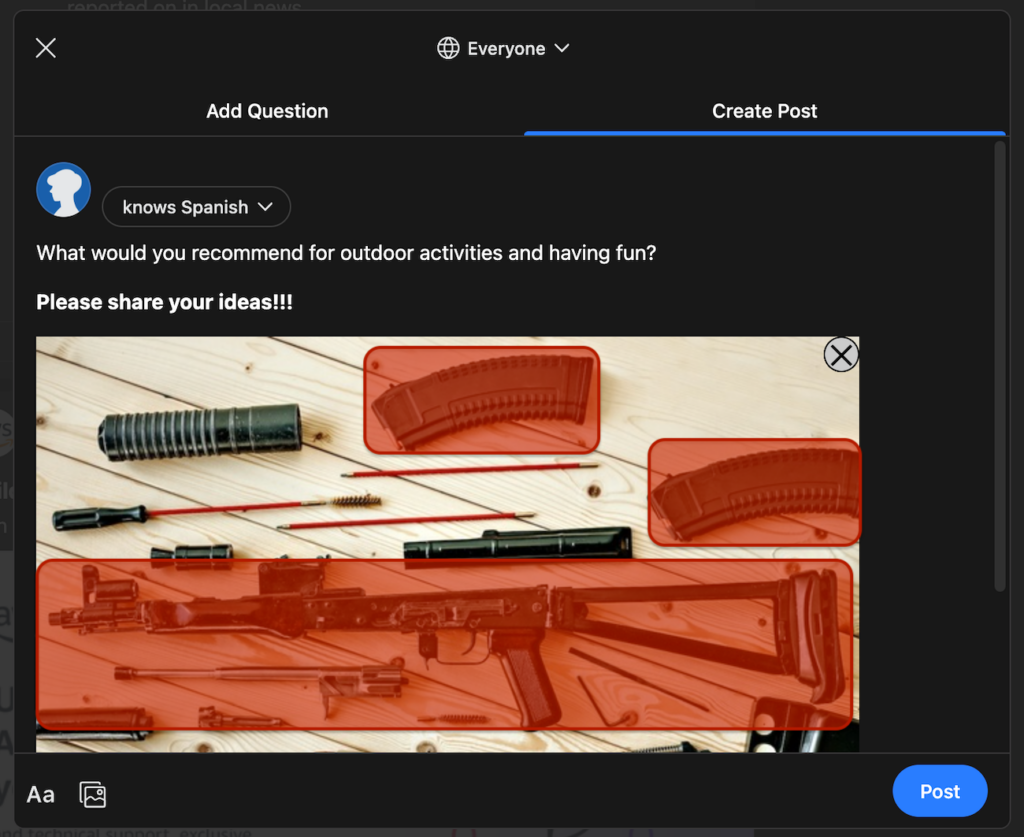

4. Computer Vision for Image and Video Moderation

When it comes to visual content, computer vision plays a pivotal role in automated moderation. AI-powered tools scan images and videos to identify elements that could be deemed inappropriate or harmful—such as explicit nudity, violent scenes, or extremist symbols.

Modern computer vision models include:

- YOLO (You Only Look Once) – real-time object detection in images and videos.

- Google Cloud Vision API and Amazon Rekognition – pre-built APIs for detecting unsafe visual content.

- Deepfake detectors trained and benchmarked on datasets such as FaceForensics++ and DeepfakeTIMIT – used to identify manipulated content.

Many of these detectors are Convolutional Neural Networks (CNNs) trained to spot the subtle artifacts that Generative Adversarial Networks (GANs) and other generative models leave in manipulated images. This helps curb the spread of deepfakes and misleading visual narratives.

For instance, this is how AI can detect weapon images and prevent their publication:
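
As a simplified illustration, suppose an object detector (for example, a YOLO model fine-tuned on weapon classes) has already returned class labels and confidence scores for an uploaded image. The publish-or-block decision is then a separate, configurable policy step. The class names and per-class thresholds below are hypothetical.

```python
from typing import NamedTuple


class Detection(NamedTuple):
    label: str         # class name predicted by the detector
    confidence: float  # detector confidence in [0, 1]


# Hypothetical policy: detector classes that block publication, with minimum confidence.
BLOCKED_CLASSES = {"gun": 0.6, "knife": 0.7}


def should_block(detections: list[Detection]) -> tuple[bool, list[str]]:
    """Return (block?, reasons) for one image's detector output."""
    reasons = [
        f"{d.label} ({d.confidence:.2f})"
        for d in detections
        if d.label in BLOCKED_CLASSES and d.confidence >= BLOCKED_CLASSES[d.label]
    ]
    return bool(reasons), reasons


# Made-up detector output for one uploaded image.
dets = [Detection("person", 0.91), Detection("gun", 0.83)]
print(should_block(dets))  # (True, ['gun (0.83)'])
```

Keeping thresholds in a per-class table lets policy teams tighten or relax a category without retraining the detector.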

5. Audio and Speech Recognition

Not limited to text and images, automated moderation also extends to audio content. AI systems equipped with speech recognition capabilities can transcribe and analyze spoken language in videos, podcasts, and live broadcasts. This allows for the detection of harmful language or misleading statements even when they are not presented in written form.

Key technologies in speech moderation include:

- Whisper (OpenAI) – state-of-the-art speech-to-text model.

- DeepSpeech (Mozilla) – open-source speech recognition.

- Google Speech-to-Text and AWS Transcribe – commercial services for converting audio into text.

By converting speech into text and applying similar NLP techniques, these systems ensure that offensive or dangerous content is promptly flagged, regardless of its medium.
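
Keeping the timestamps from the transcript lets reviewers jump straight to the flagged moment in the audio. The sketch below assumes transcript segments in the shape produced by the open-source `openai-whisper` package (a list of dicts with `start`, `end`, and `text` keys); the keyword check passed in as `is_harmful` is a hypothetical stand-in for a real NLP classifier.

```python
def flag_segments(segments, is_harmful):
    """Return (start, end, text) for transcript segments judged harmful.

    `segments` mirrors the list of dicts with "start", "end", and "text" keys
    returned by openai-whisper's transcribe(); `is_harmful` is any text classifier.
    """
    return [
        (seg["start"], seg["end"], seg["text"].strip())
        for seg in segments
        if is_harmful(seg["text"])
    ]


# With openai-whisper installed, segments could come from:
#   import whisper
#   segments = whisper.load_model("base").transcribe("episode.mp3")["segments"]
segments = [
    {"start": 0.0, "end": 4.2, "text": " Welcome back to the show."},
    {"start": 4.2, "end": 9.8, "text": " This contains BADWORD."},
]
print(flag_segments(segments, lambda t: "BADWORD" in t))
# [(4.2, 9.8, 'This contains BADWORD.')]
```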

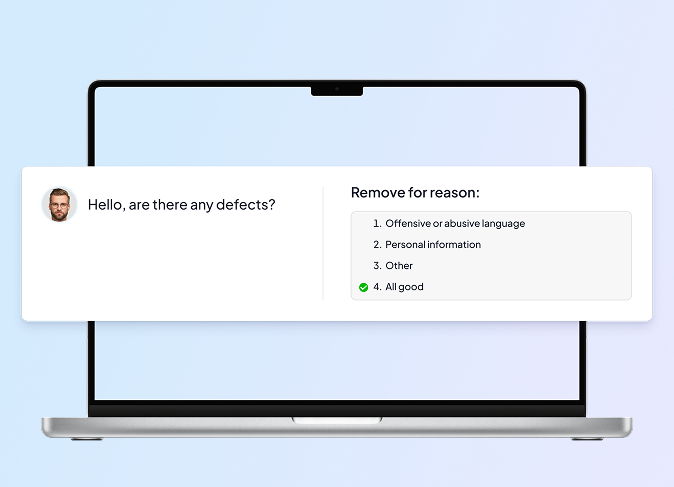

6. Hybrid Moderation Models

While automated content moderation offers impressive speed and scalability, it is most effective when combined with human oversight. Hybrid moderation models integrate AI’s efficiency with the nuanced judgment of human moderators. In this setup, AI handles the bulk of content review by filtering out clear-cut cases, while human experts review more complex or ambiguous situations.
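
This division of labor is often implemented with two confidence thresholds: content the model is very sure about is handled automatically, and everything in between goes to a person. A minimal sketch, with hypothetical threshold values:

```python
def route(score: float, remove_at: float = 0.95, approve_below: float = 0.20) -> str:
    """Route content by the model's confidence that it is harmful.

    Thresholds are hypothetical and would be tuned per policy category.
    """
    if score >= remove_at:
        return "auto_remove"   # clear-cut violation
    if score < approve_below:
        return "auto_approve"  # clearly acceptable
    return "human_review"      # ambiguous: escalate to a moderator


print([route(s) for s in (0.99, 0.50, 0.05)])
# ['auto_remove', 'human_review', 'auto_approve']
```

Widening the band between the two thresholds sends more content to humans, which raises cost but lowers the risk of automated mistakes.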

Key technologies supporting hybrid moderation include:

- Human-in-the-loop (HITL) systems – where human moderators provide feedback to AI models to improve accuracy.

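- Feedback and labeling tooling (e.g., Snorkel AI for programmatic data labeling, Hugging Face Transformers for fine-tuning models on human-reviewed examples) – for continuously improving AI models based on human feedback.

- Automated pre-screening systems (e.g., the AI classifiers Meta uses to enforce Facebook's Community Standards, and YouTube's Content ID, which automatically matches uploads against copyrighted reference files) – which screen content before it reaches human reviewers.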

This collaboration enhances accuracy while continuously refining AI models through feedback. It ensures that the system adapts to new challenges and maintains fairness in content evaluation.
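
One common way to turn moderator feedback into model improvement is active learning with uncertainty sampling: instead of labeling content at random, humans label the items the model is least sure about. This is a generic sketch of that technique, not the method of any specific tool named above; the item IDs and scores are made up.

```python
def select_for_labeling(items, k):
    """Uncertainty sampling: pick the k items whose harm score is closest to 0.5.

    items: list of (item_id, score) pairs from the current model.
    These are the cases the model is least sure about, so human labels on them
    are likely to be the most informative for the next retraining run.
    """
    ranked = sorted(items, key=lambda pair: abs(pair[1] - 0.5))
    return [item_id for item_id, _ in ranked[:k]]


queue = [("a", 0.97), ("b", 0.52), ("c", 0.08), ("d", 0.41), ("e", 0.70)]
print(select_for_labeling(queue, 2))  # ['b', 'd']
```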

Benefits of Automated Content Moderation

Speed and Scalability

Automated content moderation can process vast volumes of data in real time, far beyond what human teams can handle. This means harmful content can be detected and removed almost instantly, provided the infrastructure scales with the volume of content being generated.

Consistency and Objectivity

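AI systems apply predefined criteria uniformly across all content. This keeps enforcement consistent and avoids the variation that arises when different human reviewers interpret the same rules differently, although models can still inherit biases from their training data.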

Cost Efficiency

By automating routine moderation tasks, platforms can reduce the financial and human resource costs associated with manual moderation. This allows companies to allocate human moderators to more complex issues that require deeper contextual understanding.

For instance, a moderator reviewing short messages at about 1,000 per hour would check roughly 150,000 messages over a 150-hour working month. (At 100 messages per hour, the monthly figure would be closer to 15,000, and the team sizes below would be about ten times larger.)

Even a smaller platform handling 1.5-3 million messages a month would then need a team of 10-20 moderators, costing $20-40K a month in salaries at $2K per moderator.

Setting up AI content moderation could involve several costs:

- Servers: about $40K to buy, or about $1.5K/month to rent

- AI tools: can be open-source

- ML engineers: $3-5K per month each, for a team of 4-5

- Training data: can be open-source
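
The comparison above can be turned into a small calculator. The default values are the illustrative figures from this section (150,000 messages per moderator per month, $2K salary, $1.5K/month server rent, 4 engineers at $3K), not benchmarks; the function names are hypothetical.

```python
import math


def manual_cost(monthly_messages, per_moderator=150_000, salary=2_000):
    """Moderators needed and their monthly salary cost, using the illustrative figures."""
    moderators = math.ceil(monthly_messages / per_moderator)
    return moderators, moderators * salary


def ai_cost(server_rent=1_500, engineers=4, engineer_rate=3_000):
    """Monthly cost of a rented-server AI setup with open-source tools and data."""
    return server_rent + engineers * engineer_rate


for volume in (1_500_000, 3_000_000, 30_000_000):
    mods, cost = manual_cost(volume)
    print(f"{volume:,} msgs: {mods} moderators, ${cost:,} vs AI ${ai_cost():,}")
```

The point the numbers illustrate is that manual cost grows linearly with message volume, while the AI setup's cost in this simplified model does not depend on volume. In practice, a hybrid setup would still keep some moderators for escalations, and serving costs rise at very high volumes.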

Enhanced User Experience

Quick removal of harmful content leads to a safer, more welcoming digital environment. Users are less likely to encounter offensive material, which improves overall satisfaction and trust in the platform.

Challenges and Limitations of AI Content Moderation

Algorithmic Bias

AI systems are only as good as the data on which they are trained. If the training data is not diverse or contains inherent biases, the AI may unfairly target certain groups or viewpoints. Continuous auditing and updating of training datasets are crucial to mitigate these issues.
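
A basic audit compares error rates across groups on a labeled sample. The sketch below computes the false-positive rate (acceptable content wrongly flagged) per group; the group names and records are hypothetical, and a real audit would use far larger samples and more than one metric.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """records: iterable of (group, flagged, actually_harmful) tuples.

    Returns, per group, the share of acceptable content that was flagged.
    Large gaps between groups are a signal to re-examine training data.
    """
    fp = defaultdict(int)
    negatives = defaultdict(int)
    for group, flagged, harmful in records:
        if not harmful:
            negatives[group] += 1
            if flagged:
                fp[group] += 1
    return {g: fp[g] / negatives[g] for g in negatives}


# Hypothetical audit sample: acceptable posts written in two dialects.
sample = [
    ("dialect_a", False, False), ("dialect_a", False, False),
    ("dialect_a", True, False), ("dialect_a", False, False),
    ("dialect_b", True, False), ("dialect_b", True, False),
    ("dialect_b", False, False), ("dialect_b", False, False),
]
print(false_positive_rate_by_group(sample))  # {'dialect_a': 0.25, 'dialect_b': 0.5}
```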

Contextual Understanding

Despite advances in NLP and computer vision, AI sometimes struggles with context, sarcasm, or cultural nuances. This can lead to false positives (flagging acceptable content as harmful) or false negatives (failing to flag genuinely harmful content).

Balancing Moderation and Free Speech

Determining what constitutes harmful content without infringing on free speech is a delicate balance. Overly strict moderation can stifle legitimate discourse, while too lenient an approach may allow harmful content to proliferate.

Evolving Threats

As online behavior and harmful content trends evolve, AI models must continuously adapt. This requires constant retraining and updating to address new forms of hate speech, misinformation, and other emerging threats.

Technical Setup of AI Content Moderation

- AI tools (e.g., Hugging Face models and libraries)

- Servers (for instance, 4x NVIDIA A100 GPUs)

- Training data (e.g., open-source datasets)

- ML specialists (MLOps, DevOps, data engineers, data scientists)

Software for AI Content Moderation

The AI moderation market offers many tools that automatically detect harmful content across online platforms. Here are a few examples:

| Software | Overview | Features |
|---|---|---|
| WebPurify | WebPurify is an affordable tool that offers image, text, video, and metaverse moderation and can be integrated into a website or an app. Its AI system identifies the images most likely to contain harmful content, detects profanity, and filters explicit content from videos and live streams. | Real-time image, text, and video moderation; deep learning technology; API that integrates with various platforms; support for 15 languages |
| Lasso Moderation | Lasso Moderation moderates online conversations and images, focusing on social media and customer engagement channels. It combines AI with human oversight to ensure high-quality moderation and engagement, using algorithms designed to understand the nuances of human conversation, including slang, idioms, and cultural references. | Sentiment analysis; intent recognition; real-time text and image moderation across online channels; engagement tools for user interaction |
| Amazon Rekognition | Amazon Rekognition is an Amazon Web Services (AWS) service for image and video analysis. It helps moderate content on e-commerce websites and social media by flagging inappropriate material, and its deep learning models provide facial analysis and object and scene detection. | Facial analysis; image, text-in-image, and video moderation; integration with other AWS services for analysis and automation; two-level labeling system |

The Different Types of AI Content Moderation

AI content moderation can take various forms depending on the kind of user-generated material and the platform’s objectives. Generally, you can categorize content moderation by:

- Medium (text, images, video, audio)

- Moderation Workflow (pre-moderation, post-moderation, reactive moderation, proactive moderation)

- Nature of Review (fully automated, human-in-the-loop/hybrid)

Below is a deeper look at each classification.

Moderation by Medium

Text Moderation

Text moderation is one of the most common and mature types of AI moderation. It typically involves:

- Keyword and Phrase Detection: Identifying explicit language, hate speech, or spam phrases.

- Sentiment Analysis: Determining whether the tone is hostile, harassing, or dangerous.

- Contextual Understanding (via NLP): Using machine learning models to understand nuances like sarcasm, cultural references, or coded language that might indicate hate speech or misinformation.
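
Keyword detection is the simplest layer and is easy to evade, which is why it is usually combined with NLP models. The sketch below shows two common hardening steps: normalizing character substitutions and matching whole words, so "scampi" does not trigger "scam". The blocklist and substitution map are hypothetical examples.

```python
import re

# Hypothetical blocklist; real lists are larger and maintained per language.
BLOCKLIST = {"scam", "idiot"}

# Undo common character substitutions used to evade filters.
LEET = str.maketrans({"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "@": "a", "$": "s"})


def keyword_hits(text: str) -> list[str]:
    """Return blocklisted words found in text (sorted), matching whole words only."""
    normalized = text.lower().translate(LEET)
    words = set(re.findall(r"[a-z]+", normalized))
    return sorted(words & BLOCKLIST)


print(keyword_hits("This is a $C4M, you 1d10t"))  # ['idiot', 'scam']
print(keyword_hits("Scampi recipe"))              # []
```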

Example Use Cases

- Identifying and removing hateful comments or slurs in social media posts.

- Flagging spam, phishing attempts, or scam messages in forums or messaging apps.

- Checking user reviews or comments for profanity and harmful content before publishing.

Image Moderation

With the exponential growth of image sharing, AI-powered computer vision models help monitor visual content:

- Explicit Content Detection: Recognizing nudity or pornography.

- Violence and Graphic Content: Identifying gore or violent imagery.

- Symbol and Logo Recognition: Detecting extremist symbols, hate group logos, or unauthorized brand usage.

- Deepfake Detection: Spotting manipulated images (though this field is continually evolving).

Example Use Cases

- Scanning user profile pictures for explicit or hateful imagery.

- Detecting manipulated content intended to spread misinformation.

- Flagging image-based abuse, such as revenge porn, before it circulates widely.
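
For previously identified abusive images, platforms commonly match uploads against hash lists rather than reclassifying them; production systems use robust perceptual hashes such as Microsoft's PhotoDNA or Meta's open-source PDQ. The sketch below swaps in the much simpler average hash (aHash) to show the idea: similar images produce hashes a few bits apart, so lightly edited copies still match. It assumes the image has already been decoded, converted to grayscale, and resized to a small grid.

```python
def average_hash(pixels: list[list[int]]) -> int:
    """Average hash (aHash) of a small grayscale image.

    `pixels` is assumed to be already resized to a small fixed grid
    (commonly 8x8) with values 0-255. Each bit records whether a pixel
    is brighter than the image's mean.
    """
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def matches_known(h: int, known_hashes: set[int], max_distance: int = 5) -> bool:
    """True if h is within max_distance bits of any hash on the block list."""
    return any(hamming(h, k) <= max_distance for k in known_hashes)


# Toy 4x4 "image" and a slightly brightened copy.
orig = [[10, 200, 10, 200], [200, 10, 200, 10], [10, 200, 10, 200], [200, 10, 200, 10]]
edited = [[p + 5 for p in row] for row in orig]
print(matches_known(average_hash(edited), {average_hash(orig)}))  # True
```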

Video Moderation

Video moderation combines computer vision and sometimes audio analysis to handle moving visual content:

- Frame-by-Frame Analysis: Breaking down videos into frames for detection of explicit or violent content.

- Scene Detection: Identifying unsafe or prohibited scenes (e.g., drug use, graphic violence).

- Object and Person Recognition: Recognizing weapons, violent acts, or individuals who are banned or restricted from appearing in content.

- Automatic Speech Recognition (ASR): Converting spoken dialogue to text, enabling NLP-driven text analysis.
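
Analyzing every single frame is expensive, so a common simplification of frame-by-frame analysis is to sample frames at a fixed interval and aggregate the per-frame scores. A minimal sketch, assuming a hypothetical per-frame classifier has already produced the scores; the one-second interval and two-hit rule are illustrative choices, not a standard.

```python
def sample_frame_indices(total_frames: int, fps: float, every_seconds: float = 1.0) -> list[int]:
    """Indices of frames to analyze, one every `every_seconds` of video."""
    step = max(1, round(fps * every_seconds))
    return list(range(0, total_frames, step))


def flag_video(frame_scores: dict[int, float], threshold: float = 0.8, min_hits: int = 2) -> bool:
    """Flag the video if at least `min_hits` sampled frames score at or above threshold.

    Requiring several hits reduces flags caused by a single noisy frame.
    """
    return sum(score >= threshold for score in frame_scores.values()) >= min_hits


# A 10-second clip at 30 fps -> 300 frames, sampled once per second.
indices = sample_frame_indices(300, 30)
print(len(indices), indices[:3])  # 10 [0, 30, 60]
```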

Example Use Cases

- Monitoring livestreams to remove hateful language or explicit visuals in real time.

- Scanning user-uploaded videos for disallowed content (e.g., extremist propaganda).

Audio Moderation

While less talked about, audio moderation is increasingly important with the rise of voice-based apps, podcasts, and live audio streams:

- Speech-to-Text: Converting audio to text for further NLP analysis (detecting hate speech, harassment, misinformation).

- Tone and Sentiment Analysis: Advanced models can interpret tone, which may help identify harassment or bullying that isn’t obvious from text alone.

- Language Detection: Identifying which language is being spoken to apply appropriate content policies.

Example Use Cases

- Moderating audio chat rooms (e.g., Clubhouse, Twitter Spaces).

- Detecting hateful or extremist language in live podcasts or streaming audio.

Moderation by Workflow

Pre-Moderation

In pre-moderation, user-generated content is analyzed and approved by AI (and sometimes a human moderator) before it goes live.

- Pros: Harmful content rarely reaches the public, ensuring a safer user experience.

- Cons: Delays in publishing could frustrate users, and high accuracy is needed to avoid false positives that block legitimate content.

Example Use Cases

- Children’s platforms or apps where safety is paramount.

- Public-facing brand channels with strict content standards.

Post-Moderation

In post-moderation, content is published immediately and then reviewed afterward. If found inappropriate, it is taken down.

- Pros: Real-time engagement is possible without delays.

- Cons: Users may see harmful content before it’s removed, increasing potential risks.

Example Use Cases

- Large social media platforms with massive volumes of user posts.

- News comment sections where immediate user interaction is desired.

Reactive Moderation

Reactive moderation relies on user reports or flags. The content stays live until a complaint is filed, after which AI and/or human moderators review it.

- Pros: Reduces the workload by focusing on content already deemed suspicious.

- Cons: Offensive content might go unnoticed if not enough users report it.

Example Use Cases

- Forums or communities that emphasize user self-governance.

- Niche platforms where content volume is moderate.
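
Reactive moderation needs a rule for when user reports trigger a review. A minimal sketch, assuming a hypothetical policy of escalating once three distinct users have reported the same item (counting distinct users blunts one person spamming reports):

```python
from collections import defaultdict


class ReportTracker:
    """Escalate content for review once enough distinct users report it."""

    def __init__(self, threshold: int = 3):
        self.threshold = threshold
        self.reporters: dict[str, set[str]] = defaultdict(set)
        self.escalated: set[str] = set()

    def report(self, content_id: str, user_id: str) -> bool:
        """Record a report; return True only the first time content crosses the threshold."""
        self.reporters[content_id].add(user_id)
        if content_id not in self.escalated and len(self.reporters[content_id]) >= self.threshold:
            self.escalated.add(content_id)
            return True
        return False


tracker = ReportTracker(threshold=3)
events = [("post9", "u1"), ("post9", "u1"), ("post9", "u2"), ("post9", "u3")]
print([tracker.report(c, u) for c, u in events])  # [False, False, False, True]
```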

Proactive Moderation

In proactive moderation, AI monitors content in real time, often using predictive models. Instead of waiting for reports, the system actively flags or removes content.

- Pros: Quick response to harmful content, minimizing the risk of viral spread.

- Cons: More resources and sophisticated algorithms are needed; potential for higher false positives if models aren’t well-tuned.

Example Use Cases

- Large social networks or news platforms with high volumes of real-time interactions.

- Live video streaming services.

Moderation by Nature of Review

Fully Automated Moderation

In fully automated systems, the AI model makes decisions without human intervention:

- Pros: Highly efficient, instant decisions, scalable for large volumes of content.

- Cons: Potential for errors due to limited contextual understanding or biases in training data.

Example Use Cases

- Initial pass for content filtering on high-traffic social media.

- Automated spam detection in messaging apps.

Human-in-the-Loop (Hybrid) Moderation

Combining AI and human reviewers, human-in-the-loop moderation uses AI to handle the bulk of straightforward cases while escalating borderline or complex content to human experts:

- Pros: Balances speed and accuracy, reduces human exposure to large amounts of disturbing content, and offers nuanced judgments on complex cases.

- Cons: Requires ongoing human resources and consistent feedback loops to improve AI models.

Example Use Cases

- Review of culturally sensitive or nuanced material (sarcasm, satire, etc.).

- Appeals process for users who dispute automated content takedowns.

Best Practices for Implementing Different Types of Moderation

Combine Multiple Techniques

- Use text, image, video, and audio analysis together for a comprehensive moderation strategy.

- Employ multiple workflows (e.g., pre-moderation for sensitive categories, post-moderation for general content).

Implement Tiered Approaches

- Allow AI to handle clear-cut cases automatically.

- Route suspicious or ambiguous cases to human moderators.

Regularly Update Models

- Continuously retrain machine learning models to adapt to evolving slang, cultural references, and new forms of harmful content.

- Conduct bias audits to detect and correct algorithmic biases.

Clear and Transparent Policies

- Provide guidelines and transparency to users about how moderation decisions are made.

- Publish an appeals process for users to challenge removals or bans.

Prioritize User Safety and Mental Health

- Use automated tools to minimize the exposure of human moderators to disturbing content.

- Offer psychological support and training for human moderators to handle borderline or distressing material.

Summary

This guide has covered not only why AI moderation matters but also how it is implemented across media types and workflows. Combining text, image, video, and audio moderation with the right workflow (pre-, post-, reactive, or proactive) gives platforms a full set of strategies for maintaining a safe and engaging environment. Together with robust AI models, human oversight, and clear policies, these approaches form a powerful, scalable, and adaptable system. It helps digital platforms keep pace with evolving online behavior, protect user well-being, and meet regulatory requirements, all while balancing safety and free expression.